Environment:

- NVIDIA GeForce RTX 3050 Ti Laptop GPU

- CUDA 12.5 (latest drivers)

- Windows 11

- Miniconda 3

I’ve been trying to get PyTorch working with CUDA for two days, without success.

`torch.cuda.is_available()` always returns `False`.
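A useful first check is whether the installed PyTorch was built with CUDA at all. On a CPU-only build, `torch.version.cuda` is `None`, and `torch.cuda.is_available()` will return `False` no matter how well the drivers work. Here is a minimal sketch. The helper name `describe_torch_build` is hypothetical, and the code reports "not installed" instead of crashing if `torch` is missing from the active environment.

```python
import importlib.util


def describe_torch_build() -> dict:
    """Report how the installed PyTorch was built (hypothetical helper).

    Returns {"installed": False} if torch cannot be found in this environment.
    """
    if importlib.util.find_spec("torch") is None:
        return {"installed": False}

    import torch

    return {
        "installed": True,
        "version": torch.__version__,
        # None on a CPU-only build; e.g. "12.4" on a CUDA 12.4 build
        "built_with_cuda": torch.version.cuda,
        "cuda_available": torch.cuda.is_available(),
    }


if __name__ == "__main__":
    for key, value in describe_torch_build().items():
        print(f"{key}: {value}")
```

Run this from the same activated conda environment that the failing code uses. Otherwise it may inspect a different `torch`.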

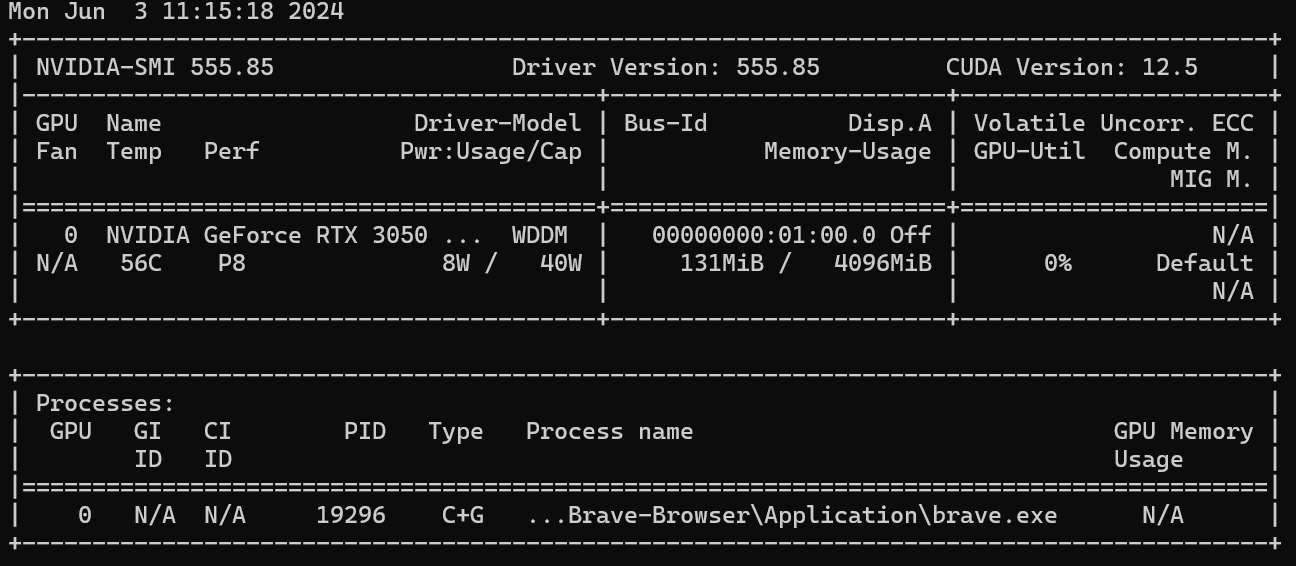

`nvidia-smi` returns:

I simply ran this command:

`conda install pytorch torchvision torchaudio pytorch-cuda=12.4 -c pytorch -c nvidia`
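To confirm which variant conda actually installed, look at the `pytorch` build string, which is the third column of `conda list pytorch`. The sketch below assumes the pytorch channel's naming convention, with strings such as `py3.12_cuda12.4_cudnn8_0` for CUDA builds and `py3.12_cpu_0` for CPU builds. Treat that convention as an assumption, not a documented contract. The function name is hypothetical.

```python
import re


def classify_conda_build(build_string: str) -> str:
    """Classify a conda `pytorch` build string (hypothetical helper).

    Assumes pytorch-channel naming such as 'py3.12_cuda12.4_cudnn8_0'
    (CUDA build) or 'py3.12_cpu_0' (CPU-only build).
    """
    match = re.search(r"cuda(\d+\.\d+)", build_string)
    if match:
        return f"cuda {match.group(1)}"
    if "cpu" in build_string:
        return "cpu"
    # Some other channel or naming scheme: can't tell from the string alone
    return "unknown"
```

If the result is `cpu` even though `pytorch-cuda=12.4` was requested, the solver most likely picked a CPU-only package. A `pytorch` coming from a channel other than `pytorch` is also worth checking.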

I have tried different `pytorch-cuda` versions (12.1 and 12.4). I have also tried reinstalling my NVIDIA drivers and recreating the Miniconda environment.
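As a simplified rule of thumb, the "CUDA Version" shown in the `nvidia-smi` header is the newest CUDA runtime the driver supports, so any `pytorch-cuda` version at or below it should work with that driver. This sketch checks only major.minor and ignores NVIDIA's finer-grained minor-version compatibility rules. The helper names are hypothetical.

```python
def parse_version(text: str) -> tuple[int, ...]:
    """'12.5.40' -> (12, 5, 40)."""
    return tuple(int(part) for part in text.split("."))


def driver_supports(driver_cuda: str, build_cuda: str) -> bool:
    """True if the driver's max CUDA version (from nvidia-smi) covers the build's runtime.

    Simplified: compares major.minor only.
    """
    return parse_version(driver_cuda)[:2] >= parse_version(build_cuda)[:2]
```

With a driver reporting 12.5, both 12.1 and 12.4 pass this check. That suggests the driver alone is unlikely to explain the failure.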

I am running this on a laptop with two GPUs: the NVIDIA card with CUDA support, and the integrated AMD GPU (part of the CPU) used for low power consumption.
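Only NVIDIA GPUs are CUDA devices, so the integrated AMD GPU should never appear in the list of devices PyTorch sees. A minimal sketch for listing what CUDA exposes through PyTorch follows. The helper name is hypothetical, and it returns an empty list when `torch` is missing or CUDA is unavailable.

```python
import importlib.util


def list_cuda_devices() -> list[str]:
    """Names of the CUDA devices visible to PyTorch (hypothetical helper).

    Returns [] if torch is not installed or torch.cuda.is_available() is False.
    """
    if importlib.util.find_spec("torch") is None:
        return []

    import torch

    if not torch.cuda.is_available():
        return []
    return [torch.cuda.get_device_name(i) for i in range(torch.cuda.device_count())]
```

On a working setup this should list only the RTX 3050 Ti.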

When I collect the environment information, CUDA is missing from the PyTorch build:

```
Collecting environment information…
PyTorch version: 2.3.0
Is debug build: False
CUDA used to build PyTorch: Could not collect
ROCM used to build PyTorch: N/A

OS: Microsoft Windows 11 Pro
GCC version: Could not collect
Clang version: Could not collect
CMake version: Could not collect
Libc version: N/A

Python version: 3.12.3 | packaged by Anaconda, Inc. | (main, May 6 2024, 19:42:21) [MSC v.1916 64 bit (AMD64)] (64-bit runtime)
Python platform: Windows-11-10.0.22631-SP0
Is CUDA available: False
CUDA runtime version: 12.5.40
CUDA_MODULE_LOADING set to: N/A
GPU models and configuration: GPU 0: NVIDIA GeForce RTX 3050 Ti Laptop GPU
Nvidia driver version: 555.85
cuDNN version: Could not collect
HIP runtime version: N/A
MIOpen runtime version: N/A
Is XNNPACK available: True
```
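The key line in this report appears to be "CUDA used to build PyTorch: Could not collect". As far as I understand `torch.utils.collect_env`, that text replaces a `None` value for `torch.version.cuda`, which usually indicates a CPU-only build. The "CUDA runtime version: 12.5.40" line comes from the system toolkit, not from PyTorch. Below is a sketch for flagging this mechanically from the pasted report. Both helpers are hypothetical, and the messages are heuristics, not definitive diagnoses.

```python
def parse_env_report(report: str) -> dict[str, str]:
    """Turn 'Key: value' lines from collect_env output into a dict."""
    fields = {}
    for line in report.splitlines():
        # Split on the first colon only, so values like timestamps stay intact
        key, sep, value = line.partition(":")
        if sep:
            fields[key.strip()] = value.strip()
    return fields


def diagnose(fields: dict[str, str]) -> str:
    """Heuristic reading of the parsed report."""
    built_with = fields.get("CUDA used to build PyTorch", "")
    if built_with in ("", "None", "Could not collect"):
        return "CPU-only PyTorch build suspected"
    if fields.get("Is CUDA available") == "False":
        return f"CUDA {built_with} build, but no usable GPU/driver was found"
    return "CUDA build and GPU look usable"
```

Feeding the report above through `diagnose(parse_env_report(...))` takes the first branch, because the build line reads "Could not collect".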

I can run other software that uses CUDA without problems (CUDA-Z and a few graphics applications), so I know the drivers are not the problem.

The only time I somehow managed to get the environment set up properly, every CUDA call hung indefinitely.
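To investigate a hang without freezing the whole session, you can run the CUDA call in a child Python process with a timeout. `subprocess.run` kills the child when the timeout expires. The helper name `probe` and the smoke-test snippet are hypothetical. The snippet only allocates one tensor on the GPU and synchronizes.

```python
import subprocess
import sys

# Hypothetical smoke test: allocate on the GPU, then wait for the device to finish
CUDA_SMOKE_TEST = (
    "import torch; x = torch.ones(1, device='cuda'); "
    "torch.cuda.synchronize(); print(x.item())"
)


def probe(snippet: str, timeout_s: float = 60.0) -> str:
    """Run a Python snippet in a child interpreter; return 'ok', 'error', or 'timeout'."""
    try:
        result = subprocess.run(
            [sys.executable, "-c", snippet],
            capture_output=True,
            text=True,
            timeout=timeout_s,  # child is killed if it runs longer than this
        )
    except subprocess.TimeoutExpired:
        return "timeout"
    return "ok" if result.returncode == 0 else "error"
```

Calling `probe(CUDA_SMOKE_TEST)` tells you whether the problem is a clean failure (`error`, with details in the child's stderr) or a genuine hang (`timeout`). Those two cases usually point in different directions.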

Is this a version mismatch? What can I do to make this work?