Hi everyone, long-time reader, first-time poster!

I'm running into an odd bug when training my model. I am training an embedding model based on the ResNet18 architecture with the final linear layer removed (I can post the architecture if needed). I have written my own loss function, which implements the “Batch-Hard” loss found in this paper. The training loop (standard zero_grad, output, loss, backward, step) works fine on CPU, but throws the following error on GPU:

    ---------------------------------------------------------------------------
    RuntimeError                              Traceback (most recent call last)
    <ipython-input-11-8cf2cfddbcc6> in <module>()
          3 output = learner.model(imgs)
          4 loss = criterion(output, labels)
    ----> 5 loss.backward()
          6 optimizer.step()
          7 break

    .../anaconda/anaconda3-2019a/lib/python3.6/site-packages/torch/tensor.py in backward(self, gradient, retain_graph, create_graph)
        105                 products. Defaults to ``False``.
        106         """
    --> 107         torch.autograd.backward(self, gradient, retain_graph, create_graph)
        108
        109     def register_hook(self, hook):

    .../anaconda/anaconda3-2019a/lib/python3.6/site-packages/torch/autograd/__init__.py in backward(tensors, grad_tensors, retain_graph, create_graph, grad_variables)
         91     Variable._execution_engine.run_backward(
         92         tensors, grad_tensors, retain_graph, create_graph,
    ---> 93         allow_unreachable=True)  # allow_unreachable flag
         94
         95

    RuntimeError: CUDA error: invalid configuration argument

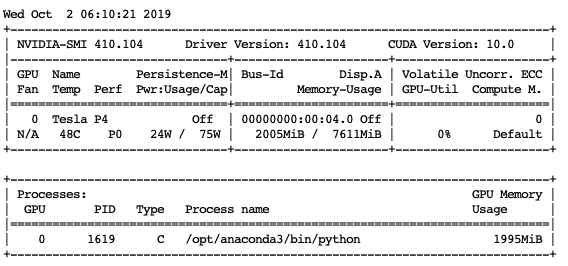

Does anyone know why a backward pass would work on CPU but not on GPU? I can confirm that my GPU works in general: I have trained other models with other custom loss functions on it.

For reference, this is the implementation of the “Batch-Hard” loss that I’ve written:

    import numpy as np
    import torch


    class BatchHardLoss(torch.nn.modules.loss._Loss):
        def __init__(self, margin, k=5, *args, **kwargs):
            super().__init__(*args, **kwargs)
            self.margin = margin
            self._k = k

        def forward(self, x, targets):
            distances = torch.cdist(x, x)  # Get the distances
            losses = []
            for t in torch.unique(targets):
                pos_idxs = (targets == t).nonzero().reshape(-1)  # Positive indices
                neg_idxs = (targets != t).nonzero().reshape(-1)  # Negative indices
                choice = np.random.choice(
                    len(pos_idxs), min(self._k, len(pos_idxs))
                ).tolist()
                anchors = pos_idxs[choice]  # Chosen anchors

                # Only compute loss if we can do it for the full batch, for simplicity
                loss = 0.0
                if len(anchors) > 1 and len(neg_idxs) > 0:
                    pos_distances = torch.index_select(
                        torch.index_select(distances, 0, anchors), 1, pos_idxs
                    )
                    pos_loss = pos_distances.max(dim=1)[0].sum()
                    neg_distances = torch.index_select(
                        torch.index_select(distances, 0, anchors), 1, neg_idxs
                    )
                    neg_loss = neg_distances.min(dim=1)[0].sum()
                    marg_loss = self.margin * len(anchors)
                    losses.append(marg_loss + pos_loss - neg_loss)
                else:
                    losses.append(torch.tensor(0.0, device=x.device, requires_grad=True))
            return torch.stack(losses).sum()
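
To make the intended computation concrete, here is a plain-Python sketch of what the `forward` above appears to compute for a small batch. It is only an illustration under assumptions: the function name `batch_hard_reference` is hypothetical, every member of a class is used as an anchor exactly once (instead of the random draw of `k` anchors), and, like the posted code, it applies no hinge to the per-anchor term. It could serve as a device-independent value to compare against when checking the tensor version on a tiny input.

```python
import math


def batch_hard_reference(embeddings, labels, margin):
    """Sum of (margin + hardest positive - hardest negative) over all anchors.

    Assumption: all positives of each class act as anchors, with no hinge,
    mirroring the structure of the posted forward() rather than any paper.
    """
    total = 0.0
    for t in sorted(set(labels)):
        pos = [i for i, lab in enumerate(labels) if lab == t]
        neg = [i for i, lab in enumerate(labels) if lab != t]
        # Same guard as the posted code: more than one anchor, at least one negative.
        if len(pos) < 2 or not neg:
            continue
        for a in pos:
            hardest_pos = max(math.dist(embeddings[a], embeddings[p]) for p in pos)
            hardest_neg = min(math.dist(embeddings[a], embeddings[n]) for n in neg)
            total += margin + hardest_pos - hardest_neg
    return total


# Four 1-D embeddings, two classes of two samples each.
points = [[0.0], [1.0], [3.0], [7.0]]
labels = [0, 0, 1, 1]
print(batch_hard_reference(points, labels, margin=0.5))  # -1.0
```

Note that `np.random.choice` samples with replacement by default, so `BatchHardLoss` only matches this reference when the random draw happens to pick each positive exactly once.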

If anyone sees any glaring errors or has any insight, I’d really appreciate it.

Thanks!

P.S. I have seen this post but can’t seem to find a similar issue when debugging.