gudiandian

August 21, 2021, 10:54am

1

I want to train multiple models one after another in a single Python script. After training each model, I run the following to release memory, so that there is enough left for training the next model:

def destruction(self):
    torch.cuda.synchronize(device=self._get_device())
    dist.destroy_process_group(group=self.group)
    del self.optimizer
    del self.ddp_model
    del self.train_loader
    torch.cuda.set_device(device=self._get_device())
    torch.cuda.empty_cache()
    torch.cuda.synchronize(device=self._get_device())

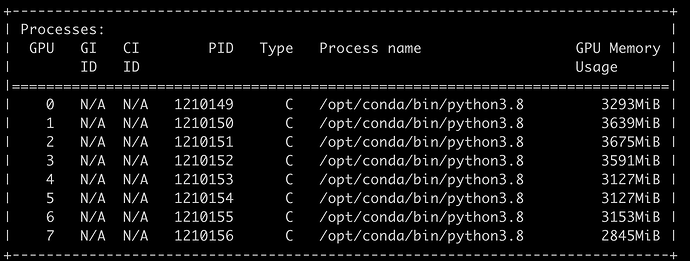

However, nvidia-smi shows that some GPU memory is still allocated after each call to destruction(), and the amount of unreleased memory increases as I train more models. For example, after training the 3rd model and calling destruction(), the memory allocation looks like this:

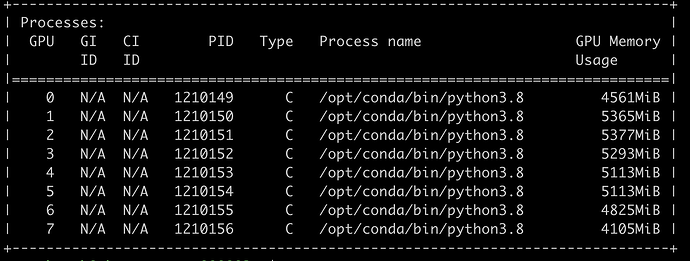

Then, after training the 4th model, the memory allocation is like this:

Eventually, this leads to an OOM error during training.

Did I miss a step needed to clear unused CUDA memory? Or did I forget to delete something that remained in CUDA memory? I would really appreciate any help!

torch.cuda.empty_cache() would free the cached memory so that other processes could reuse it.

Based on the reported issue I would assume that you haven’t deleted all references to the model, activations, optimizers, etc. so that some tensors are still alive.
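To illustrate the point about lingering references: `del` only removes a name, and the object itself is released once the last reference to it is gone. The sketch below shows this with plain Python objects. `FakeModel` and `history` are hypothetical stand-ins (not taken from the original code) for a model and for any container that still points at it, such as a list of results or a logger. The same rule applies to CUDA tensors, whose memory only goes back to the caching allocator when they are no longer referenced.

```python
import gc
import weakref


class FakeModel:
    """Hypothetical stand-in for a model that owns GPU tensors."""

    def __init__(self, name):
        self.name = name


model = FakeModel("model_1")
history = [model]            # a second reference that is easy to forget about
probe = weakref.ref(model)   # lets us observe the object without keeping it alive

del model                    # removes the name only
gc.collect()
alive_after_del = probe() is not None      # still alive: `history` holds it

history.clear()              # drop the last remaining reference
gc.collect()
alive_after_clear = probe() is not None    # now the object is gone

print(alive_after_del, alive_after_clear)
```

In a training script, typical places to look for such leftover references are stored losses or outputs that still carry a computation graph, learning-rate schedulers that hold the optimizer, and attributes on `self` that were not deleted.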


Virus_Proton

September 28, 2023, 2:15pm

3

What should I do to free CUDA memory in this situation? I have a similar problem when training a diffusion model. At the end of training I tried to free the GPU memory with torch.cuda.empty_cache() after deleting all the models, but it didn’t work for me.

My code looks something like this:

del pipeline, vae, unet

torch.cuda.empty_cache()

Can you help me with this, @ptrblck?

ptrblck

September 28, 2023, 3:46pm

4

Deleting all objects and freeing the cache should work, as seen in this simple example, so you might need to check whether you are still holding references to some objects or whether you need to trigger garbage collection.
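One case where triggering the garbage collection matters is a reference cycle: objects that point at each other are not released by `del` alone, only when Python's cycle collector runs. The following is a minimal sketch with hypothetical stand-in classes (`Pipeline` and `Component` are invented for illustration; whether a given library object actually contains such cycles is an assumption that would need checking).

```python
import gc
import weakref


class Component:
    """Hypothetical stand-in for a sub-model such as a UNet or VAE."""

    def __init__(self, owner):
        self.owner = owner  # back-reference to the owner creates a cycle


class Pipeline:
    """Hypothetical stand-in for an object that bundles several components."""

    def __init__(self):
        self.unet = Component(self)


gc.disable()  # switch off automatic collection so the demo is deterministic
try:
    pipeline = Pipeline()
    probe = weakref.ref(pipeline)

    del pipeline
    alive_after_del = probe() is not None      # the cycle keeps the object alive

    gc.collect()                               # explicitly run the cycle collector
    alive_after_collect = probe() is not None  # the cycle has been collected
finally:
    gc.enable()

print(alive_after_del, alive_after_collect)
```

In the PyTorch case this would translate to calling `gc.collect()` after the `del` statements and before `torch.cuda.empty_cache()`, since the cache can only return blocks whose tensors have already been destroyed.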

Virus_Proton

September 28, 2023, 4:29pm

5

Thanks for your quick reply. I did some checks based on your example.

I ran this simple test and got the same behaviour (GPU memory is still not released):

import diffusers

from diffusers import StableDiffusionXLPipeline

pipeline = StableDiffusionXLPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0"

)

pipeline.to('cuda:0')

memory_stats()

# 13387.36279296875

# 13654.0

del pipeline

torch.cuda.empty_cache()

memory_stats()

#13387.36279296875

#13654.0

Am I missing something?

ptrblck

September 28, 2023, 4:46pm

6

I’m not familiar with the diffusers package, but see the expected behavior using e.g. torchvision models:

import torch

import torchvision.models as models

print(torch.cuda.memory_summary())

# |===========================================================================|

# | PyTorch CUDA memory summary, device ID 0 |

# |---------------------------------------------------------------------------|

# | CUDA OOMs: 0 | cudaMalloc retries: 0 |

# |===========================================================================|

# | Metric | Cur Usage | Peak Usage | Tot Alloc | Tot Freed |

# |---------------------------------------------------------------------------|

# | Allocated memory | 0 B | 0 B | 0 B | 0 B |

# | from large pool | 0 B | 0 B | 0 B | 0 B |

# | from small pool | 0 B | 0 B | 0 B | 0 B |

# |---------------------------------------------------------------------------|

# | Active memory | 0 B | 0 B | 0 B | 0 B |

# | from large pool | 0 B | 0 B | 0 B | 0 B |

# | from small pool | 0 B | 0 B | 0 B | 0 B |

# |---------------------------------------------------------------------------|

# | Requested memory | 0 B | 0 B | 0 B | 0 B |

# | from large pool | 0 B | 0 B | 0 B | 0 B |

# | from small pool | 0 B | 0 B | 0 B | 0 B |

# |---------------------------------------------------------------------------|

# | GPU reserved memory | 0 B | 0 B | 0 B | 0 B |

# | from large pool | 0 B | 0 B | 0 B | 0 B |

# | from small pool | 0 B | 0 B | 0 B | 0 B |

# |---------------------------------------------------------------------------|

# | Non-releasable memory | 0 B | 0 B | 0 B | 0 B |

# | from large pool | 0 B | 0 B | 0 B | 0 B |

# | from small pool | 0 B | 0 B | 0 B | 0 B |

# |---------------------------------------------------------------------------|

# | Allocations | 0 | 0 | 0 | 0 |

# | from large pool | 0 | 0 | 0 | 0 |

# | from small pool | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Active allocs | 0 | 0 | 0 | 0 |

# | from large pool | 0 | 0 | 0 | 0 |

# | from small pool | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | GPU reserved segments | 0 | 0 | 0 | 0 |

# | from large pool | 0 | 0 | 0 | 0 |

# | from small pool | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Non-releasable allocs | 0 | 0 | 0 | 0 |

# | from large pool | 0 | 0 | 0 | 0 |

# | from small pool | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Oversize allocations | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Oversize GPU segments | 0 | 0 | 0 | 0 |

# |===========================================================================|

model = models.resnet50()

model.to("cuda:0")

print(torch.cuda.memory_summary())

# |===========================================================================|

# | PyTorch CUDA memory summary, device ID 0 |

# |---------------------------------------------------------------------------|

# | CUDA OOMs: 0 | cudaMalloc retries: 0 |

# |===========================================================================|

# | Metric | Cur Usage | Peak Usage | Tot Alloc | Tot Freed |

# |---------------------------------------------------------------------------|

# | Allocated memory | 100073 KiB | 100073 KiB | 100073 KiB | 0 B |

# | from large pool | 82240 KiB | 82240 KiB | 82240 KiB | 0 B |

# | from small pool | 17833 KiB | 17833 KiB | 17833 KiB | 0 B |

# |---------------------------------------------------------------------------|

# | Active memory | 100073 KiB | 100073 KiB | 100073 KiB | 0 B |

# | from large pool | 82240 KiB | 82240 KiB | 82240 KiB | 0 B |

# | from small pool | 17833 KiB | 17833 KiB | 17833 KiB | 0 B |

# |---------------------------------------------------------------------------|

# | Requested memory | 100040 KiB | 100040 KiB | 100040 KiB | 0 B |

# | from large pool | 82240 KiB | 82240 KiB | 82240 KiB | 0 B |

# | from small pool | 17800 KiB | 17800 KiB | 17800 KiB | 0 B |

# |---------------------------------------------------------------------------|

# | GPU reserved memory | 120832 KiB | 120832 KiB | 120832 KiB | 0 B |

# | from large pool | 102400 KiB | 102400 KiB | 102400 KiB | 0 B |

# | from small pool | 18432 KiB | 18432 KiB | 18432 KiB | 0 B |

# |---------------------------------------------------------------------------|

# | Non-releasable memory | 20758 KiB | 28795 KiB | 86171 KiB | 65412 KiB |

# | from large pool | 20160 KiB | 28160 KiB | 74496 KiB | 54336 KiB |

# | from small pool | 598 KiB | 2011 KiB | 11675 KiB | 11076 KiB |

# |---------------------------------------------------------------------------|

# | Allocations | 320 | 320 | 320 | 0 |

# | from large pool | 18 | 18 | 18 | 0 |

# | from small pool | 302 | 302 | 302 | 0 |

# |---------------------------------------------------------------------------|

# | Active allocs | 320 | 320 | 320 | 0 |

# | from large pool | 18 | 18 | 18 | 0 |

# | from small pool | 302 | 302 | 302 | 0 |

# |---------------------------------------------------------------------------|

# | GPU reserved segments | 14 | 14 | 14 | 0 |

# | from large pool | 5 | 5 | 5 | 0 |

# | from small pool | 9 | 9 | 9 | 0 |

# |---------------------------------------------------------------------------|

# | Non-releasable allocs | 7 | 7 | 14 | 7 |

# | from large pool | 5 | 5 | 5 | 0 |

# | from small pool | 2 | 5 | 9 | 7 |

# |---------------------------------------------------------------------------|

# | Oversize allocations | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Oversize GPU segments | 0 | 0 | 0 | 0 |

# |===========================================================================|

del model

torch.cuda.empty_cache()

print(torch.cuda.memory_summary())

# |===========================================================================|

# | PyTorch CUDA memory summary, device ID 0 |

# |---------------------------------------------------------------------------|

# | CUDA OOMs: 0 | cudaMalloc retries: 0 |

# |===========================================================================|

# | Metric | Cur Usage | Peak Usage | Tot Alloc | Tot Freed |

# |---------------------------------------------------------------------------|

# | Allocated memory | 0 B | 100073 KiB | 100073 KiB | 100073 KiB |

# | from large pool | 0 B | 82240 KiB | 82240 KiB | 82240 KiB |

# | from small pool | 0 B | 17833 KiB | 17833 KiB | 17833 KiB |

# |---------------------------------------------------------------------------|

# | Active memory | 0 B | 100073 KiB | 100073 KiB | 100073 KiB |

# | from large pool | 0 B | 82240 KiB | 82240 KiB | 82240 KiB |

# | from small pool | 0 B | 17833 KiB | 17833 KiB | 17833 KiB |

# |---------------------------------------------------------------------------|

# | Requested memory | 0 B | 100040 KiB | 100040 KiB | 100040 KiB |

# | from large pool | 0 B | 82240 KiB | 82240 KiB | 82240 KiB |

# | from small pool | 0 B | 17800 KiB | 17800 KiB | 17800 KiB |

# |---------------------------------------------------------------------------|

# | GPU reserved memory | 0 B | 120832 KiB | 120832 KiB | 120832 KiB |

# | from large pool | 0 B | 102400 KiB | 102400 KiB | 102400 KiB |

# | from small pool | 0 B | 18432 KiB | 18432 KiB | 18432 KiB |

# |---------------------------------------------------------------------------|

# | Non-releasable memory | 0 B | 43097 KiB | 147519 KiB | 147519 KiB |

# | from large pool | 0 B | 39104 KiB | 124160 KiB | 124160 KiB |

# | from small pool | 0 B | 6932 KiB | 23359 KiB | 23359 KiB |

# |---------------------------------------------------------------------------|

# | Allocations | 0 | 320 | 320 | 320 |

# | from large pool | 0 | 18 | 18 | 18 |

# | from small pool | 0 | 302 | 302 | 302 |

# |---------------------------------------------------------------------------|

# | Active allocs | 0 | 320 | 320 | 320 |

# | from large pool | 0 | 18 | 18 | 18 |

# | from small pool | 0 | 302 | 302 | 302 |

# |---------------------------------------------------------------------------|

# | GPU reserved segments | 0 | 14 | 14 | 14 |

# | from large pool | 0 | 5 | 5 | 5 |

# | from small pool | 0 | 9 | 9 | 9 |

# |---------------------------------------------------------------------------|

# | Non-releasable allocs | 0 | 12 | 28 | 28 |

# | from large pool | 0 | 6 | 10 | 10 |

# | from small pool | 0 | 6 | 18 | 18 |

# |---------------------------------------------------------------------------|

# | Oversize allocations | 0 | 0 | 0 | 0 |

# |---------------------------------------------------------------------------|

# | Oversize GPU segments | 0 | 0 | 0 | 0 |

# |===========================================================================|

Virus_Proton

September 29, 2023, 10:46am

7

Thanks @ptrblck, this was really helpful. I finally solved the problem. With the diffusers pipeline, I have to delete the individual components of the pipeline and then run torch.cuda.empty_cache(), which releases all the cached memory.

Here is the full code explanation:

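import torch

def memory_stats():
    # allocated and reserved (cached) memory in MiB
    print(torch.cuda.memory_allocated()/1024**2)
    # memory_reserved() replaces the deprecated memory_cached()
    print(torch.cuda.memory_reserved()/1024**2)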

import diffusers

from diffusers import StableDiffusionXLPipeline

pipeline = StableDiffusionXLPipeline.from_pretrained(

"stabilityai/stable-diffusion-xl-base-1.0"

)

pipeline.to('cuda:0')

memory_stats()

# 13387.36279296875

# 13654.0

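# Attempt 1: deleting only the pipeline object did not release the memory
del pipeline

torch.cuda.empty_cache()

memory_stats()

# 13387.36279296875

# 13654.0

# Attempt 2 (run instead of `del pipeline` above, since the name no longer exists after it):
# delete the individual components of the pipeline
del pipeline.text_encoder, pipeline.text_encoder_2, pipeline.tokenizer, pipeline.tokenizer_2, pipeline.unet, pipeline.vae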

torch.cuda.empty_cache()

memory_stats()

# 0.0

# 0.0
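An alternative worth trying before deleting components one by one is to run Python's garbage collector between the `del` and the `empty_cache()` call, as suggested earlier in the thread. The helper below is only a sketch: `cuda_memory_mib` and `release_cuda_memory` are hypothetical names, and the guarded import is there so the snippet also runs on a machine without PyTorch or without a GPU. It has not been verified against the diffusers pipeline shown above.

```python
import gc

try:
    import torch
except ImportError:  # keeps the sketch runnable where PyTorch is not installed
    torch = None


def cuda_memory_mib():
    """Return (allocated, reserved) in MiB, or (0.0, 0.0) when CUDA is unavailable."""
    if torch is None or not torch.cuda.is_available():
        return 0.0, 0.0
    return (
        torch.cuda.memory_allocated() / 1024**2,
        torch.cuda.memory_reserved() / 1024**2,
    )


def release_cuda_memory():
    """Collect unreachable Python objects, then hand cached blocks back to the driver."""
    gc.collect()  # destroys objects that were only kept alive by reference cycles
    if torch is not None and torch.cuda.is_available():
        torch.cuda.empty_cache()
    return cuda_memory_mib()


allocated, reserved = release_cuda_memory()
print(allocated, reserved)
```

Typical usage would be `del pipeline` followed by `release_cuda_memory()`. Note that nvidia-smi will still report the memory used by the CUDA context itself, which stays allocated until the process exits.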


s_ak

August 28, 2024, 5:29pm

8

Is there a solution for freeing the memory allocated by torch subroutines, such as torch.isin and torch.argsort? I am currently working in a Jupyter notebook, and I am getting memory errors that do not occur if I run torch.cuda.empty_cache() in a separate cell. These memory errors could be caused by Jupyter, but I am not sure how to figure out whether that is the case.

Could you clarify what “subroutines” mean in this context?

s_ak

August 28, 2024, 7:43pm

10

By subroutine I mean successive calls to torch.argsort and torch.isin in a loop.

There is nothing special in torch.argsort and torch.isin and deleting the outputs as well as clearing the cache works as described before:

import torch

print(torch.cuda.memory_allocated() / 1024**3)

# 0.0

print(torch.cuda.memory_reserved() / 1024**3)

# 0.0

# allocate 1GB

x = torch.randn(1024, 1024, 256, device="cuda")

print(torch.cuda.memory_allocated() / 1024**3)

# 1.0

print(torch.cuda.memory_reserved() / 1024**3)

# 1.0

for _ in range(10):
    x_isin = torch.isin(x, 1.)
    x_sorted_indices = torch.argsort(x)

print(torch.cuda.memory_allocated() / 1024**3)

# 3.25

print(torch.cuda.memory_reserved() / 1024**3)

# 7.251953125

del x_isin, x_sorted_indices

print(torch.cuda.memory_allocated() / 1024**3)

# 1.0

print(torch.cuda.memory_reserved() / 1024**3)

# 7.251953125

torch.cuda.empty_cache()

print(torch.cuda.memory_allocated() / 1024**3)

# 1.0

print(torch.cuda.memory_reserved() / 1024**3)

# 1.0
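The allocated values reported above can be checked by hand from the tensor shapes and dtypes. This small calculation assumes the default dtypes: `x` is float32 (4 bytes per element), `torch.isin` returns a bool mask (1 byte per element), and `torch.argsort` returns int64 indices (8 bytes per element).

```python
GIB = 1024**3
numel = 1024 * 1024 * 256        # number of elements in x

x_bytes = numel * 4              # float32 input tensor
isin_bytes = numel * 1           # bool mask returned by torch.isin
argsort_bytes = numel * 8        # int64 indices returned by torch.argsort

allocated_gib = (x_bytes + isin_bytes + argsort_bytes) / GIB
after_del_gib = x_bytes / GIB

print(allocated_gib)   # 3.25
print(after_del_gib)   # 1.0
```

The reserved value of about 7.25 GiB is larger because the caching allocator keeps freed blocks, presumably including temporary buffers used inside the loop, until `torch.cuda.empty_cache()` returns them.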