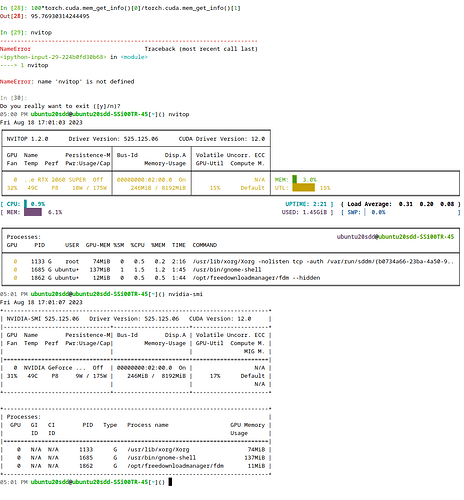

I am using AirSim with an ESPNet segmentation inference model on a GeForce RTX 2060 SUPER. It was working until CUDA started raising an out-of-memory (OOM) error. Although nvitop and nvidia-smi show free GPU memory, torch.cuda.mem_get_info() reports that at least 70% of my memory is in use (sometimes going above 90%).
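For reference, here is a minimal sketch of how the "percent filled" figure can be derived. It assumes device index 0, and the helper names `used_fraction` and `report_gpu_memory` are hypothetical, chosen only for illustration. `torch.cuda.mem_get_info()` returns `(free, total)` in bytes for the whole device, while `torch.cuda.memory_allocated()` and `torch.cuda.memory_reserved()` cover only the current process, so comparing the two may help show where the usage comes from.

```python
def used_fraction(free_bytes: int, total_bytes: int) -> float:
    """Fraction of device memory in use, given (free, total) in bytes."""
    if total_bytes <= 0:
        raise ValueError("total_bytes must be positive")
    return 1.0 - free_bytes / total_bytes


def report_gpu_memory(device: int = 0):
    """Return device-wide and per-process memory figures, or None without CUDA."""
    try:
        import torch  # imported lazily so the helper above works without PyTorch
    except ImportError:
        return None
    if not torch.cuda.is_available():
        return None
    # Device-wide numbers: these include memory held by other processes.
    free_b, total_b = torch.cuda.mem_get_info(device)
    return {
        "device_used_fraction": used_fraction(free_b, total_b),
        # Per-process numbers from PyTorch's caching allocator, in bytes.
        "allocated_by_this_process": torch.cuda.memory_allocated(device),
        "reserved_by_this_process": torch.cuda.memory_reserved(device),
    }


if __name__ == "__main__":
    print(report_gpu_memory())
```

If the device-wide fraction is high while the per-process numbers are small, the memory is presumably held by something other than this Python process.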

I tried torch.cuda.empty_cache() and gc.collect() outside the program, but they aren’t helping.
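A minimal sketch of the cleanup attempt described above, assuming it is run in a separate Python session. The function name `release_cached_gpu_memory` is hypothetical. Note that `torch.cuda.empty_cache()` only releases unused blocks cached by the calling process's own allocator, which may be why running it outside the original program has no visible effect.

```python
import gc


def release_cached_gpu_memory() -> bool:
    """Run garbage collection, then release this process's cached CUDA blocks.

    Returns True if empty_cache() was called, False if PyTorch or CUDA is
    unavailable.
    """
    # Drop unreachable Python objects first so their tensors can be freed.
    gc.collect()
    try:
        import torch
    except ImportError:
        return False
    if not torch.cuda.is_available():
        return False
    # Only affects memory cached by the current process's allocator.
    torch.cuda.empty_cache()
    return True


if __name__ == "__main__":
    print(release_cached_gpu_memory())
```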

How can I free up the occupied memory?

What could be the reason torch.cuda.mem_get_info() is showing the memory as filled? Please suggest some ways to free GPU memory that is still occupied by programs that have already ended.