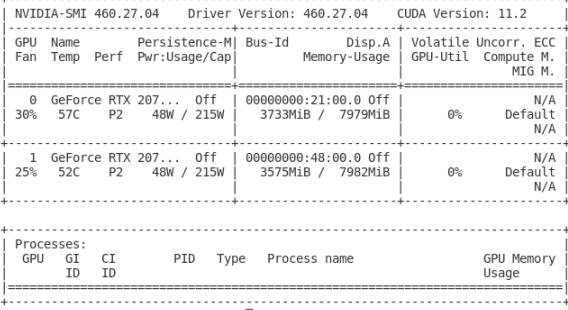

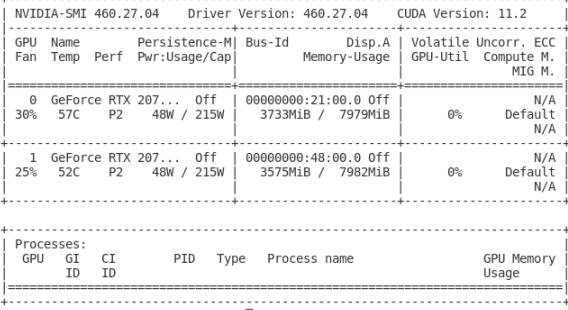

No process (PID) is listed as running on the GPU, so why does CUDA still show memory allocated?

Are you sure this is a PyTorch-related question? If so, why?

Look with htop or top for ghost processes that were not properly removed and kill them.
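If you would rather check this from a script, here is a minimal sketch that asks `nvidia-smi` which processes it reports as holding GPU memory. It assumes `nvidia-smi` is on your `PATH`. The helper names `parse_compute_apps` and `list_gpu_processes` are made up for this example, and the query only shows what `nvidia-smi` itself can see, so an empty result does not prove that nothing is holding memory.

```python
import subprocess


def parse_compute_apps(csv_text):
    """Parse 'pid, process_name, used_memory' rows into a list of dicts."""
    apps = []
    for line in csv_text.strip().splitlines():
        if line.count(",") < 2:
            continue  # skip blank or malformed rows
        # Split the PID off the front and the memory off the back, so a
        # process name that itself contains a comma stays in one piece.
        pid, rest = line.split(",", 1)
        name, mem = rest.rsplit(",", 1)
        pid = pid.strip()
        if not pid.isdigit():
            continue
        apps.append({"pid": int(pid), "name": name.strip(), "used_memory": mem.strip()})
    return apps


def list_gpu_processes():
    """Run nvidia-smi and return the compute processes it reports."""
    result = subprocess.run(
        [
            "nvidia-smi",
            "--query-compute-apps=pid,process_name,used_memory",
            "--format=csv,noheader,nounits",
        ],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_compute_apps(result.stdout)
```

Calling `list_gpu_processes()` returns one dict per reported process. Any PID that should no longer be running is a candidate to kill, as suggested above.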

Or, as mentioned here [1]: Open Task Manager (Ctrl+Alt+Del) and release the memory by ending unnecessary tasks. This worked in my case. If you use Colab, go to Runtime and select "Factory reset runtime".

[1] Originally posted by @vignesh-creator in "RuntimeError: CUDA out of memory. Tried to allocate 12.50 MiB (GPU 0; 10.92 GiB total capacity; 8.57 MiB already allocated; 9.28 GiB free; 4.68 MiB cached)", Issue #16417, pytorch/pytorch, GitHub