Hi,

I am trying to use multiprocessing in my Python program. I created two processes: one runs a neural network and the other runs a computationally heavy function. I want the neural network to run on the GPU and the other function on the CPU, so I moved the network to the GPU with the cuda() method. But when I run the program, I get the following error:

RuntimeError: Cannot re-initialize CUDA in forked subprocess. To use CUDA with multiprocessing, you must use the 'spawn' start method

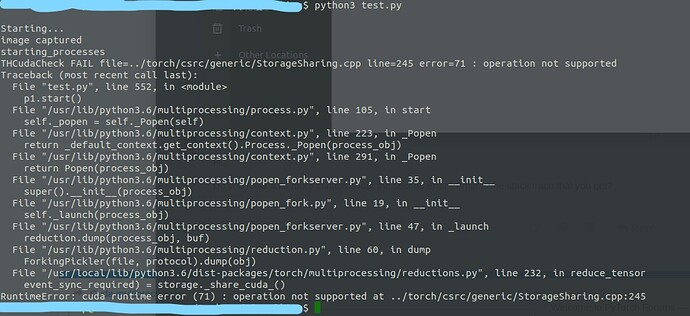

So I tried the spawn and forkserver start methods, but then I got a different error:

RuntimeError: cuda runtime error (71) : operation not supported at …/torch/csrc/generic/StorageSharing.cpp:245

I have tried both Python 3's multiprocessing and torch.multiprocessing, but neither worked for me.
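
For reference, here is a minimal sketch of one arrangement that might sidestep both errors. It rests on an assumption, not a confirmed diagnosis: that the second error comes from a CUDA-backed model being pickled and handed to the child process. In the sketch the model is built inside the child under the spawn start method, and only plain Python data travels through the queues. The names `heavy_cpu_work`, `gpu_worker`, `cpu_worker`, `main`, and the small `torch.nn.Linear` model are hypothetical stand-ins, not the code from this post.

```python
import multiprocessing as mp


def heavy_cpu_work(n):
    """Hypothetical stand-in for the CPU-bound function."""
    return sum(i * i for i in range(n))


def gpu_worker(result_queue):
    """Hypothetical stand-in for the neural-network process."""
    # torch is imported in the child so the parent never initializes CUDA.
    import torch

    device = "cuda" if torch.cuda.is_available() else "cpu"
    # The model is created here, inside the child, instead of being passed in.
    model = torch.nn.Linear(4, 2).to(device)
    with torch.no_grad():
        out = model(torch.randn(1, 4, device=device))
    # Send plain Python lists back, not a CUDA tensor.
    result_queue.put(out.cpu().tolist())


def cpu_worker(n, result_queue):
    """Runs the CPU-bound function and reports its result."""
    result_queue.put(heavy_cpu_work(n))


def main():
    # 'spawn' starts fresh interpreters, so no CUDA state is inherited by fork.
    ctx = mp.get_context("spawn")
    gpu_q, cpu_q = ctx.Queue(), ctx.Queue()
    procs = [
        ctx.Process(target=gpu_worker, args=(gpu_q,)),
        ctx.Process(target=cpu_worker, args=(1000, cpu_q)),
    ]
    for p in procs:
        p.start()
    # Read the results before joining so the queues are drained first.
    gpu_result, cpu_result = gpu_q.get(), cpu_q.get()
    for p in procs:
        p.join()
    return gpu_result, cpu_result
```

With spawn, `main()` has to be called from under an `if __name__ == "__main__":` guard in the script, because each child re-imports the main module on startup.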