I am getting a CUDA out-of-memory error when running the following code:

```python
import torch

assert torch.cuda.is_available()

x = torch.randn(1)

x.cuda()  # RuntimeError: CUDA error: out of memory
```

Running on a GeForce GTX 750 with Ubuntu 18.04. How can there not be enough memory for a single float?


Could you check the current memory usage on the device via nvidia-smi and make sure that no other processes are running?

Note that besides the tensor, the CUDA context also has to be allocated on the device, which might take a few hundred MBs.
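One way to see the gap between what the tensor itself needs and what the device has to provide is to compare PyTorch's own counters with the device-wide numbers. This is a minimal sketch, assuming a PyTorch version that provides `torch.cuda.mem_get_info`; the helper name `report_gpu_memory` is hypothetical. `memory_allocated` only counts tensor storage, while the device-wide "used" value also includes the CUDA context and every other process on the GPU (e.g. the desktop).

```python
import torch

def report_gpu_memory(device: int = 0) -> dict:
    """Hypothetical helper: compare PyTorch's counters with the device-wide view (values in MiB)."""
    if not torch.cuda.is_available():
        return {}
    # Device-wide numbers: include CUDA contexts and memory used by other processes.
    free, total = torch.cuda.mem_get_info(device)
    return {
        "allocated_by_tensors": torch.cuda.memory_allocated(device) / 2**20,
        "reserved_by_caching_allocator": torch.cuda.memory_reserved(device) / 2**20,
        "used_on_device": (total - free) / 2**20,
        "total": total / 2**20,
    }

if torch.cuda.is_available():
    # A single float; creating it also creates this process's CUDA context.
    x = torch.randn(1, device="cuda")
    print(report_gpu_memory())
```

On a card that is already nearly full, the context creation in this sketch can fail with the same error as the original snippet.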

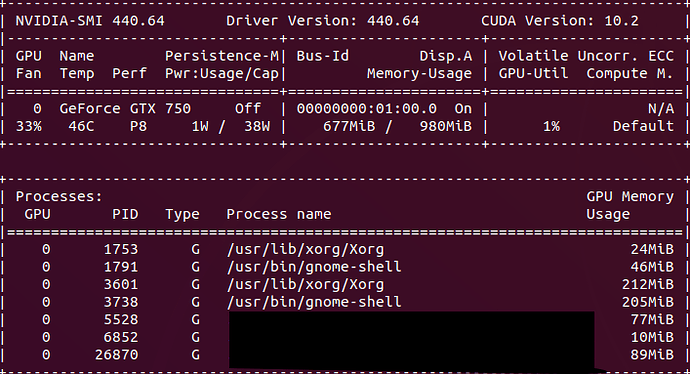

Thank you for the response. Here is the nvidia-smi output. It seems that I only have ~300 MB left while idle. I probably do not have enough VRAM for the CUDA context that you mention.

Yeah, your GPU doesn’t seem to have enough memory, so you could try to run the code on e.g. Colab.

Hello, … I’m getting the same problem and I’m new to using a local GPU.

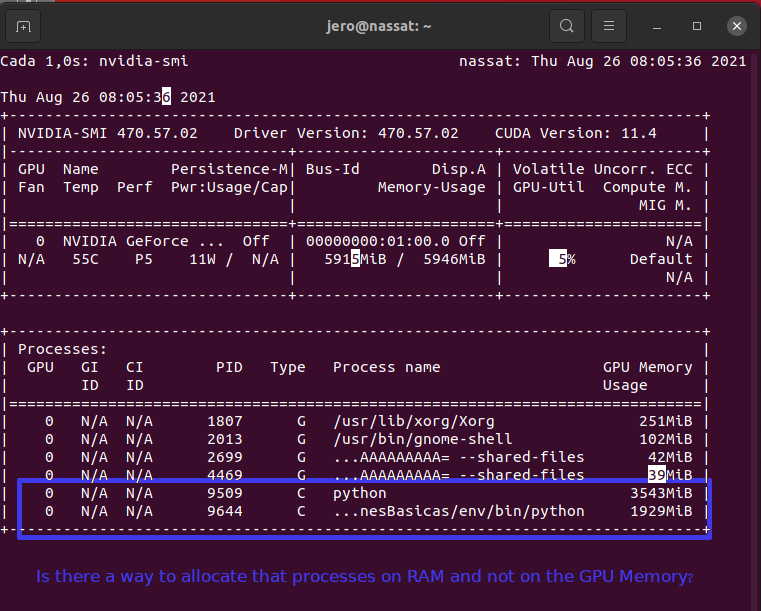

I’ve using an RTX 3060, and when launching my code, two processes, python and a service I run are allocated un the VRAM (not only the models…):

Thank you very much in advance

Are you seeing this memory usage by allocating a single scalar CUDATensor only?

Based on the GPU memory usage for the Python process I would assume that you are running a larger workload.

I don’t know what `...nesBasicas/env.bin/python` executes, but I would generally recommend closing all unnecessary processes so that your main training script can use as much memory as possible.
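To find out which processes actually hold device memory before closing any, one option is to ask `nvidia-smi` for its compute-process list. This is a minimal sketch, assuming `nvidia-smi` is on the `PATH`; the function names are hypothetical. The query lists compute processes only; graphics processes such as the desktop session show up in the plain `nvidia-smi` table instead.

```python
import subprocess

def parse_compute_apps(csv_text: str) -> list:
    """Parse output of `--query-compute-apps=pid,process_name,used_memory --format=csv,noheader,nounits`."""
    apps = []
    for line in csv_text.strip().splitlines():
        if line.count(",") < 2:
            continue  # not a data row
        pid, rest = line.split(",", 1)
        name, used = rest.rsplit(",", 1)  # rsplit keeps commas inside the process name intact
        used = used.strip()
        apps.append({
            "pid": int(pid.strip()),
            "process_name": name.strip(),
            # Some drivers report "[N/A]" instead of a number.
            "used_MiB": int(used) if used.isdigit() else None,
        })
    return apps

def list_gpu_processes() -> list:
    """Hypothetical helper: return compute processes using the GPU, or [] if nvidia-smi is unavailable."""
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-compute-apps=pid,process_name,used_memory",
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_compute_apps(out)

for app in list_gpu_processes():
    print(app)
```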

Thanks for your reply…

I’m loading four (“only four…”) BERT models… yes, the four models are really large… I’m working on Emotive Computing.

I’m not training… I trained on Colab, and now I’m only loading the models for prediction (for now) on my local machine…

The last process is a Flask web service that loads the models and makes predictions.

As I’m a newbie with CUDA on a local machine…

The first question is:

- Am I doing this correctly, or am I making some mistakes? Can I move Python and the web service (WS) to CPU memory (I think it is not possible…)?

Thanks in advance

Jeronimo

Do you know how much memory a single model would take? Based on the description of your use case, I guess you are working on an inference use case? My guess would be that 6GB is quite limited for hosting 4 BERT models, but it of course depends on the particular model architecture you are using.
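A rough lower bound can be computed from the parameter count alone, since each float32 parameter takes 4 bytes. This sketch assumes the commonly cited sizes of about 110M parameters for BERT-base and about 340M for BERT-large; the helper names are hypothetical. It counts weights only, so the CUDA context, the caching allocator, and activations come on top.

```python
def param_memory_gib(num_params: int, bytes_per_param: int = 4) -> float:
    """Memory for the weights alone (float32 = 4 bytes, float16 = 2 bytes per parameter)."""
    return num_params * bytes_per_param / 2**30

def model_param_bytes(model) -> int:
    """Exact parameter bytes of an already-loaded torch.nn.Module (buffers not included)."""
    return sum(p.numel() * p.element_size() for p in model.parameters())

# Assumed, commonly cited parameter counts.
for name, n in [("BERT-base", 110_000_000), ("BERT-large", 340_000_000)]:
    each = param_memory_gib(n)
    print(f"{name}: {each:.2f} GiB each, {4 * each:.2f} GiB for four")
```

Under these assumptions, four BERT-base models need roughly 1.64 GiB for their weights and four BERT-large models roughly 5.07 GiB, which would leave little of a 6GB card for everything else. For the real models, `model_param_bytes(model)` gives the exact parameter size once a model is loaded.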

Is this process loading other models as well, as it’s using GPU memory, too?

All PyTorch objects are created on the CPU by default. Unless you push them manually to the GPU via `.cuda()` or `.to('cuda')`, the GPU won’t be used.
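A quick check of the default placement, using a small `nn.Linear` as a stand-in model (an assumption, not one of the models from this thread). Note the asymmetry: `Tensor.to()` returns a new tensor and leaves the original where it was, while `Module.to()` moves the module's parameters in place.

```python
import torch
import torch.nn as nn

x = torch.randn(1)
model = nn.Linear(4, 2)  # stand-in model
print(x.device, next(model.parameters()).device)  # cpu cpu

if torch.cuda.is_available():
    x_gpu = x.to("cuda")  # tensors: returns a copy on the GPU; x itself stays on the CPU
    model.to("cuda")      # modules: parameters are moved in place (the module is also returned)
    print(x.device, x_gpu.device, next(model.parameters()).device)  # cpu cuda:0 cuda:0
```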

I don’t know the memory usage of every model, but yes, I need the 4 models to detect and infer emotions in texts (I use two “emotion classifier” models, one “sentiment analysis” model, and one “toxicity classifier” model). Maybe 6 GB is quite limited.

No, this process is the only one that loads the models, makes predictions with them, and returns the results as JSON.

Exactly… I load the models and move them to the GPU using `model.to(device)`, where `device` is a variable that stores `'cuda:0'` if a GPU is available and `'cpu'` otherwise.
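A minimal sketch of that pattern for an inference-only service, assuming the models are loaded once at startup and reused for every request. `load_classifier`, the model names, and the `nn.Linear(768, 2)` stand-in are hypothetical placeholders for the real BERT classifiers. `eval()` together with `torch.inference_mode()` keeps autograd from recording anything during prediction, so no activation memory is held for a backward pass that never happens.

```python
import torch
import torch.nn as nn

# GPU if present, otherwise CPU.
device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

def load_classifier() -> nn.Module:
    # Hypothetical stand-in for loading one fine-tuned BERT classifier from disk.
    model = nn.Linear(768, 2)
    return model.to(device).eval()  # eval() disables dropout for inference

# Load every model once at startup (names are hypothetical).
MODELS = {name: load_classifier() for name in ("emotion_1", "emotion_2", "sentiment", "toxicity")}

@torch.inference_mode()  # no autograd graph is recorded during prediction
def predict(name: str, features: torch.Tensor) -> torch.Tensor:
    logits = MODELS[name](features.to(device))
    return logits.argmax(dim=-1).cpu()  # bring the result back to the CPU for serialization
```

A request handler would then call e.g. `predict("sentiment", features).tolist()` and return the result as JSON.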

But… I assume that by moving the models to the GPU, the Python process is also moved to the GPU…

The Python process itself will not be moved to the GPU (GPUs cannot run the Python interpreter), but it will initialize the CUDA context, load data (e.g. inputs, model parameters, etc.) onto the GPU, and launch CUDA kernels for the computation. nvidia-smi shows how much GPU memory each process allocates on the device (the Python process itself is still executed on the CPU).
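The CUDA context is created lazily, so a PyTorch process typically appears in `nvidia-smi` only once it performs its first CUDA operation (for example, moving a model with `.to(device)`). A minimal sketch to observe this and to get the PID to match against the `nvidia-smi` process list; the variable name `probe` is just illustrative.

```python
import os
import torch

print("PID to look for in nvidia-smi:", os.getpid())
# In a fresh process, importing torch (or calling is_available) does not create a CUDA context.
print("CUDA context created:", torch.cuda.is_initialized())

if torch.cuda.is_available():
    probe = torch.zeros(1, device="cuda")  # the first CUDA operation initializes the context
    print("CUDA context created:", torch.cuda.is_initialized())
```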

So, is it correct that the last two processes in the image are allocated there?

I don’t know what your Flask application is doing, but assuming it loads the model, pushes it to the GPU, computes the predictions, and forwards them to the user, I don’t know what the other “python” process is doing or whether it’s created by the Flask app.

My flask Application does exactly what you say.

The other python application appears when I launch the Flask app (so it’s mine), but I don’t know exactly what it is… Maybe the Python interpreter?

The other application is using the default python while the Flask app seems to use its own python shipped in an environment.

In any case, I’m not familiar with Flask, so I don’t know if it’s supposed to spawn other processes or why this process is using device memory.

OK, friend, thank you very, very much for your help…

The two processes go to the GPU when I move the models to the GPU, so I think it is necessary for them to be on the GPU, but I’ll research it…