Hello all,

I have a server with 8 x GeForce GTX 1080 Ti GPUs and I am trying to implement distributed training based on this GitHub example: https://github.com/NVIDIA/apex/blob/master/examples/imagenet/main_amp.py

This example was written for ImageNet, but I made a few modifications for CIFAR-10:

I couldn't make this function work with CIFAR-10, so I removed it and skipped that step:

```python
import numpy as np
import torch

def fast_collate(batch, memory_format):
    imgs = [img[0] for img in batch]
    targets = torch.tensor([target[1] for target in batch], dtype=torch.int64)
    # Expects PIL images: .size is a (width, height) tuple
    w = imgs[0].size[0]
    h = imgs[0].size[1]
    tensor = torch.zeros((len(imgs), 3, h, w), dtype=torch.uint8).contiguous(memory_format=memory_format)
    for i, img in enumerate(imgs):
        nump_array = np.asarray(img, dtype=np.uint8)
        if nump_array.ndim < 3:
            # Grayscale image: add a trailing channel axis
            nump_array = np.expand_dims(nump_array, axis=-1)
        nump_array = np.rollaxis(nump_array, 2)  # HWC -> CHW
        tensor[i] += torch.from_numpy(nump_array)
    return tensor, targets
```
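One likely reason `fast_collate` fails on CIFAR-10 is that it reads `imgs[0].size[0]`, which only works for PIL images (where `.size` is a `(width, height)` tuple); if the dataset transform already applies `ToTensor()`, `size` is a method and the indexing breaks. The sketch below is a numpy-only version of the same packing logic, assuming each sample is an H x W x C (or H x W) `uint8` array, for example rows of torchvision's `CIFAR10(...).data`. The name `collate_hwc_uint8` is hypothetical, not part of apex.

```python
import numpy as np

def collate_hwc_uint8(batch):
    """Pack (image, label) pairs into an NCHW uint8 array plus int64 labels.

    Hypothetical helper: assumes each image is an H x W x C (or H x W) uint8
    array rather than a PIL image as fast_collate expects.
    """
    imgs = [np.asarray(sample[0], dtype=np.uint8) for sample in batch]
    targets = np.array([sample[1] for sample in batch], dtype=np.int64)
    h, w = imgs[0].shape[:2]  # numpy arrays use (height, width) order
    out = np.zeros((len(imgs), 3, h, w), dtype=np.uint8)
    for i, img in enumerate(imgs):
        if img.ndim < 3:
            img = img[..., np.newaxis]  # grayscale: add a channel axis
        out[i] = np.transpose(img, (2, 0, 1))  # HWC -> CHW
    return out, targets

# Usage with four fake 32x32 RGB images (CIFAR-10 resolution)
batch = [(np.full((32, 32, 3), i, dtype=np.uint8), i) for i in range(4)]
x, y = collate_hwc_uint8(batch)
print(x.shape, y.dtype)  # (4, 3, 32, 32) int64
```

Converting the result with `torch.from_numpy` would give the same uint8 NCHW layout that the apex example normalizes later.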

I changed the model to EfficientNet, taken from https://www.kaggle.com/hmendonca/efficientnet-cifar-10-ignite

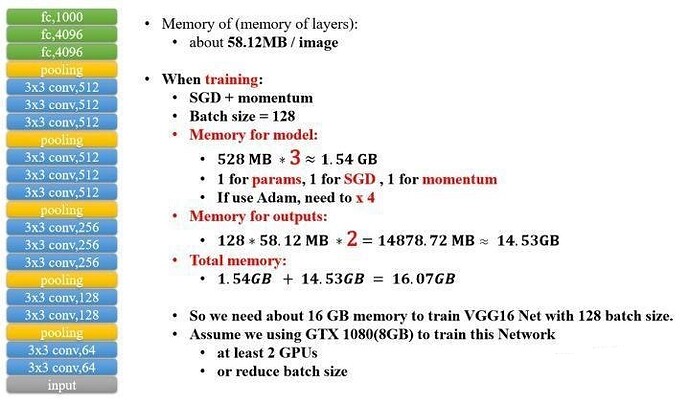

The batch size is 125 and the image size is 224. That doesn't seem excessive for 8 GPUs.

When I train, I get this error on all GPUs:

```
RuntimeError: CUDA out of memory. Tried to allocate 64.00 MiB (GPU 0; 10.92 GiB total capacity; 10.33 GiB already allocated; 43.50 MiB free; 43.60 MiB cached)
```

I believe the batch size should be divided among the 8 GPUs. If that is right, why are they running out of memory, and how can I fix it? I don't see the advantage of using apex if I am still getting this kind of error.
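Whether the batch is divided depends on how the script interprets `-b`/`--batch-size`. In `main_amp.py` the help text describes it as the mini-batch size per process (worth double-checking in your copy), which would mean each of the 8 processes loads 125 images of 224x224 onto its own GPU, i.e. 1,000 images per step in total. The helpers below (hypothetical names) show the two directions of that arithmetic: what global batch a per-process value implies, and what per-process value to pass if you want a given global batch.

```python
def global_batch_size(per_process_batch, world_size):
    # Each process loads its own per_process_batch samples per step.
    return per_process_batch * world_size

def per_process_batch_size(global_batch, world_size):
    # Split a desired global batch evenly; any remainder is dropped.
    return global_batch // world_size

print(global_batch_size(125, 8))       # 1000
print(per_process_batch_size(125, 8))  # 15
```

So, under that assumption, passing `--b 15` on 8 GPUs would give roughly the global batch of 125 that was intended.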

Thanks in advance

Which opt_level are you using in amp? If you aren't using amp, do you know the memory requirement for this particular setup?

Currently I am using opt_level O3.

I started with one GPU, without distributed training, and I could only train with:

- image size = 112 (half of 224)
- batch size = about 60 (about half of the 125 I use now)

But now I want to train with image size 224 and batch size 125, and it can't be that I'm unable to run that with 8 GPUs.
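A rough back-of-envelope check, assuming activation memory grows roughly linearly with batch size and with pixel count, and assuming the batch size is applied per process: doubling the image side quadruples the pixels, and going from about 60 to 125 images roughly doubles the batch, so each GPU would need about 8x the activation memory of a single-GPU run that already filled the card. The function name is hypothetical.

```python
def relative_activation_memory(img_size, batch, ref_img_size, ref_batch):
    # Assumes activation memory ~ batch * height * width (a rough approximation).
    pixels_ratio = (img_size / ref_img_size) ** 2
    return pixels_ratio * (batch / ref_batch)

ratio = relative_activation_memory(224, 125, 112, 60)
print(round(ratio, 2))  # 8.33
```

If the single-GPU run was on the same kind of 11 GB card, this estimate alone would explain an out-of-memory error on every GPU, regardless of how many GPUs are added.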

I have another server with two Tesla V100 GPUs with 32 GB each, and it is actually running right now. What seems weird is that I specified a batch size of 125, yet each epoch shows 200 iterations:

```
python -m torch.distributed.launch --nproc_per_node=2 main_amp_cifar10.py -a resnet50 --b 125 --workers 4 --opt-level O3 ./
```

```
Epoch: [2][33/200] Time 0.048 (0.050) Speed 5225.336 (5015.339) Loss 0.8833006620 (0.9562) Prec@1 69.200 (66.533) Prec@5 96.800 (96.921)
```
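The 200 iterations are consistent with a per-process batch size. Assuming the script shards the data with `torch.utils.data.distributed.DistributedSampler`, as the apex example does, each of the 2 processes gets half of CIFAR-10's 50,000 training images, and 25,000 / 125 = 200 batches. The helper name below is hypothetical; it mirrors the sampler's default behaviour of giving each rank `ceil(N / world_size)` samples and a `DataLoader` with `drop_last=False`.

```python
import math

def iters_per_epoch(dataset_len, world_size, per_process_batch):
    # DistributedSampler (default drop_last=False): ceil(N / world_size) samples per rank.
    samples_per_rank = math.ceil(dataset_len / world_size)
    # DataLoader with drop_last=False keeps the final partial batch.
    return math.ceil(samples_per_rank / per_process_batch)

print(iters_per_epoch(50_000, 2, 125))  # 200 (the two V100s)
print(iters_per_epoch(50_000, 8, 125))  # 50 (eight GPUs, same per-process batch)
```

If the batch were split across processes instead, the two-GPU run would show 400 iterations per epoch rather than 200.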

So, in conclusion:

- It doesn't run on 8 x GTX 1080 Ti (11 GB each).
- It runs on two Tesla V100-PCIE (32 GB each).

Lornatang

February 4, 2020, 4:30am


Hi, I happen to be using the EfficientNet model, so let me answer that question.

EfficientNet-B0 has 5.3M parameters: https://github.com/Lornatang/EfficientNet/blob/master/README.md
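For scale, a parameter count of 5.3M translates into very little GPU memory. The sketch below (hypothetical helper) assumes fp32 storage at 4 bytes per value; under O3 the weights would be fp16, so `bytes_per_value=2` would be closer. Even counting weights, gradients and one optimizer buffer such as SGD momentum, it stays in the tens of MiB, which suggests the out-of-memory error on an 11 GB card comes mainly from activations (batch size x image size) rather than from the parameters.

```python
def param_memory_mib(num_params, bytes_per_value=4, copies=1):
    # copies: 1 for weights only; add 1 for gradients and 1 per optimizer buffer.
    return num_params * bytes_per_value * copies / 2**20

print(round(param_memory_mib(5.3e6), 1))            # 20.2 (fp32 weights only)
print(round(param_memory_mib(5.3e6, copies=3), 1))  # 60.7 (weights + grads + momentum)
```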


Thanks, that's why I was using 8 GPUs, but I still wasn't able to run it.