When I search for CUDA programming, all the programming techniques are in C. I want to use Python and the PyTorch framework. Where can I find resources for that? Please help.

Could you describe your use case in more detail please?

In case you want to use a Python CUDA frontend, you might be able to hook it into PyTorch by writing a custom autograd.Function as described here.
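A minimal sketch of such a custom `autograd.Function` follows. The op itself (`ScaledSquare`, computing y = scale * x²) and the helper `scaled_square` are hypothetical. In practice `forward` and `backward` would launch kernels from whichever Python CUDA frontend you pick. Here they use plain PyTorch ops so the sketch runs on either CPU or GPU.

```python
import torch


class ScaledSquare(torch.autograd.Function):
    """Hypothetical example op: y = scale * x**2 with a hand-written backward.

    A real version would call custom CUDA kernels inside forward/backward;
    plain torch ops are used here so the sketch runs anywhere.
    """

    @staticmethod
    def forward(ctx, x, scale):
        # Save the tensors backward needs; plain Python values can live on ctx.
        ctx.save_for_backward(x)
        ctx.scale = scale
        return scale * x * x

    @staticmethod
    def backward(ctx, grad_output):
        (x,) = ctx.saved_tensors
        # d/dx (scale * x^2) = 2 * scale * x; `scale` is a float, so no grad.
        return grad_output * 2 * ctx.scale * x, None


def scaled_square(x, scale=3.0):
    # Custom Functions are invoked through .apply, not by instantiation.
    return ScaledSquare.apply(x, scale)


if __name__ == "__main__":
    device = "cuda" if torch.cuda.is_available() else "cpu"
    x = torch.tensor([1.0, 2.0], device=device, requires_grad=True)
    scaled_square(x).sum().backward()
    print(x.grad)  # expected values: 2 * 3.0 * x, i.e. 6 and 12
```

`torch.autograd.gradcheck` can be used to compare the hand-written `backward` against numerical gradients before you swap in real kernels.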

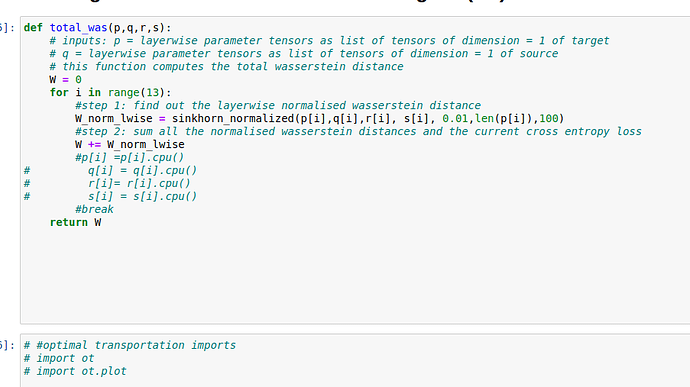

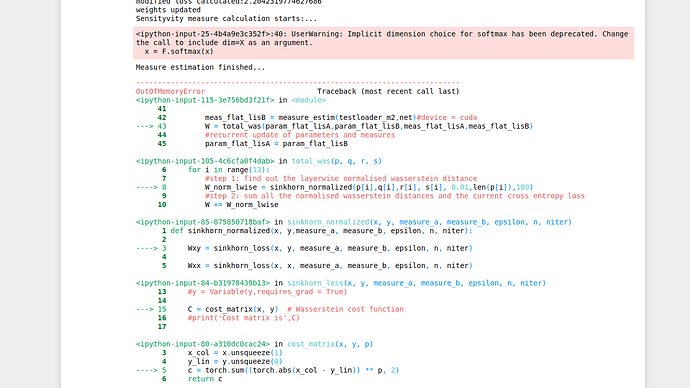

Thank you for the reply! I have a for loop that handles 18 lakh (1.8 million) float parameters. I need to know how to handle this many parameters at once so that I can avoid the "out of memory" error. The function shown in the screenshot is called inside a loop, and it handles a lot of parameters at a time. That is my issue: how do I manage the memory?
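As a rough sanity check on the numbers in this reply, the sketch below computes the raw storage needed for 18 lakh values. The helper `tensor_bytes` is hypothetical. In float32 the values take 7,200,000 bytes, about 6.9 MiB, which is small by GPU standards. If that matches your data, the out-of-memory error more likely comes from what else stays on the GPU across loop iterations, such as intermediate results or autograd history, than from the parameters themselves.

```python
import torch

NUM_PARAMS = 1_800_000  # 18 lakh, as stated in the question


def tensor_bytes(numel, dtype=torch.float32):
    # element_size() returns the number of bytes per element for this dtype.
    return numel * torch.empty((), dtype=dtype).element_size()


if __name__ == "__main__":
    for dtype in (torch.float32, torch.float16):
        n = tensor_bytes(NUM_PARAMS, dtype)
        print(dtype, n, "bytes,", round(n / 2**20, 2), "MiB")
```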

I’m unsure how the screenshot showing PyTorch code is related to the original question.

In any case, you might need to decrease the batch size or the number of iterations to save memory.
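One way to decrease the batch size without changing the overall result is to split a large input into chunks and process them one at a time. A minimal sketch, assuming the data starts on the CPU and no gradients are needed (hence `torch.no_grad()`). `heavy_function` is a hypothetical stand-in for the function in the screenshot, and `chunk_size` is a value you would tune for your GPU.

```python
import torch


def heavy_function(x):
    # Hypothetical stand-in for the per-batch function from the screenshot.
    return torch.sin(x) * 2.0


def process_in_chunks(data, chunk_size, device):
    """Apply heavy_function to `data` chunk by chunk instead of all at once.

    Only one chunk (and its result) lives on `device` at a time, so peak GPU
    memory grows with chunk_size rather than with the full size of `data`.
    """
    outputs = []
    with torch.no_grad():  # assumption: no gradients are needed here
        for chunk in torch.split(data, chunk_size):  # splits along dim 0
            out = heavy_function(chunk.to(device))
            outputs.append(out.cpu())  # move each result off the GPU right away
    return torch.cat(outputs)


if __name__ == "__main__":
    device = "cuda" if torch.cuda.is_available() else "cpu"
    data = torch.randn(1_800_000)  # 18 lakh float32 values, kept on the CPU
    result = process_in_chunks(data, chunk_size=100_000, device=device)
    print(result.shape)
```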

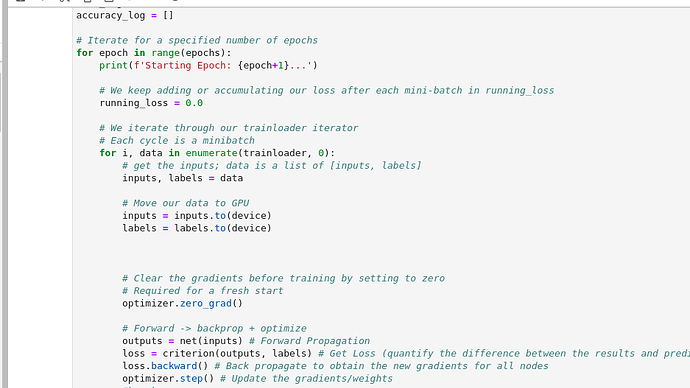

OK, let me explain. Look at the training loop shown: I move my inputs and labels to the GPU with `.to(device)`. Since the inputs and labels are needed only up to the loss calculation, I simply want to free the GPU memory allocated for them afterwards, so that the same memory can be reused for the next batch instead of running out.
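A minimal sketch of such a training loop, assuming a standard `model`/`criterion`/`optimizer` setup. The function name `train_one_epoch` and the toy data are hypothetical. Python frees a tensor once no name refers to it, and PyTorch's caching allocator then reuses that memory for the next batch, so no manual "erase" call is needed. Because the `for` statement rebinds `inputs` and `labels` on every iteration anyway, `del` mainly matters for `outputs` and `loss`, which would otherwise stay alive during the next forward pass. A more common source of growing memory is accumulating the `loss` tensor instead of `loss.item()`.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset


def train_one_epoch(model, loader, optimizer, criterion, device):
    model.train()
    running_loss = 0.0
    for inputs, labels in loader:
        inputs, labels = inputs.to(device), labels.to(device)

        optimizer.zero_grad(set_to_none=True)
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()

        # Accumulate a Python float, not the loss tensor: summing `loss`
        # itself would keep extending autograd history across iterations.
        running_loss += loss.item()

        # Drop this batch's GPU tensors so the caching allocator can reuse
        # their memory before the next forward pass allocates new ones.
        del inputs, labels, outputs, loss
    return running_loss / len(loader)


if __name__ == "__main__":
    device = "cuda" if torch.cuda.is_available() else "cpu"
    torch.manual_seed(0)
    # Toy data (hypothetical): 64 samples, 10 features, 3 classes.
    dataset = TensorDataset(torch.randn(64, 10), torch.randint(0, 3, (64,)))
    loader = DataLoader(dataset, batch_size=16)
    model = nn.Linear(10, 3).to(device)
    optimizer = torch.optim.SGD(model.parameters(), lr=0.1)
    avg = train_one_epoch(model, loader, optimizer, nn.CrossEntropyLoss(), device)
    print(f"average loss: {avg:.4f}")
```

`torch.cuda.empty_cache()` returns cached blocks to the driver, but per the PyTorch documentation it does not increase the memory available to PyTorch itself, so calling it inside the loop usually does not help with out-of-memory errors.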