for _, (product, target_reactants) in enumerate(tqdm(self.train_loader, desc="Epoch progress", unit=" batches", leave=False)):
    product, target_reactants = product.to(self.device, non_blocking=True), target_reactants.to(self.device, non_blocking=True)

    predicted_reactants = self(product, target_reactants, 0.5)
    target_reactants = target_reactants[1:]

    vocab_size = predicted_reactants.shape[-1]
    predicted_reactants = predicted_reactants.view(-1, vocab_size)
    target_reactants = target_reactants.view(-1)

    torch.cuda.nvtx.range_push("criterion")
    loss = self.criterion(predicted_reactants, target_reactants)
    torch.cuda.nvtx.range_pop()

    torch.cuda.nvtx.range_push("loss.detach()")
    accumlated_train_loss += loss.detach()
    torch.cuda.nvtx.range_pop()

    torch.cuda.nvtx.range_push("zero_grad")
    self.optimizer.zero_grad()
    torch.cuda.nvtx.range_pop()

    torch.cuda.nvtx.range_push("loss_backward")
    loss.backward()
    torch.cuda.nvtx.range_pop()

    torch.cuda.nvtx.range_push("clip_grad_norm")
    torch.nn.utils.clip_grad_norm_(self.parameters(), 0.1)
    torch.cuda.nvtx.range_pop()

    torch.cuda.nvtx.range_push("optimizer_step")
    self.optimizer.step()
    torch.cuda.nvtx.range_pop()
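
As an aside, the repeated `range_push`/`range_pop` pairs above could be wrapped in a small context manager, so that each range is closed even if the wrapped step raises. This is only a sketch: `nvtx_range` is a hypothetical helper name, not part of the code above, and I am assuming it should fall back to a no-op when PyTorch or CUDA is not available.

```python
from contextlib import contextmanager

try:
    import torch
    # Only emit NVTX markers when a CUDA device is actually usable.
    _NVTX_ENABLED = torch.cuda.is_available()
except ImportError:
    torch = None
    _NVTX_ENABLED = False


@contextmanager
def nvtx_range(name):
    """Open an NVTX range called `name` and close it on exit (hypothetical helper)."""
    if _NVTX_ENABLED:
        torch.cuda.nvtx.range_push(name)
    try:
        yield
    finally:
        # The pop runs even if the wrapped code raises, so ranges stay balanced.
        if _NVTX_ENABLED:
            torch.cuda.nvtx.range_pop()
```

With this, a step such as the backward pass would read `with nvtx_range("loss_backward"): loss.backward()`. It only changes how the ranges are written, not what the profiler records inside them.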

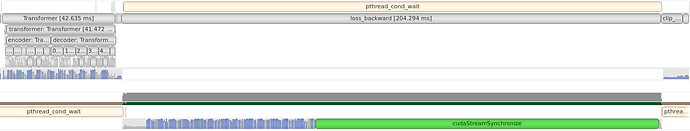

Why is there a synchronization in the loss_backward range in the profiler output? This shows up only once every 3-4 iterations.