Hello! So this is a bit of a weird one…

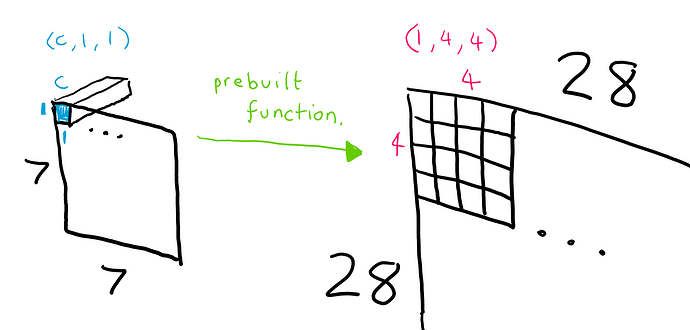

I have a (b, c, 7, 7) tensor. For each “pixel” in this tensor I want to pass its (c, 1, 1) channel vector through a pretrained decoder to produce a (1, 4, 4) image patch. In this way I intend to turn the (batch, channel, 7, 7) tensor into a (batch, 1, 28, 28) image. The problem is that I cannot figure out how to do this upscaling through my custom function (the pretrained decoder).

Perhaps the image above will help.

To be clear, I already have the trained function/decoder: I can feed the pixels into it one by one and get the resulting 4 by 4 patches. What I need is a way to assemble the resulting tensors back into the larger image shape (perhaps some sort of nested unfold). That is what I am having trouble with. If you could help out or simply point me in the right direction, I would be very grateful.
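
To make the target layout concrete, here is a minimal sketch of one possible way to do the reassembly, assuming PyTorch. The names `make_dummy_decoder` and `decode_and_tile` are hypothetical, and the `ConvTranspose2d` layer is only a stand-in for the real pretrained decoder; the sketch also assumes the decoder accepts a batch of (c, 1, 1) inputs and returns (1, 4, 4) patches.

```python
import torch
import torch.nn as nn


def make_dummy_decoder(c):
    # Hypothetical stand-in for the pretrained decoder:
    # maps (N, c, 1, 1) -> (N, 1, 4, 4).
    return nn.ConvTranspose2d(c, 1, kernel_size=4)


def decode_and_tile(x, decoder, patch=4):
    b, c, h, w = x.shape
    # Treat every spatial position as its own sample: (b*h*w, c, 1, 1).
    pixels = x.permute(0, 2, 3, 1).reshape(b * h * w, c, 1, 1)
    # Decode all pixels in one batched call: (b*h*w, 1, patch, patch).
    patches = decoder(pixels)
    # Restore the grid of patches: (b, h, w, patch, patch).
    patches = patches.reshape(b, h, w, patch, patch)
    # Interleave grid rows with patch rows and grid columns with patch
    # columns, then merge: (b, h, patch, w, patch) -> (b, 1, h*patch, w*patch).
    return patches.permute(0, 1, 3, 2, 4).reshape(b, 1, h * patch, w * patch)


decoder = make_dummy_decoder(8)
x = torch.randn(2, 8, 7, 7)
with torch.no_grad():
    out = decode_and_tile(x, decoder)
print(out.shape)  # torch.Size([2, 1, 28, 28])
```

With this layout, the patch decoded from pixel (i, j) ends up at rows 4i to 4i+3 and columns 4j to 4j+3 of the output. `torch.nn.functional.fold` with `kernel_size=4` and `stride=4` should produce the same tiling if the patches are first arranged as (b, 16, 49); the permute/reshape version just avoids that extra step.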

Thanks in advance!