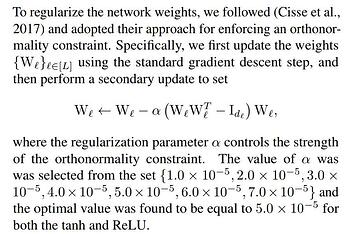

I am trying to implement the following update rule:

W_l is the l-th weight matrix. The architecture is a FFNN with 5 hidden layers with the following number of neurons from input layer X to output layer Yhat: 12-10-7-5-4-3-2. So there are 6 weight matrices for me.

I tried to implement this by doing the following in my training loop:

# Train the model

total_step = len(train_loader)

for epoch in range(num_epochs):

epoch_globalscope = epoch

if(epoch_globalscope in epochs_to_sample):

with torch.no_grad():

for x, y in test_loader:

x = x.to(device)

y = y.to(device)

outputs = model(x, sampleXTbool = True)

for i, (x, y) in enumerate(train_loader):

# Move tensors to the configured device

x = x.to(device)

y = y.to(device)

# Forward pass

outputs = model(x,sampleXTbool=False)

loss = criterion(outputs, y)

if(epoch % 200 == 0):

print("Epoch " + str(epoch) + " Batch " + str(i) + " loss " + str(loss.item()))

#determine W_l for each l

W_1 = list(model.parameters())[0] #weightmatrix W_1 between X and H1

W_2 = list(model.parameters())[2] #weightmatrix W_2 between H1 and H2

W_3 = list(model.parameters())[4] #weightmatrix W_3 between H2 and H3

W_4 = list(model.parameters())[6] #weightmatrix W_4 between H3 and H4

W_5 = list(model.parameters())[8] #weightmatrix W_5 between H4 and H5

W_6 = list(model.parameters())[10] #weightmatrix W_6 between H5 and Y_hat

# Backward and optimize

optimizer.zero_grad()

loss.backward()

W_1_update = W_1 - alpha*torch.mm(torch.mm(W_1,torch.transpose(W_1,0,1))-torch.eye(len(W_1)),W_1)

model.parameters()[0]=W_1_update

W_2_update = W_2 - alpha*torch.mm(torch.mm(W_2,torch.transpose(W_2,0,1))-torch.eye(len(W_2)),W_2)

model.parameters()[2]=W_2_update

W_3_update = W_3 - alpha*torch.mm(torch.mm(W_3,torch.transpose(W_3,0,1))-torch.eye(len(W_3)),W_3)

model.parameters()[4]=W_3_update

W_4_update = W_4 - alpha*torch.mm(torch.mm(W_4,torch.transpose(W_4,0,1))-torch.eye(len(W_4)),W_4)

model.parameters()[6]=W_4_update

W_5_update = W_5 - alpha*torch.mm(torch.mm(W_5,torch.transpose(W_5,0,1))-torch.eye(len(W_5)),W_5)

model.parameters()[8]=W_5_update

W_6_update = W_6 - alpha*torch.mm(torch.mm(W_6,torch.transpose(W_6,0,1))-torch.eye(len(W_6)),W_6)

model.parameters()[8]=W_6_update

optimizer.step()

I have two problems:

- the W_l_update lines of code cause the following error: “expected device cuda:0 but got device cpu”

When I fix this by just training on cpu, I get the following error: - the assignment of W_l_update tot model.parameters()[index] give the error: “‘generator’ object does not support item assignment”

How to go about fixing these 2 problems?