Hi,

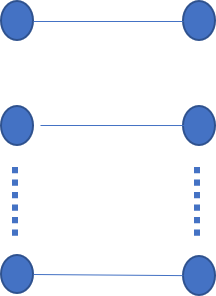

I want to custom a layer with the following structure:

Here is the code for the custom layer:

```python
import torch
import torch.nn as nn


class MaskedLinear(nn.Module):
    def __init__(self, in_dim, out_dim):
        super(MaskedLinear, self).__init__()
        self.weight = nn.Parameter(torch.randn([in_dim, out_dim]))
        # print(f'0 weight is {self.weight}')
        self.bias = nn.Parameter(torch.randn([1, out_dim]))
        # print(f'0 bias is {self.bias}')
        self.mask = torch.eye(out_dim)  # create mask
        # print(f'mask is {self.mask}')

    def forward(self, input):
        # Apply the mask to the weight before the matrix multiply
        self.weight = nn.Parameter(self.weight * self.mask)
        # print(f'1 weight is {self.weight}')
        return torch.matmul(input, self.weight) + self.bias
```

I have a problem: when I check the weights after backpropagation, they are not updated and always keep their initial values. I don't know why. Any suggestions?
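A likely cause is the line `self.weight = nn.Parameter(self.weight * self.mask)` in `forward`. It wraps the masked product in a brand-new `nn.Parameter` (a new leaf tensor) on every call and registers that under the name `weight`. An optimizer built earlier from `layer.parameters()` still holds the *original* `Parameter` object. Gradients flow to the new leaf, the original one never receives a `.grad`, and `optimizer.step()` has nothing to update. A minimal sketch of one way around this, assuming a plain diagonal mask as in the post: keep `self.weight` fixed and compute the masked weight as a temporary tensor inside `forward`. The mask is stored with `register_buffer`, so it follows `.to(device)` and `state_dict()` but is not trained. Using `torch.eye(in_dim, out_dim)` instead of `torch.eye(out_dim)` is an assumption that lets the mask match a non-square weight. The SGD optimizer, learning rate, and random data in the usage part are illustrative only.

```python
import torch
import torch.nn as nn


class MaskedLinear(nn.Module):
    """Linear layer whose weight is multiplied elementwise by a fixed mask."""

    def __init__(self, in_dim, out_dim):
        super().__init__()
        self.weight = nn.Parameter(torch.randn(in_dim, out_dim))
        self.bias = nn.Parameter(torch.randn(1, out_dim))
        # Assumption: diagonal mask; torch.eye(in_dim, out_dim) matches the
        # weight's shape even when in_dim != out_dim.
        # A buffer is saved in state_dict() and moved by .to(device),
        # but it is not returned by parameters(), so it is never trained.
        self.register_buffer("mask", torch.eye(in_dim, out_dim))

    def forward(self, x):
        # Temporary masked tensor; self.weight itself is never reassigned,
        # so the optimizer keeps updating the same Parameter object.
        masked_weight = self.weight * self.mask
        return x @ masked_weight + self.bias


# Usage: one SGD step on random data (illustrative values).
torch.manual_seed(0)
layer = MaskedLinear(4, 4)
optimizer = torch.optim.SGD(layer.parameters(), lr=0.1)
before = layer.weight.detach().clone()

x, target = torch.randn(8, 4), torch.randn(8, 4)
loss = nn.functional.mse_loss(layer(x), target)
optimizer.zero_grad()
loss.backward()
optimizer.step()

after = layer.weight.detach()
```

Because the gradient of `self.weight * self.mask` with respect to `self.weight` is the upstream gradient multiplied by the mask, only the unmasked (diagonal) entries receive non-zero gradients. With plain SGD (no momentum or weight decay), the masked entries therefore stay at their initial values while the diagonal entries are trained.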