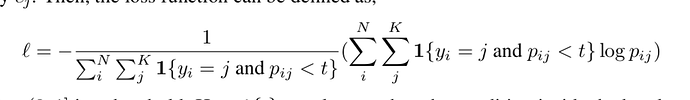

I am trying to implement a customized loss function in pytorch based on the formula below.

def TopKLoss(pred, target, top_k=0.7):

pred = F.log_softmax(pred)

n = pred.size(0)

c = pred.size(1)

out_size = (n,) + pred.size()[2:]

if target.size()[1:] != pred.size()[2:]:

raise ValueError('Expected target size {}, got {}'.format(

out_size, target.size()))

pred = pred.contiguous()

target = target.contiguous()

for batch_no in range(n):

for class_no in range(c):

print(pred[batch_no][class_no])

print(target[batch_no]==class_no)

pixel_sum = (torch.numel(pred[batch_no][class_no]<top_k and target[batch_no]==class_no))

print(pixel_sum)

topk_loss+= pixel_sum

return topk_loss

This is what I have until now, but it throws an error when I try to sum over the elements

which respect the condition.

RuntimeError: bool value of Tensor with more than one value is ambiguous

Can somebody help with this?