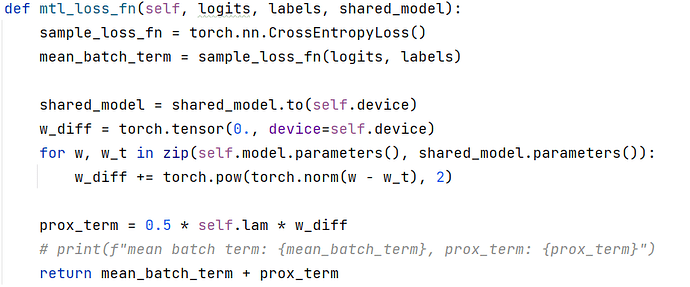

Hi. I implement a mean-regularized multi-task learning framework using a loss function like this:

While adding no noise, it works well: Average accuracy of clients increases and then decreases as lambda(the personalization degree) starts to increase from zero.

When I make clients do DP-SGD using opacus, this rise-then-fall pattern doesn’t exist. The average accuracy always stays the same as if all clients train their models completely locally, which means the prox term in loss function somehow disappears. Anyone knows if the loss function is really the problem here?

BTW, when using opacus in FedAvg setting without personalization, the code works well. I will really appreciate any thougnts.