Hi,

The problem is that when I retrieve the stored images, along with their annotations, from my custom data loader, I am unable to draw a bounding box on them. The images are stored as tensors and then converted back to NumPy arrays. I am using simple custom transforms. Below is how the image is read and which custom transforms are applied to it in my custom dataset class.

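```python
import os

import cv2
from torchvision import transforms

...

# Read image from the file (OpenCV loads BGR, so convert to RGB)
image = cv2.cvtColor(cv2.imread(os.path.join(self.__root_dir, image_desc["file_name"])),
                     cv2.COLOR_BGR2RGB)

...

CUSTOM_TRANSFORMS = transforms.Compose([transforms.ToTensor()])
```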
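For reference, `transforms.ToTensor()` turns an H×W×C `uint8` array into a C×H×W `float32` tensor scaled to [0, 1]. The NumPy-only sketch below approximates that conversion so the resulting shape and value range can be inspected without torchvision. The helper name `to_tensor_like` and the dummy 600×450 image are assumptions for illustration; this is not torchvision's implementation.

```python
import numpy as np

def to_tensor_like(image_hwc: np.ndarray) -> np.ndarray:
    """Approximate ToTensor for a uint8 HWC array (hypothetical helper)."""
    chw = image_hwc.transpose(2, 0, 1)                        # axes H, W, C -> C, H, W
    # C-contiguous float32 copy, then scale uint8 [0, 255] -> float [0, 1]
    return np.ascontiguousarray(chw, dtype=np.float32) / 255.0

# Dummy 600 x 450 RGB image with one non-zero pixel
image = np.zeros((600, 450, 3), dtype=np.uint8)
image[0, 0] = (255, 0, 51)

tensor_like = to_tensor_like(image)
print(tensor_like.shape, tensor_like.dtype, tensor_like[:, 0, 0])
```

The channel axis now comes first, and pixel values are floats rather than 0-255 integers, which matters for anything that later assumes a regular OpenCV image.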

My custom data loader works fine, and so does my custom dataset. Below is the test code for the data loader and its output (batch size is 1 for simplicity).

Code

```python
for i, (images, annotations) in enumerate(train_dataloader):
    print(type(image[0]), annotations[0])
```

Output

```
<class 'numpy.ndarray'> {'image_id': tensor([0]), 'boxes': tensor([[140., 188., 306., 293.]]), 'area': tensor([17430.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
<class 'numpy.ndarray'> {'image_id': tensor([1]), 'boxes': tensor([[216., 188., 381., 358.]]), 'area': tensor([28050.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
<class 'numpy.ndarray'> {'image_id': tensor([2]), 'boxes': tensor([[ 78., 257., 330., 433.]]), 'area': tensor([44352.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
<class 'numpy.ndarray'> {'image_id': tensor([3]), 'boxes': tensor([[ 59., 113., 325., 542.]]), 'area': tensor([114114.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
<class 'numpy.ndarray'> {'image_id': tensor([4]), 'boxes': tensor([[113., 153., 439., 382.]]), 'area': tensor([74654.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
<class 'numpy.ndarray'> {'image_id': tensor([5]), 'boxes': tensor([[144., 78., 487., 342.]]), 'area': tensor([90552.]), 'iscrowd': tensor([0]), 'labels': tensor([1])}
```

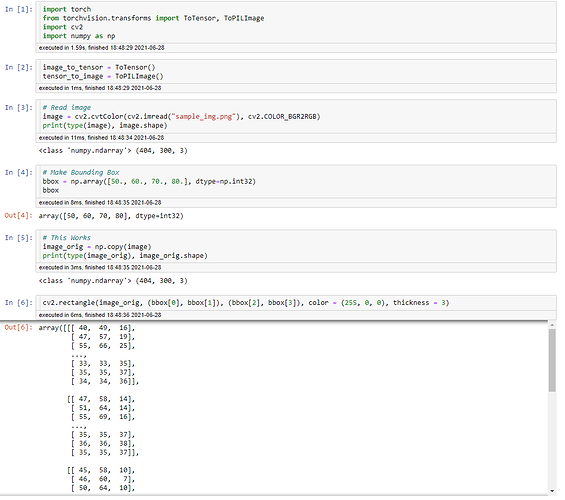

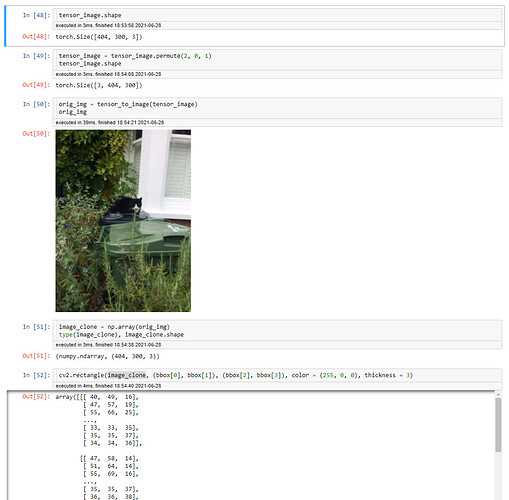
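The `area` values in the loader output are consistent with boxes stored as `[x_min, y_min, x_max, y_max]` in pixels, i.e. two opposite corners, which is what `cv2.rectangle` takes as its `pt1` and `pt2` (x, y) points. A small check using only the numbers copied from that output:

```python
# Boxes and areas copied from the data loader output above
boxes = [
    (140, 188, 306, 293),
    (216, 188, 381, 358),
    (78, 257, 330, 433),
    (59, 113, 325, 542),
    (113, 153, 439, 382),
    (144, 78, 487, 342),
]
areas = [17430, 28050, 44352, 114114, 74654, 90552]

for (x_min, y_min, x_max, y_max), area in zip(boxes, areas):
    # width * height of a corner-format box
    computed = (x_max - x_min) * (y_max - y_min)
    print(computed, area, computed == area)
```

All six boxes match their stored areas, so the box coordinates themselves are unlikely to be the problem.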

The code below reproduces what is happening in my case.

Code

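```python
import cv2
import matplotlib.pyplot as plt
import numpy as np
from torchvision import transforms

CUSTOM_TRANSFORMS = transforms.Compose([transforms.ToTensor()])  # same transform as in the dataset

# Read image (OpenCV loads BGR, so convert to RGB)
image = cv2.cvtColor(cv2.imread("/content/dataset/train/img_0.jpg"), cv2.COLOR_BGR2RGB)
print(type(image))

# Make bounding box [x_min, y_min, x_max, y_max]
bbox = np.array([140., 188., 306., 293.], dtype=np.int32)

# This works
image_orig = np.copy(image)
print(type(image_orig), image_orig.shape)
cv2.rectangle(image_orig, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color=(255, 0, 0), thickness=3)
```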

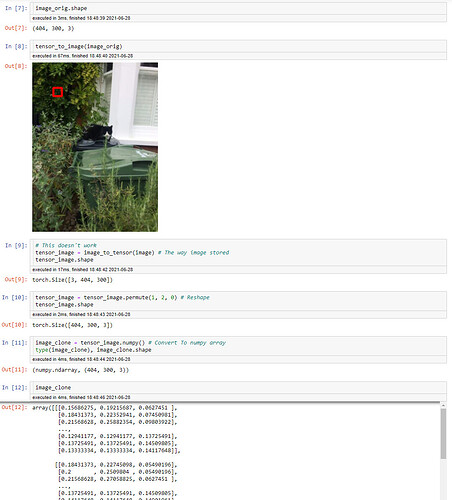

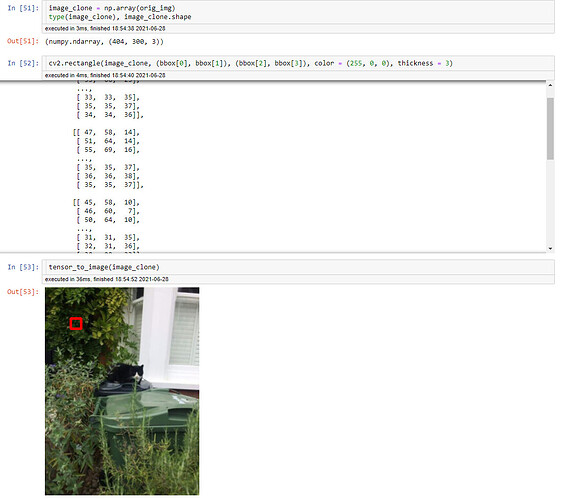

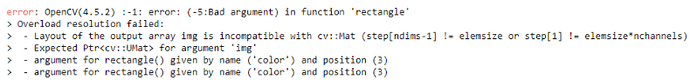

```python
# This doesn't work
tensor_image = CUSTOM_TRANSFORMS(image)       # the way the image is stored (CHW float32 tensor)
tensor_image = tensor_image.permute(1, 2, 0)  # reorder axes CHW -> HWC
image_clone = tensor_image.numpy()            # convert to a NumPy array
print(type(image_clone), image_clone.shape)
```

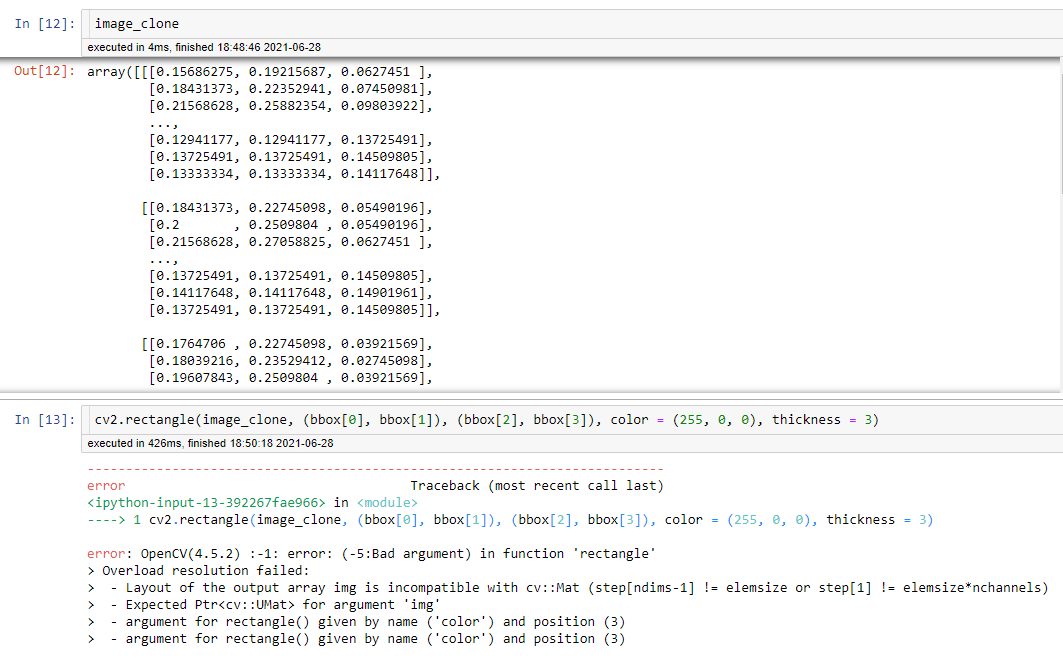

```python
cv2.rectangle(image_clone, (bbox[0], bbox[1]), (bbox[2], bbox[3]), color=(255, 0, 0), thickness=3)
```
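One difference between `image_orig` and `image_clone` that `type()` and `.shape` do not reveal is memory layout. `permute` only reorders strides and `.numpy()` shares memory with the tensor, so `image_clone` is a non-C-contiguous view. OpenCV's drawing functions operate on an array they can map onto a `cv::Mat`; as far as I know, depending on the OpenCV version, such a view is either rejected with a layout error or drawn onto a temporary copy, so the rectangle never reaches `image_clone`. This is offered as a hypothesis, not a confirmed diagnosis. The NumPy-only sketch below uses `transpose` as a stand-in for `permute` on a dummy zero-filled array and shows how to inspect the layout and make a compact copy.

```python
import numpy as np

# Dummy CHW float32 array standing in for the ToTensor output (3 x 600 x 450)
chw = np.zeros((3, 600, 450), dtype=np.float32)

# transpose(1, 2, 0) is the NumPy analogue of tensor.permute(1, 2, 0):
# it returns a view with reordered strides; no data is copied
hwc_view = chw.transpose(1, 2, 0)
print(hwc_view.shape, hwc_view.strides, hwc_view.flags["C_CONTIGUOUS"])

# C-contiguous copy with the layout of a regular H x W x C image
hwc_compact = np.ascontiguousarray(hwc_view)
print(hwc_compact.shape, hwc_compact.strides, hwc_compact.flags["C_CONTIGUOUS"])
```

Both arrays report the same shape, but only the copy has the row-major strides of an ordinary image. With PyTorch tensors, the analogous step would be `tensor_image.permute(1, 2, 0).contiguous().numpy()`.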

```python
# This works for both image_orig and image_clone
for i in range(bbox[1], bbox[3]):      # rows (y)
    for j in range(bbox[0], bbox[2]):  # columns (x)
        image_orig[i, j, 1] = 255
        image_clone[i, j, 1] = 255
```
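The nested loop sets channel 1 for every pixel inside the box. NumPy slicing does the same in a single assignment, and because it writes through the array's own strides it behaves the same on contiguous and non-contiguous arrays, consistent with the loop working on both images. A sketch on dummy arrays; the `overlay_green` helper is hypothetical, and it uses `1.0` instead of `255` for the float image to stay within the [0, 1] range that matplotlib expects for floats.

```python
import numpy as np

def overlay_green(image: np.ndarray, bbox, value) -> None:
    """Set channel 1 inside the box in place, like the nested loop (hypothetical helper)."""
    x_min, y_min, x_max, y_max = (int(v) for v in bbox)
    # rows are y (i in the loop), columns are x (j in the loop); end bounds exclusive like range()
    image[y_min:y_max, x_min:x_max, 1] = value

bbox = np.array([140., 188., 306., 293.], dtype=np.int32)

# uint8 image, like image_orig
image_u8 = np.zeros((600, 450, 3), dtype=np.uint8)
overlay_green(image_u8, bbox, 255)

# Non-contiguous float view, like image_clone (transpose stands in for permute)
image_view = np.zeros((3, 600, 450), dtype=np.float32).transpose(1, 2, 0)
overlay_green(image_view, bbox, 1.0)
```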

# Plot

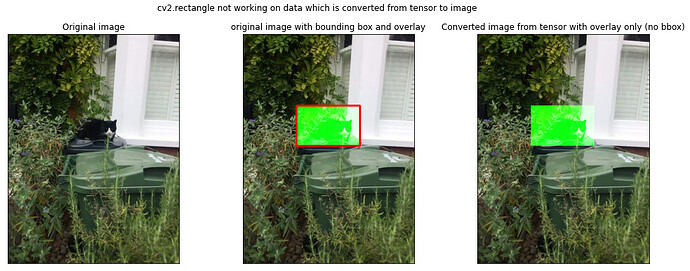

fig = plt.figure(figsize=(18, 6), facecolor="white")

plt.subplot(131)

plt.title("Original image")

plt.xticks([])

plt.yticks([])

plt.imshow(image)

plt.subplot(132)

plt.title("original image with bounding box and overlay")

plt.xticks([])

plt.yticks([])

plt.imshow(image_orig)

plt.subplot(133)

plt.title("Converted image from tensor with overlay only (no bbox)")

plt.xticks([])

plt.yticks([])

plt.imshow(image_clone)

plt.suptitle("cv2.rectangle not working on data which is converted from tensor to image")

plt.subplots_adjust(wspace=0)

plt.show()

Output

```
Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers).
<class 'numpy.ndarray'>
<class 'numpy.ndarray'> (600, 450, 3)
<class 'numpy.ndarray'> (600, 450, 3)
```
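The clipping warning is consistent with `image_clone` being `float32` (as produced by `ToTensor`), for which matplotlib expects values in [0, 1], while the loop writes 255 into it. A sketch of mapping such a float image back to a C-contiguous `uint8` image in [0, 255], where the `(255, 0, 0)` color used for `image_orig` is in range again. The `to_uint8` name is hypothetical, and clip-then-round is one reasonable choice rather than the only one.

```python
import numpy as np

def to_uint8(image_float: np.ndarray) -> np.ndarray:
    """Map a float image in [0, 1] to a C-contiguous uint8 image in [0, 255] (hypothetical helper)."""
    clipped = np.clip(image_float, 0.0, 1.0)   # out-of-range values (e.g. 255) become 1.0
    scaled = np.rint(clipped * 255.0)          # round to the nearest integer level
    return np.ascontiguousarray(scaled.astype(np.uint8))

# Dummy non-contiguous HWC float image standing in for image_clone
image_float = np.zeros((3, 600, 450), dtype=np.float32).transpose(1, 2, 0)
image_float[10, 20] = (1.0, 0.5, 0.0)
image_float[11, 20, 1] = 255.0                 # like the value written by the loop

image_u8 = to_uint8(image_float)
print(image_u8.dtype, image_u8.flags["C_CONTIGUOUS"], image_u8[10, 20], image_u8[11, 20])
```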

Please help me understand what I am doing wrong here.

Regards,

Sunny.