Hello! I am a bit confused about using torch.optim.lr_scheduler.CyclicLR. I see that by default it has 2000 steps up and 2000 down. If I understand it right, this means that with the built-in defaults I need 4000 iterations in my code. So if I have more or fewer than that, I have to adjust the numbers by hand so that steps up + steps down = number of iterations, right? Also, what happens if I keep the defaults but have more than 4000 iterations: do I get an error, or is the rest of the training run with the final value of the LR, i.e. the smallest one? Finally, I know that in the paper that introduced cyclical LR, after the down step the LR went even further down, by a few orders of magnitude, for something like 10% of the total number of iterations. How can I set this? I see no parameter for this percentage. Thank you!

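You will not get an error if steps up + steps down doesn't equal the number of iterations. After one full cycle (step_size_up + step_size_down iterations), CyclicLR simply starts a new one, so the LR climbs back toward max_lr instead of staying at the smallest value. The paper suggests setting the step size to 2 to 10 times the number of iterations in an epoch (e.g. step_size = 2 × iterations per epoch) to get good results. As for your second question: if you use mode='triangular2', the amplitude is halved after every cycle. You can also use mode='exp_range', which multiplies the amplitude by gamma**iteration, so it shrinks a little at every iteration.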
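A minimal sketch, assuming a recent PyTorch version (it uses `get_last_lr()`), that records the LR produced by `CyclicLR` with the default 2000/2000 step sizes over more than one cycle. The dummy parameter, the SGD optimizer, and the `base_lr`/`max_lr` values are placeholders chosen for illustration; `lr_trace` is a hypothetical helper, not part of PyTorch.

```python
import torch
from torch.optim import SGD
from torch.optim.lr_scheduler import CyclicLR


def lr_trace(mode, num_iters, step_size_up=2000, step_size_down=None, gamma=1.0):
    """Hypothetical helper: record the LR CyclicLR uses at each of num_iters iterations."""
    # A single dummy parameter is enough to drive an optimizer and a scheduler.
    param = torch.nn.Parameter(torch.zeros(1))
    # CyclicLR cycles momentum by default, so the optimizer needs a momentum setting.
    optimizer = SGD([param], lr=0.1, momentum=0.9)
    scheduler = CyclicLR(
        optimizer,
        base_lr=0.001,  # illustrative values only
        max_lr=0.1,
        step_size_up=step_size_up,
        step_size_down=step_size_down,  # None means "same as step_size_up"
        mode=mode,
        gamma=gamma,  # only used by mode="exp_range"
    )
    lrs = []
    for _ in range(num_iters):
        lrs.append(scheduler.get_last_lr()[0])
        optimizer.step()  # a real loop would compute a loss and call backward() first
        scheduler.step()  # CyclicLR is stepped once per batch, not once per epoch
    return lrs
```

Running `lr_trace("triangular", 6001)` should give an LR of about 0.001 at iterations 0 and 4000 and about 0.1 at iterations 2000 and 6000: no error is raised, and the second cycle repeats the first. With `"triangular2"`, the peak at iteration 6000 is only about 0.0505, because half of the first cycle's amplitude is added to `base_lr`. To fit exactly one cycle into N iterations, one option is `step_size_up=N // 2, step_size_down=N - N // 2`.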

You can read more about this here.

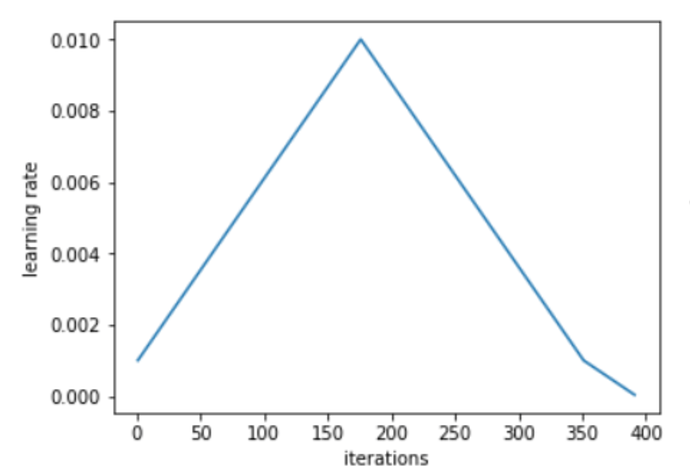

Thank you! For the second question, I was actually wondering if I can do something like this:

So after the “normal” cycle is over, the LR gets lower by a few orders of magnitude for a few more iterations.
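One way to get a schedule like this in PyTorch is to write the LR curve by hand and wrap it in `torch.optim.lr_scheduler.LambdaLR`, which multiplies the optimizer's initial LR by whatever the function returns. This is a minimal sketch under several assumptions: linear ramps in every phase, an equal number of iterations up and down, the last 10% of iterations spent decaying from `base_lr` to a hypothetical `min_lr` three orders of magnitude lower, and illustrative LR values. `one_cycle_lr` and `annihilate_frac` are hypothetical names, not PyTorch API, and momentum is left fixed.

```python
import torch
from torch.optim import SGD
from torch.optim.lr_scheduler import LambdaLR


def one_cycle_lr(step, total_iters, base_lr, max_lr, min_lr, annihilate_frac=0.1):
    """Hypothetical helper: the LR at `step` for one cycle followed by a decay tail."""
    cycle_iters = round(total_iters * (1 - annihilate_frac))  # iterations in the up/down cycle
    half = cycle_iters // 2
    if step < half:  # phase 1: linear ramp base_lr -> max_lr
        return base_lr + (max_lr - base_lr) * step / half
    if step < cycle_iters:  # phase 2: linear ramp max_lr -> base_lr
        return max_lr - (max_lr - base_lr) * (step - half) / (cycle_iters - half)
    # phase 3: linear decay base_lr -> min_lr, reaching min_lr at the last iteration
    tail = max(total_iters - 1 - cycle_iters, 1)
    frac = min((step - cycle_iters) / tail, 1.0)  # clamp so extra steps stay at min_lr
    return base_lr - (base_lr - min_lr) * frac


total_iters = 1000
base_lr, max_lr, min_lr = 1e-3, 1e-1, 1e-6  # illustrative values only

param = torch.nn.Parameter(torch.zeros(1))  # stand-in for model.parameters()
optimizer = SGD([param], lr=max_lr)
# LambdaLR computes lr = initial_lr * factor, so divide by the optimizer's lr (max_lr).
scheduler = LambdaLR(
    optimizer,
    lr_lambda=lambda step: one_cycle_lr(step, total_iters, base_lr, max_lr, min_lr) / max_lr,
)

lrs = []
for _ in range(total_iters):
    lrs.append(scheduler.get_last_lr()[0])
    optimizer.step()  # loss.backward() would come before this in real training
    scheduler.step()  # step once per batch
```

Because the curve is plain Python, the linear ramps can be swapped for cosine ones, or the tail length changed through `annihilate_frac`, without touching the training loop.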

What happens after it gets lowered? Does it start a new cycle?

No, it stops. It's the one cycle policy from Leslie Smith's paper. The plot shows the evolution of the LR over the whole training run.

I'm sorry, but I don't know how to implement this in PyTorch.
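For reference, recent PyTorch versions ship `torch.optim.lr_scheduler.OneCycleLR`, which implements the one cycle policy directly. With `three_phase=True` it warms up from `max_lr / div_factor` to `max_lr`, comes back down to `max_lr / div_factor` over the same number of steps, and then anneals to `max_lr / (div_factor * final_div_factor)` for the remaining steps; `pct_start` sets the length of the warm-up phase. The sketch below assumes a PyTorch version that has the `three_phase` argument. The LR values, the 1000-step budget, and `pct_start=0.45` (which leaves roughly the last 10% of steps for the final decay) are illustrative choices, not recommendations. By default it also cycles momentum inversely to the LR, which is why the optimizer is created with momentum.

```python
import torch
from torch.optim import SGD
from torch.optim.lr_scheduler import OneCycleLR

total_steps = 1000  # e.g. epochs * batches per epoch

param = torch.nn.Parameter(torch.zeros(1))  # stand-in for model.parameters()
optimizer = SGD([param], lr=0.1, momentum=0.9)  # the lr here is overwritten by OneCycleLR
scheduler = OneCycleLR(
    optimizer,
    max_lr=0.1,
    total_steps=total_steps,
    pct_start=0.45,           # phase 1 ends around step 450, phase 2 around step 900
    anneal_strategy="linear",
    div_factor=100.0,         # starting LR = 0.1 / 100 = 1e-3
    final_div_factor=1000.0,  # final LR = 1e-3 / 1000 = 1e-6
    three_phase=True,         # add the separate annihilation phase at the end
)

lrs = []
for _ in range(total_steps):
    lrs.append(scheduler.get_last_lr()[0])
    optimizer.step()  # loss.backward() would come before this in real training
    scheduler.step()  # once per batch; stepping more than total_steps times raises an error
```

Unlike CyclicLR, this scheduler does not restart: it is built for exactly `total_steps` steps, which matches the "it stops" behaviour described above.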