Hello. I have an image dataset of ECG signals with 6 classes, but the classes are imbalanced. I want to use data augmentation on the dataset to balance the classes. ECG augmentations have to make sense for the signal to be useful, and I don't think cropping, rotating, etc. would help, so I'm using scaling and translation; those two techniques are my goal. From reading the PyTorch docs, I learned that `transforms.RandomAffine` has parameters (`scale` and `translate`) that perform scaling and translation.

I apply the transforms like this:

```python
import os
import random

from torchvision import transforms as T, datasets

# Define the data augmentation transformations
# (CFG is my config object; CFG.img_size is the target image size)
train_transform = T.Compose([
    T.RandomApply([T.RandomAffine(degrees=0, translate=(0.1, 0), scale=(0.9, 1.1))], p=0.5),
    T.Resize(size=(CFG.img_size, CFG.img_size)),
    # Grayscale on the PIL image, before ToTensor(): RGB or RGBA input both become one channel
    T.Grayscale(1),
    # Convert PIL (height, width, channel) to a float tensor (channel, height, width) in [0, 1],
    # the PyTorch convention
    T.ToTensor(),
])

# Define the validation and test transformations
# (no random augmentation here, so evaluation is deterministic)
validate_transform = T.Compose([
    T.Resize(size=(CFG.img_size, CFG.img_size)),
    T.Grayscale(1),
    T.ToTensor(),
])

test_transform = T.Compose([
    T.Resize(size=(CFG.img_size, CFG.img_size)),
    T.Grayscale(1),
    T.ToTensor(),
])
```

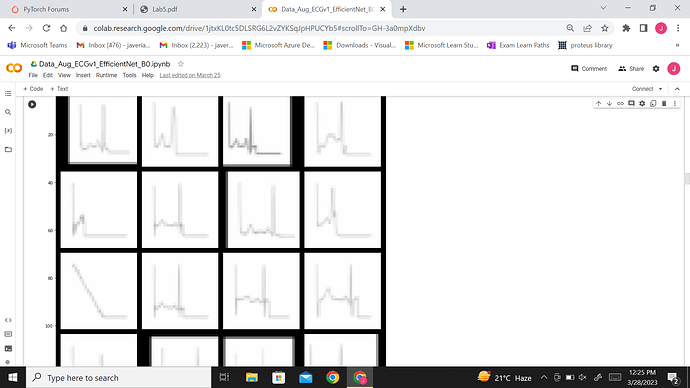

But my images looked like this:

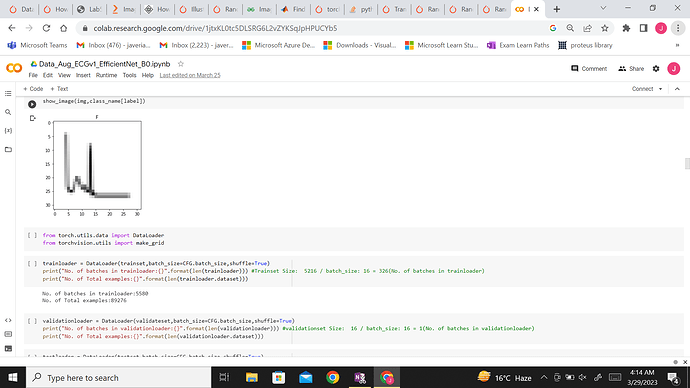

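I displayed them with this code:

```python
from torchvision.utils import make_grid

dataiter = iter(trainloader)
images, labels = next(dataiter)
out = make_grid(images, nrow=4)
# class_names: list mapping label index -> class name (e.g. the ImageFolder dataset's .classes)
show_grid(out, title=[class_names[int(x)] for x in labels])
```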

Now my questions: how can I fix this, and why is it happening? Also, as I said, I need balanced classes, so how can I make sure the classes actually end up balanced? I have roughly 9000 images in train, 1000 in validation and 4000 in test, and I want to see the dataset grow as translated or scaled copies are added to balance the classes.
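One way to check balance before and after augmenting is to count the files in each class folder; the number of augmented copies each class needs is then just the target count minus the current count. A minimal sketch, assuming an ImageFolder-style layout (`root/<class_name>/<image files>`); `count_per_class` and `images_to_generate` are hypothetical helper names, and the demo builds a tiny fake layout in a temporary directory.

```python
import os
import tempfile
from collections import Counter

IMAGE_EXTS = (".png", ".jpg", ".jpeg")


def count_per_class(root):
    """Count image files in each class sub-folder of an ImageFolder-style directory."""
    counts = Counter()
    for class_name in sorted(os.listdir(root)):
        class_dir = os.path.join(root, class_name)
        if os.path.isdir(class_dir):
            counts[class_name] = sum(
                1 for f in os.listdir(class_dir) if f.lower().endswith(IMAGE_EXTS)
            )
    return counts


def images_to_generate(counts, target=None):
    """Augmented images each class needs to reach `target` (default: size of the largest class)."""
    target = max(counts.values()) if target is None else target
    return {name: max(0, target - n) for name, n in counts.items()}


# Tiny fake dataset: class_a has 5 images, class_b has 2
root = tempfile.mkdtemp()
for name, n in [("class_a", 5), ("class_b", 2)]:
    os.makedirs(os.path.join(root, name))
    for i in range(n):
        open(os.path.join(root, name, f"{i}.png"), "wb").close()

counts = count_per_class(root)
print(dict(counts))                # {'class_a': 5, 'class_b': 2}
print(images_to_generate(counts))  # {'class_a': 0, 'class_b': 3}
```

A different technique, `torch.utils.data.WeightedRandomSampler`, balances what the model sees during training by oversampling rare classes instead of writing new files to disk.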

I tried the code chunk below, but I get an error, which I think is because PIL needs an RGB image. I have to work with grayscale images throughout my application.

```python
import os
import random

from PIL import Image

# Define the number of images you want per class
num_images_per_class = 2200

# Paths used for loading the dataset
train_path = '/content/train'
validate_path = '/content/val'
test_path = '/content/ECG_Image_data/test'

# Loop through the train, val, and test folders
for folder_path in [train_path, validate_path, test_path]:
    # Loop through each sub-folder (class) in the current folder
    for class_folder in os.listdir(folder_path):
        class_path = os.path.join(folder_path, class_folder)
        # Count the number of images in the current class
        num_images = len(os.listdir(class_path))
        # Calculate the number of images to generate
        num_images_to_generate = num_images_per_class - num_images
        # Generate new images by applying the transform to existing images
        for i in range(num_images_to_generate):
            # Choose a random image from the class folder
            image_path = os.path.join(class_path, random.choice(os.listdir(class_path)))
            # Load the image
            image = Image.open(image_path)
            # Apply the transform
            # (train_transform contains ToTensor(), so the result is a torch.Tensor, not a PIL Image)
            transformed_image = train_transform(image)
            # Save the transformed image with a new name
            new_image_name = f"{class_folder}transformed{i}.png"
            transformed_image.save(os.path.join(class_path, new_image_name))
```

Please help me solve this; I can't fix it.