When I iterate over my dataset with torch.utils.data.DataLoader, the loop below runs indefinitely without printing anything:

    try:
        for i, (images, targets) in enumerate(data_loader_train):
            print(f"Batch {i}:")
            print("Images shape:", images.shape)
            print("Targets:", targets)
            if i == 2:  # Stop after inspecting a few batches
                break
    except Exception as e:
        print("An error occurred:", e)
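One way to tell whether the stall comes from the dataset itself or from the loader's worker processes is to index the dataset directly, bypassing DataLoader altogether. The following is only a minimal sketch: `time_direct_access` is a hypothetical helper, and the list at the bottom is a stand-in for the real dataset.

```python
import time


def time_direct_access(dataset, n_items=3):
    """Fetch the first few items by index and return the seconds each took."""
    timings = []
    for idx in range(min(n_items, len(dataset))):
        start = time.perf_counter()
        _ = dataset[idx]  # calls dataset.__getitem__ in the main process
        timings.append(time.perf_counter() - start)
    return timings


if __name__ == "__main__":
    # Stand-in for the real dataset: any object with __len__ and __getitem__ works
    demo = ["a", "b", "c", "d"]
    print(time_direct_access(demo))
```

If direct indexing returns quickly, the dataset code is probably not what blocks, and the loader configuration is the next thing to look at.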

This is my dataset class:

    import os

    import numpy as np
    import torch
    from PIL import Image
    from torchvision import transforms


    class Dataset(torch.utils.data.Dataset):
        def __init__(self, grouped_df, df, image_folder, processor, model, device):
            self.df = df
            self.image_folder = image_folder
            self.grouped_df = grouped_df
            self.processor = processor
            self.model = model
            self.device = device

            # Zero-pad image ids to three digits before using them as file names
            formatted_array = []
            for num in df["imageid"].unique():
                num_str = str(num)
                if len(num_str) == 1:
                    formatted_array.append("00" + num_str)
                elif len(num_str) == 2:
                    formatted_array.append("0" + num_str)
                else:
                    formatted_array.append(num_str)
            self.image_ids = formatted_array
            # 0 is assumed to be the background class
            self.classes = [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10]

            # Run SAM once per image and keep the resulting masks in memory
            self.masks = []
            for idx in range(len(self.image_ids)):
                image_name = self.image_ids[idx]
                image_path = os.path.join(self.image_folder, image_name + '.jpg')
                image = Image.open(image_path)
                resized_image = image.resize((350, 350))
                resized_array = np.array(resized_image).astype(np.uint8)
                input_boxes = [self.grouped_df["resized_bbox"][idx]]

                # SAM to find mask
                inputs = self.processor(resized_array, input_boxes=input_boxes, return_tensors="pt").to(self.device)
                image_embeddings = self.model.get_image_embeddings(inputs["pixel_values"])
                inputs.pop("pixel_values", None)
                inputs.update({"image_embeddings": image_embeddings})
                with torch.no_grad():
                    outputs = self.model(**inputs, multimask_output=False)
                masks = self.processor.image_processor.post_process_masks(
                    outputs.pred_masks.cpu(),
                    inputs["original_sizes"].cpu(),
                    inputs["reshaped_input_sizes"].cpu())
                masks = torch.as_tensor(np.array(list(map(np.array, masks)), dtype=np.uint8))
                masks = masks.squeeze(dim=0).squeeze(dim=1)
                self.masks.append(masks)

        def __getitem__(self, idx):
            image_name = self.image_ids[idx]
            image_path = os.path.join(self.image_folder, image_name + '.jpg')
            image = Image.open(image_path)
            image_id = idx

            # Resize image
            resized_image = image.resize((350, 350))
            image = np.array(resized_image).astype(np.uint8)
            image_normalized = image / 255.0

            # Get classes
            labels = torch.as_tensor(self.grouped_df["classid"][idx], dtype=torch.int64)
            # Get boxes
            boxes = torch.as_tensor(self.grouped_df["resized_bbox"][idx], dtype=torch.float32)
            # Calculate the area
            area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])
            # Get mask
            masks = self.masks[idx]
            # Suppose all instances are not crowd
            iscrowd = torch.zeros((boxes.shape[0],), dtype=torch.int64)

            # Create target
            target = {}
            target["iscrowd"] = iscrowd
            target['boxes'] = boxes
            target["labels"] = labels
            target["masks"] = masks
            target["area"] = area
            target["image_id"] = image_id
            return transforms.ToTensor()(image_normalized), target

        def __len__(self):
            return len(self.image_ids)
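Because `__init__` runs SAM on every image before the first batch can be requested, constructing the dataset can itself take a long time, and each worker process receives its own copy of the dataset object with everything stored on it. One possible alternative, shown purely as a sketch, is to compute a mask the first time it is requested and reuse it afterwards. `LazyMaskCache` and `fake_compute_mask` are hypothetical names; the stand-in function takes the place of the real SAM call.

```python
from typing import Any, Callable, Dict


class LazyMaskCache:
    """Compute a mask on first access and reuse it afterwards."""

    def __init__(self, compute_mask: Callable[[int], Any]):
        self._compute_mask = compute_mask  # hypothetical: would wrap the SAM call
        self._cache: Dict[int, Any] = {}

    def __getitem__(self, idx: int) -> Any:
        if idx not in self._cache:
            self._cache[idx] = self._compute_mask(idx)
        return self._cache[idx]


calls = []


def fake_compute_mask(idx):
    # Stand-in for the SAM forward pass; records every call it receives
    calls.append(idx)
    return [[idx]]


masks = LazyMaskCache(fake_compute_mask)
masks[0]
masks[0]  # served from the cache, no second call
masks[2]
print(calls)  # [0, 2]
```

With `num_workers > 0` each worker would keep its own cache, so this pattern fits best with `num_workers=0` or with masks saved to disk ahead of time.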

Then I split the dataset and create the loaders with torch.utils.data.DataLoader:

    torch.manual_seed(1)
    indices = torch.randperm(len(dataset)).tolist()
    test_split = 0.2
    size = int(len(dataset) * test_split)
    dataset_train = torch.utils.data.Subset(dataset, indices[:-size])
    dataset_test = torch.utils.data.Subset(dataset, indices[-size:])

    data_loader_train = torch.utils.data.DataLoader(
        dataset_train, batch_size=4, shuffle=True, num_workers=8,
        collate_fn=utils.collate_fn)
    data_loader_test = torch.utils.data.DataLoader(
        dataset_test, batch_size=4, shuffle=False, num_workers=8,
        collate_fn=utils.collate_fn)
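One detail of the split worth checking: if `size` comes out as 0 (fewer than five samples at `test_split = 0.2`), `indices[:-0]` is empty and `indices[-0:]` is the whole list, so the training subset ends up empty and the test subset contains everything. A minimal sketch that slices by the training count instead; `split_indices` is a hypothetical helper, and Python's `random` module stands in for `torch.randperm` only to keep the example dependency-free.

```python
import random


def split_indices(n_items, test_split=0.2, seed=1):
    """Return (train_indices, test_indices) for a shuffled range(n_items)."""
    indices = list(range(n_items))
    random.Random(seed).shuffle(indices)
    n_test = int(n_items * test_split)
    n_train = n_items - n_test
    # Slicing by the train count stays correct even when n_test == 0
    return indices[:n_train], indices[n_train:]


train_idx, test_idx = split_indices(10)
print(len(train_idx), len(test_idx))  # 8 2
```

The two index lists can then be passed to `torch.utils.data.Subset` in the same way as above.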

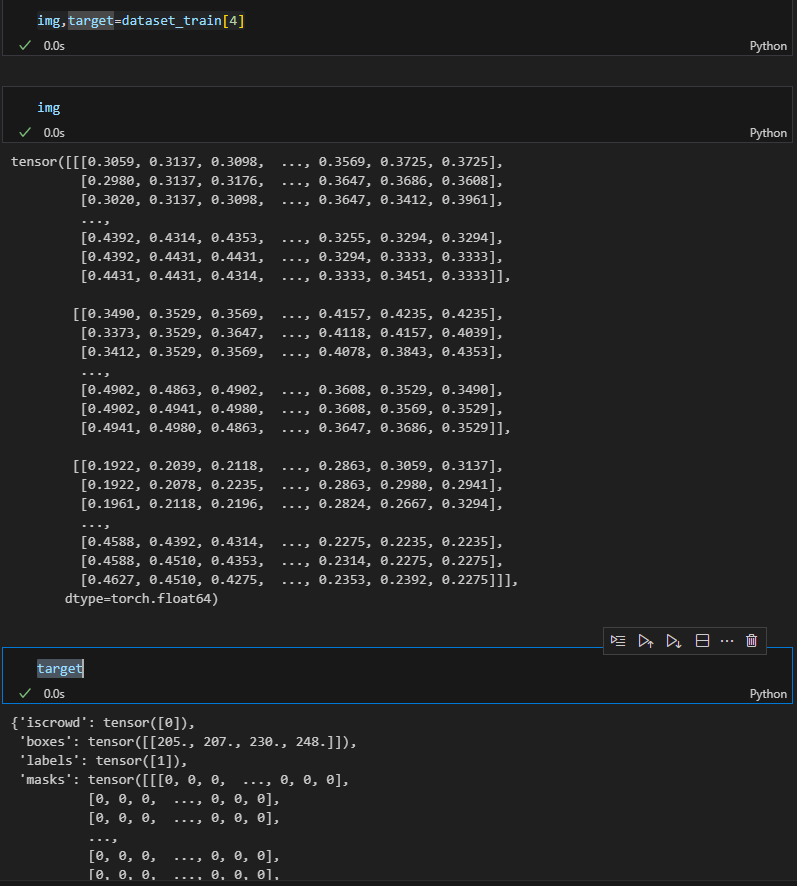

and this is the output