Hey,

I want to build a stateful LSTM.

I set the DataLoader batch size to 10000, but when I initialize the hidden and cell states I get an error saying the batch size should be 5000.

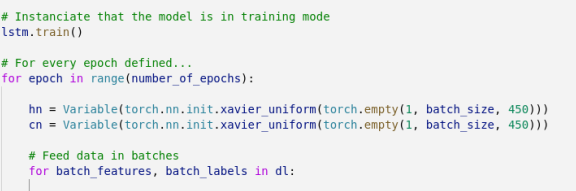

Here is my DataLoader:

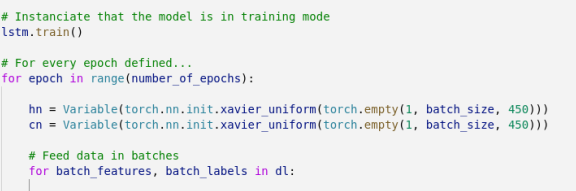

I only initialize the states before a new epoch starts, as in the photo below:

I need help figuring out the DataLoader batch size problem, since I have to pass the batch size when I initialize the states!

Thanks for the help!

Regards

André

Hello Andre, do you have a particular reason why you want such a gigantic batch size? Most training pipelines I’ve seen have a batch size between 8 and 128. You may have a very good reason for setting it so high, but just wanted to explicitly check that, since it seems very different from what other practitioners tend to do.

Hey,

I have about 89 million data points in my dataset, so I thought that batch size would be a good choice. If I am wrong, could you please explain why?

Moreover, I want to build a stateful LSTM. Where should I put the initialization of the cell and hidden states?

Thanks in advance,

Regards

André

Hi, I’d recommend you start with a batch size that’s close to what others have used, maybe on the high end of that (say, 64 or 128). In practice, models trained with very large batches are often reported to generalize worse to new data. I think there can also be floating point issues. Here’s an experiment where they show that, at least in their setup, large batch sizes do poorly.

Regarding the stateful LSTM, I’m not sure if there’s a direct way to do that in PyTorch, but there are other threads such as this one that discuss how to accomplish the same thing.
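For readers who land here with the same question, one common way to get stateful behaviour in PyTorch is to create the hidden and cell states once per epoch and pass them from batch to batch, detaching them so gradients do not flow back into earlier batches. The sketch below is only an illustration under assumptions, not the original poster's code: the sizes, the toy data, and the `head` layer are hypothetical. It uses `drop_last=True` so every batch matches the shape of the carried states. The screenshots are not available, so the cause of the 10000 vs. 5000 mismatch is unknown; two things worth checking are a smaller final batch and a wrapper such as `nn.DataParallel`, which splits each batch across GPUs.

```python
import torch
import torch.nn as nn
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical toy sizes, chosen only so the sketch runs quickly.
batch_size, seq_len, n_features, hidden_size = 4, 5, 3, 8

# 18 samples is not a multiple of 4, so drop_last=True discards the short final batch.
data = torch.randn(18, seq_len, n_features)
targets = torch.randn(18, 1)
# shuffle=False keeps the batch order fixed, which carrying state across batches relies on.
loader = DataLoader(TensorDataset(data, targets), batch_size=batch_size,
                    shuffle=False, drop_last=True)

lstm = nn.LSTM(n_features, hidden_size, batch_first=True)
head = nn.Linear(hidden_size, 1)  # hypothetical output layer
optimizer = torch.optim.SGD(list(lstm.parameters()) + list(head.parameters()), lr=0.01)
loss_fn = nn.MSELoss()

seen_batch_sizes = []
for epoch in range(2):
    # Reset the states once per epoch; shape is (num_layers, batch, hidden_size).
    h = torch.zeros(1, batch_size, hidden_size)
    c = torch.zeros(1, batch_size, hidden_size)
    for x, y in loader:
        seen_batch_sizes.append(x.size(0))
        # Detach so backpropagation stops at the batch boundary.
        h, c = h.detach(), c.detach()
        out, (h, c) = lstm(x, (h, c))
        loss = loss_fn(head(out[:, -1]), y)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
```

Carrying state this way only makes sense if row `i` of each batch continues the sequence from row `i` of the previous batch, so the dataset has to be laid out accordingly.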

From the study you pointed out, it is true that large batch sizes perform poorly. However, with the amount of data I have, training with a batch of 128 will take a very long time.

You can have your DataLoader pass something less than the entire dataset per epoch, and see if you see good performance regardless. In other words, your model might train well even without seeing the full dataset each epoch.
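One way to try this suggestion is to draw a fresh random subset of indices at the start of each epoch and wrap it in `Subset`. This is a minimal sketch with a hypothetical toy dataset and a hypothetical `samples_per_epoch` value, not a recommendation for specific numbers.

```python
import torch
from torch.utils.data import DataLoader, Subset, TensorDataset

# Hypothetical stand-in for the real (much larger) dataset.
full = TensorDataset(torch.randn(1000, 3), torch.randn(1000, 1))
samples_per_epoch = 256  # hypothetical: how much of the data one "epoch" sees
batch_size = 64

batches_per_epoch = []
for epoch in range(3):
    # Pick a different random slice of the dataset for every epoch.
    idx = torch.randperm(len(full))[:samples_per_epoch].tolist()
    loader = DataLoader(Subset(full, idx), batch_size=batch_size, shuffle=True)
    n_batches = 0
    for x, y in loader:
        n_batches += 1  # the training step would go here
    batches_per_epoch.append(n_batches)
```

Note that random subsets break the ordering between consecutive batches, so for a stateful LSTM a contiguous slice of the data per epoch would probably be a better fit than a random one.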
