Hi there,

I am working with the cityscapes dataset and want to use a DataLoader with several workers to speed up the training process, but with num_workers>0 only two CPU cores are used.

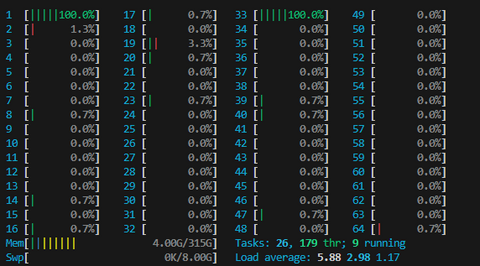

E.g. with num_workers=8, only 2 cores are used:

While trying to solve the problem I found this discussion and noticed that when I add

os.system("taskset -p 0xffffffffffffffff %d" % os.getpid())

at the beginning of my Dataset's `__getitem__` method, the number of cores in use equals num_workers and I see a considerable speedup.
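The same reset can probably be done once per worker from Python instead of shelling out to taskset on every item. Below is a minimal sketch, assuming Linux (`os.sched_setaffinity` only exists on some Unix platforms). The function name `reset_worker_affinity` is made up for illustration; the idea is to pass it as the DataLoader's `worker_init_fn`, which is called once in each worker process with the worker id.

```python
import os


def reset_worker_affinity(worker_id: int) -> None:
    """Let the calling process run on every CPU the OS will grant it.

    Hypothetical helper, intended to be passed as `worker_init_fn`.
    """
    if not hasattr(os, "sched_setaffinity"):
        return  # not available on this platform (e.g. macOS, Windows)
    try:
        # pid 0 means "the calling process", i.e. the worker itself.
        os.sched_setaffinity(0, range(os.cpu_count() or 1))
    except OSError:
        # The request can be rejected in restricted environments;
        # in that case the existing mask is left unchanged.
        pass


# Hypothetical usage, with `dataset` standing in for the Cityscapes dataset:
# loader = torch.utils.data.DataLoader(
#     dataset, num_workers=8, worker_init_fn=reset_worker_affinity)
```

This only widens the mask inside each worker. It does not explain why the mask was narrowed in the parent process in the first place.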

It seems like the affinity masks are changed in each worker’s first iteration and then stay unchanged:

pid 1280270's current affinity mask: 100000001

pid 1280270's new affinity mask: ffffffffffffffff

pid 1280333's current affinity mask: 100000001

pid 1280333's new affinity mask: ffffffffffffffff

pid 1280459's current affinity mask: 100000001

pid 1280459's new affinity mask: ffffffffffffffff

pid 1280396's current affinity mask: 100000001

pid 1280396's new affinity mask: ffffffffffffffff

pid 1280270's current affinity mask: ffffffffffffffff

pid 1280270's new affinity mask: ffffffffffffffff

pid 1280396's current affinity mask: ffffffffffffffff

pid 1280396's new affinity mask: ffffffffffffffff

pid 1280270's current affinity mask: ffffffffffffffff

pid 1280270's new affinity mask: ffffffffffffffff

pid 1280396's current affinity mask: ffffffffffffffff

pid 1280396's new affinity mask: ffffffffffffffff

pid 1280333's current affinity mask: ffffffffffffffff

pid 1280333's new affinity mask: ffffffffffffffff

pid 1280270's current affinity mask: ffffffffffffffff

pid 1280270's new affinity mask: ffffffffffffffff

pid 1280396's current affinity mask: ffffffffffffffff

pid 1280396's new affinity mask: ffffffffffffffff
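For reading the output above: taskset prints the mask as a hexadecimal bitmask in which bit n stands for logical CPU n. A small sketch for decoding it (the helper name `mask_to_cpus` is made up for illustration):

```python
def mask_to_cpus(mask_hex: str) -> list:
    """Return the logical CPU indices whose bits are set in a taskset hex mask."""
    mask = int(mask_hex, 16)
    return [cpu for cpu in range(mask.bit_length()) if (mask >> cpu) & 1]


print(mask_to_cpus("100000001"))              # [0, 32]
print(len(mask_to_cpus("ffffffffffffffff")))  # 64
```

So the initial mask 100000001 allows only logical CPUs 0 and 32, which is consistent with just two cores being busy, while ffffffffffffffff allows CPUs 0 through 63.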

I don’t have much experience with multiprocessing, but this behavior seems quite strange to me, and I think there should be a better way to solve the problem than resetting the affinity on every call.

Does anyone have an idea what the problem could be and how it could be solved?