Hello everyone,

I am working on a model which uses custom datasets and dataloaders.

I use PyTorch + PyTorch Lightning. The problem is that, in the pl.LightningDataModule, the DataLoader I created does not drop the last batch (which is smaller than the others), even though drop_last=True is set.

Here is my code:

    def setup(self, stage: Optional[str] = None):
        if stage == 'fit':
            self.train_dataset = LSTMDataset(
                data=self.train_data,
                targets=self.train_targets,
                seq_len=self.seq_len,
                target_seq_len=self.target_seq_len,
                step_size=self.step_size,
                forecast_horizon=self.forecast_horizon,
                transform=self.transform,
                target_transform=self.target_transform
            )
            train_length = len(self.train_dataset)
            val_length = int(round(train_length * self.val_split))
            self.train_dataset, self.val_dataset = random_split(
                self.train_dataset, [train_length - val_length, val_length]
            )
            self.train_loader = torch.utils.data.DataLoader(
                dataset=self.train_dataset,
                batch_size=self.batch_size,
                shuffle=self.shuffle,
                drop_last=True,
                pin_memory=True
            )
            self.val_loader = torch.utils.data.DataLoader(
                dataset=self.val_dataset,
                batch_size=self.batch_size,
                shuffle=self.shuffle,
                drop_last=True,
                pin_memory=True
            )
        elif stage == 'test':
            self.test_dataset = LSTMDataset(
                data=self.test_data,
                targets=self.test_targets,
                seq_len=self.seq_len,
                target_seq_len=self.target_seq_len,
                step_size=self.step_size,
                forecast_horizon=self.forecast_horizon,
                transform=self.transform,
                target_transform=self.target_transform
            )
            self.test_loader = torch.utils.data.DataLoader(
                dataset=self.test_dataset,
                batch_size=self.batch_size,
                shuffle=self.shuffle,
                drop_last=True,
                pin_memory=True
            )
        elif stage == 'predict':
            self.predict_dataset = LSTMDataset(
                data=self.test_data,
                targets=self.test_targets,
                seq_len=self.seq_len,
                target_seq_len=self.target_seq_len,
                step_size=self.step_size,
                forecast_horizon=self.forecast_horizon,
                transform=self.transform,
                target_transform=self.target_transform
            )
            self.predict_loader = torch.utils.data.DataLoader(
                dataset=self.predict_dataset,
                batch_size=self.batch_size,
                shuffle=self.shuffle,
                drop_last=True,
                pin_memory=True
            )

    def train_dataloader(self):
        return self.train_loader

    def val_dataloader(self):
        return self.val_loader

    def test_dataloader(self):
        return self.test_loader

    def predict_dataloader(self):
        return self.predict_loader

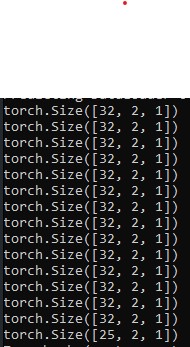

This is an extract of the code. I do not really understand the error… While debugging, the console also shows the following with batch_size 32:

The shape prints are generated in the prediction step, so the prediction loader is the one to look at.
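For reference, this is the behaviour I would expect from drop_last, written as a small plain-Python sketch rather than my actual loader. The helper name `expected_batch_sizes` and the sample count of 100 are made up for illustration; only the batch size of 32 comes from my run.

```python
from typing import List


def expected_batch_sizes(n_samples: int, batch_size: int, drop_last: bool) -> List[int]:
    """Return the size of each batch when n_samples are split sequentially."""
    full_batches, remainder = divmod(n_samples, batch_size)
    sizes = [batch_size] * full_batches
    # The trailing, smaller batch only survives when drop_last is False.
    if remainder and not drop_last:
        sizes.append(remainder)
    return sizes


# Hypothetical numbers: 100 samples in the prediction dataset, batch_size 32.
print(expected_batch_sizes(100, 32, drop_last=True))   # [32, 32, 32]
print(expected_batch_sizes(100, 32, drop_last=False))  # [32, 32, 32, 4]
```

If the shapes printed in the prediction step end with a batch smaller than 32, the loader that actually runs is behaving like the drop_last=False case, not like the one I configured.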

If you have any idea for a code change or how to fix the bug, I would be happy to hear it.

Best regards,

~ Linus