Hi all,

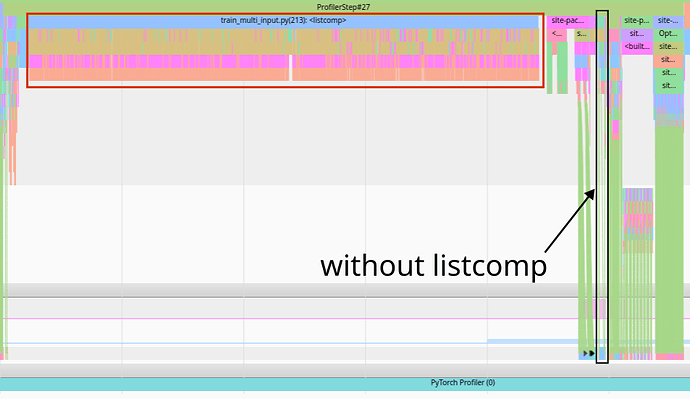

I've been noticing severe CPU bottlenecks in my code: CPU utilization is almost always at ~100%, while the GPU is consistently underutilized. I tried moving all of the data to the GPU up front. The code runs fine, but it gave no noticeable speedup, and the autograd profiler shows only a 15% decrease in `to(device)` calls. Afterwards, I noticed that my dataset (~34 GB) is significantly larger than the memory available on my current card, a Tesla K80. So I'm wondering whether DataLoader is making implicit calls that move data from CPU to GPU or vice versa.

Another thing I suspect is that two of the elements returned by `collate_fn` (`evt_ids`, `evt_names`) aren't moved to the GPU, so each batch contains a mix of GPU and CPU elements. Would this make a difference?

```python
import torch


def collate_fn(samples):
    X = [s[0] for s in samples]
    y = [s[1] for s in samples]
    w = [s[2] for s in samples]
    evt_ids = [s[3] for s in samples]
    evt_names = [s[4] for s in samples]

    # custom function padding different-sized inputs (not shown)
    X, adj_mask, batch_nb_nodes = pad_batch(X)

    device = torch.device('cuda')

    # every numeric field is copied to the GPU here, inside collate_fn
    X = torch.as_tensor(X, dtype=torch.float32).to(device)
    y = torch.as_tensor(y, dtype=torch.float32).to(device)
    w = torch.as_tensor(w, dtype=torch.float32).to(device)
    adj_mask = torch.as_tensor(adj_mask, dtype=torch.float32).to(device)
    batch_nb_nodes = torch.as_tensor(batch_nb_nodes, dtype=torch.float32).to(device)

    # evt_ids and evt_names stay as CPU-side Python lists
    return X, y, w, adj_mask, batch_nb_nodes, evt_ids, evt_names
```
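A commonly recommended alternative (swapped in here, not what the code above does) is to keep `collate_fn` purely on the CPU and do the host-to-device copy in the training loop. Besides separating data preparation from GPU work, this keeps `collate_fn` usable with `num_workers > 0`, since CUDA generally can't be initialized inside forked DataLoader worker processes. A rough sketch, assuming each `s[0]` is an `(n_nodes, n_features)` array and `s[1]`, `s[2]` are scalars; `pad_batch_sketch` is a hypothetical stand-in for the real `pad_batch`, which isn't shown:

```python
import numpy as np
import torch


def pad_batch_sketch(X):
    """Hypothetical stand-in for pad_batch: zero-pad variable-sized node sets.

    X is a list of arrays shaped (n_nodes_i, n_features). Returns the padded
    features (B, N_max, F), a node-pair mask (B, N_max, N_max) and node counts (B,).
    """
    nb_nodes = np.array([len(x) for x in X])
    n_max, n_feat = nb_nodes.max(), np.asarray(X[0]).shape[1]
    padded = np.zeros((len(X), n_max, n_feat), dtype=np.float32)
    mask = np.zeros((len(X), n_max, n_max), dtype=np.float32)
    for b, x in enumerate(X):
        n = len(x)
        padded[b, :n] = x
        mask[b, :n, :n] = 1.0  # 1 where both nodes are real, 0 for padding
    return padded, mask, nb_nodes


def collate_fn_cpu(samples):
    # Same fields as the original collate_fn, but every tensor stays on the CPU.
    X = [s[0] for s in samples]
    y = [s[1] for s in samples]
    w = [s[2] for s in samples]
    evt_ids = [s[3] for s in samples]
    evt_names = [s[4] for s in samples]

    X, adj_mask, batch_nb_nodes = pad_batch_sketch(X)
    return (
        torch.as_tensor(X),
        torch.as_tensor(y, dtype=torch.float32),
        torch.as_tensor(w, dtype=torch.float32),
        torch.as_tensor(adj_mask),
        torch.as_tensor(batch_nb_nodes, dtype=torch.float32),
        evt_ids,  # metadata stays as Python lists; it never needs to reach the GPU
        evt_names,
    )
```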

```python
from torch.utils.data import DataLoader

# Load multiple files and switch between them every "epoch"
multi_train_loader = []
for file in args.train_file:
    loader = DataLoader(
        dataset=MyDataset(file, args.nb_train),  # hypothetical name; the real dataset class isn't shown
        batch_size=args.batch_size,
        shuffle=True,
        collate_fn=collate_fn,
        drop_last=True)
    multi_train_loader.append(loader)

for epoch in range(args.nb_epoch):
    train_loader = multi_train_loader[epoch % len(multi_train_loader)]
    for step, batch in enumerate(train_loader):
        X, y, w, adj_mask, batch_nb_nodes, _, _ = batch
```
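With a CPU-side collate function, the host-to-device copy moves into the training loop. A minimal sketch of that pattern: `pin_memory=True` asks DataLoader to place batches in page-locked host memory, which is what allows `.to(device, non_blocking=True)` to overlap the copy with GPU work, and `num_workers` runs loading and collation in background processes. `make_loader` and `move_to_device` are hypothetical helper names, and whether this removes the bottleneck described above isn't something the post lets us confirm.

```python
import torch
from torch.utils.data import DataLoader


def make_loader(dataset, batch_size, collate_fn, num_workers=4):
    # Hypothetical helper: same options as in the post, plus background workers
    # and pinned (page-locked) host memory when a CUDA device is present.
    return DataLoader(
        dataset,
        batch_size=batch_size,
        shuffle=True,
        collate_fn=collate_fn,
        drop_last=True,
        num_workers=num_workers,
        pin_memory=torch.cuda.is_available(),
    )


def move_to_device(batch, device):
    # Copy tensors to the device; pass non-tensors (e.g. evt_ids, evt_names) through.
    # non_blocking=True only overlaps the copy with compute when the source is pinned.
    return tuple(
        t.to(device, non_blocking=True) if isinstance(t, torch.Tensor) else t
        for t in batch
    )
```

In the loop from the post, the unpacking line would then become `X, y, w, adj_mask, batch_nb_nodes, _, _ = move_to_device(batch, device)`, with `device = torch.device('cuda')` defined once outside the loop. The default of 4 workers is an arbitrary starting point, not a tuned value.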