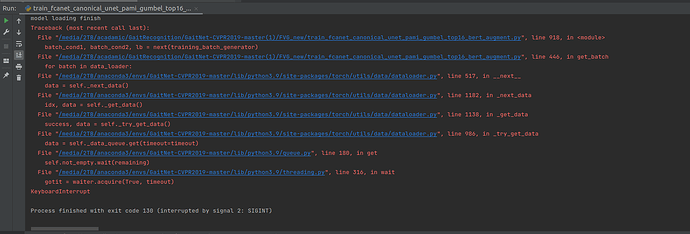

When I increase my training set size from 226 to 998, the DataLoader hangs and the program just stays there. When I click the stop button, the terminal shows that the code stopped here:

I tried setting `num_workers = 0`, but it didn't help. I can't reduce the `batch_size` because that would impair my model's performance.
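For reference, here is a minimal sketch of the loader setup described above. It assumes a map-style dataset. The random tensors, the feature size of 16, and `batch_size=32` are hypothetical stand-ins, because the post doesn't show the real dataset or batch size. With `num_workers=0`, batches are loaded in the main process and no worker subprocesses are started.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Hypothetical stand-in for the 998-sample training set (shapes are assumptions)
features = torch.randn(998, 16)
labels = torch.randint(0, 2, (998,))
dataset = TensorDataset(features, labels)

# num_workers=0: every batch is loaded in the main process, no worker subprocesses.
# batch_size stays fixed (32 here is a placeholder, not the poster's value).
loader = DataLoader(dataset, batch_size=32, shuffle=True, num_workers=0)

num_batches = 0
num_samples = 0
for x, y in loader:
    num_batches += 1
    num_samples += x.shape[0]

print(num_batches, num_samples)
```

With 998 samples and a batch size of 32, the loop runs 31 full batches plus one final batch of 6, because `drop_last` defaults to `False`.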