I’m trying to add random-scale augmentation to my training loop. My approach is a dataset member function that picks a new random scale factor on each iteration, so that every image in a batch is resized by the same factor and all samples in that batch keep the same dimensions. However, when stepping through with the debugger, I see that the sizes never actually change. With a breakpoint inside the resample function, `output_shape` is updated correctly, but with a breakpoint inside the transform function, it is always at the original scale.

When I call `self._training_loader.dataset.resample_scale()`, am I just modifying a copy of the dataset object, so that the instance actually used by the training loop never changes?
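One way to test that hypothesis: if the `DataLoader` is created with `num_workers > 0`, PyTorch replicates the dataset into each worker process when the iterator is created, so every worker holds its own copy. The stdlib-only sketch below imitates that by pickling a toy dataset (`ToyDataset` is hypothetical and its `resample_scale` is simplified to always double the size) and checks whether a change made to the original reaches the copy.

```python
import pickle


class ToyDataset:
    """Hypothetical stand-in for the real dataset; only output_shape matters here."""

    def __init__(self, base_size):
        self.base_size = list(base_size)
        self.output_shape = list(base_size)

    def resample_scale(self):
        # Simplified for illustration: always double the size.
        self.output_shape = [2 * x for x in self.base_size]


main_copy = ToyDataset(base_size=[640, 480])

# Stand-in for the copy a worker process receives when the iterator starts.
worker_copy = pickle.loads(pickle.dumps(main_copy))

# The training loop only ever touches the main-process object.
main_copy.resample_scale()

print("main process:", main_copy.output_shape)   # [1280, 960]
print("worker copy: ", worker_copy.output_shape)  # [640, 480]
```

If this matches what is happening, rerunning with `num_workers=0` (so the dataset is used directly in the main process) would be a quick way to confirm it.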

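```python
def resample_scale(self, reset=False):
    if hasattr(self, 'scale_range') and not reset:
        # Pick one scale factor; it should apply to the whole next batch.
        scale = random.uniform(*self.scale_range)
        # Scale each dimension and round down to a multiple of 32.
        scale_func = lambda x: int(scale * x / 32.0) * 32
        self.output_shape = [scale_func(x) for x in self.base_size]
    else:
        # No scale range configured (or reset requested): use the base size.
        self.output_shape = self.base_size
```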
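The rounding in `scale_func` can be checked in isolation. The sketch below pulls it out into a hypothetical helper, `snap_to_multiple`, and applies it to an assumed base size of `[640, 480]`. Since PIL's `Image.resize` expects `(width, height)`, `base_size` is assumed to be in that order.

```python
def snap_to_multiple(scale, size, multiple=32):
    """Hypothetical helper: scale `size`, then round DOWN to a multiple of
    `multiple`, mirroring `int(scale * x / 32.0) * 32` in resample_scale."""
    return int(scale * size / float(multiple)) * multiple


base_size = [640, 480]  # assumed (width, height), for illustration only

for scale in (0.75, 1.0, 1.25):
    shape = [snap_to_multiple(scale, x) for x in base_size]
    print(scale, shape)
# 0.75 [480, 352]
# 1.0 [640, 480]
# 1.25 [800, 576]
```

Because each dimension is rounded down independently, the aspect ratio drifts slightly (4:3 becomes 480×352 at 0.75), and any scale for which `scale * x < 32` yields 0, which is unlikely to be a valid resize target.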

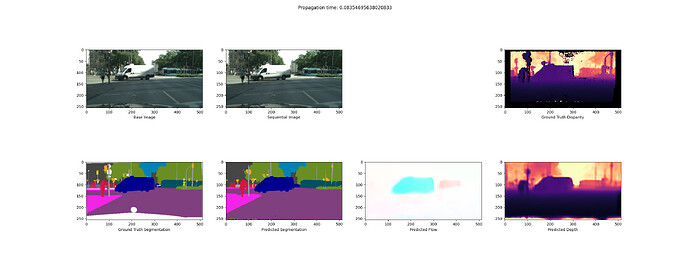

### snippet from synchronised transforms

```python
for key, data in epoch_data.items():
    if key in ["l_img", "r_img", "l_seq", "r_seq"]:
        data = data.resize(self.output_shape, Image.BILINEAR)
        epoch_data[key] = torchvision.transforms.functional.to_tensor(data)
    elif key == "seg":
        data = data.resize(self.output_shape, Image.NEAREST)
        epoch_data[key] = self._seg_transform(data)
    elif key in ["l_disp", "r_disp"]:
        data = data.resize(self.output_shape, Image.NEAREST)
        epoch_data[key] = self._depth_transform(data)
```

### snippet from training loop

```python
for batch_idx, data in enumerate(self._training_loader):
    self._training_loader.dataset.resample_scale()
```
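A pattern that sidesteps mutating the dataset altogether (a swapped-in technique, not what the code above does) is to let a batch sampler pick the scale and send it along with each index: with a `batch_sampler`, `DataLoader` calls `dataset[item]` for every item of each batch list the sampler yields, so the items can be `(index, output_shape)` tuples. The stdlib-only sketch below assumes that convention; `ScaledBatchSampler` and `ToyDataset` are hypothetical names.

```python
import random


class ScaledBatchSampler:
    """Hypothetical batch sampler: yields lists of (index, output_shape) pairs,
    with one randomly chosen shape shared by every item in a batch."""

    def __init__(self, num_items, batch_size, base_size, scale_range, seed=None):
        self.num_items = num_items
        self.batch_size = batch_size
        self.base_size = base_size
        self.scale_range = scale_range
        self.rng = random.Random(seed)

    def _sample_shape(self):
        # Same rounding as resample_scale: scale, then round down to a multiple of 32.
        scale = self.rng.uniform(*self.scale_range)
        return tuple(int(scale * x / 32.0) * 32 for x in self.base_size)

    def __iter__(self):
        indices = list(range(self.num_items))
        self.rng.shuffle(indices)
        for start in range(0, len(indices), self.batch_size):
            shape = self._sample_shape()  # one shape per batch
            yield [(i, shape) for i in indices[start:start + self.batch_size]]

    def __len__(self):
        return (self.num_items + self.batch_size - 1) // self.batch_size


class ToyDataset:
    """Hypothetical dataset whose __getitem__ receives the shape with the index."""

    def __getitem__(self, item):
        index, output_shape = item
        # A real dataset would load sample `index` and resize it to output_shape.
        return {"index": index, "shape": output_shape}


sampler = ScaledBatchSampler(num_items=10, batch_size=4,
                             base_size=(640, 480), scale_range=(0.5, 1.5), seed=0)
dataset = ToyDataset()

# Roughly what DataLoader does per batch: dataset[item] for each item in the list.
batches = [[dataset[item] for item in batch] for batch in sampler]
for batch in batches:
    print([sample["shape"] for sample in batch])
```

In a real pipeline this would be wired up roughly as `DataLoader(dataset, batch_sampler=sampler, num_workers=4)`. Because the shape travels with the indices, each worker's copy of the dataset never needs to be modified.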