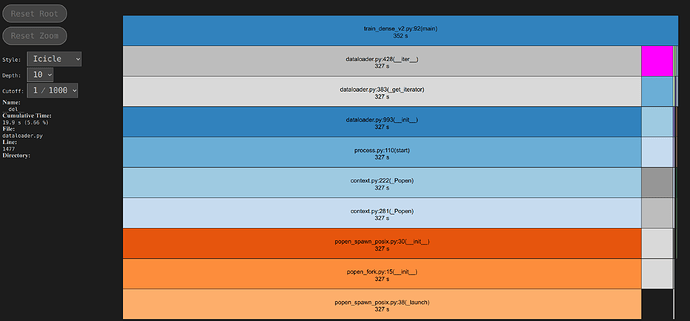

Hi, my profiler returns the following result for the training loop:

There are two problematic things:

- a method in popen_spawn_posix.py that bottlenecks training

- a

__del__method in dataloader.py that takes suspiciously long

The problem applies only when I use num_workers > 0. My data resides on an SSD drive.

Remarks about the code:

- The dataset’s

__getitem__loads a single .npy file ~3MB in size. - The dataset’s

__init__loads a single .json file ~40MB in size - dataloader:

torch.utils.data.DataLoader(

dataset,

batch_size=4,

shuffle=False,

sampler=None,

num_workers=4,

collate_fn=custom_collate_fn,

drop_last=True,

persistent_workers=True,

)

Also, having persistent_workers=True makes we wonder why the next bottleneck is __del__ from dataloader.py.

Thanks for your help.