Thanks everyone.

My dataset contains 15 million images. I have converted them into LMDB format and concatenated the resulting datasets.

At first I set shuffle=False, and the IO in each iteration added no extra cost.

In order to improve the performance, I set shuffle to True and used num_workers.

train_data = ConcatDataset([train_data_1,train_data_2])

train_loader = DataLoader(dataset=train_data, batch_size=64,num_workers=32, shuffle=True,pin_memory=False)

for i in range(epochs):

for j,data in enumerate(train_loader):

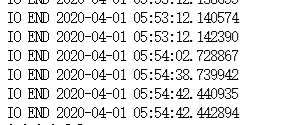

print(i,"ITER",j,"IO END",datetime.now())

continue

But IO takes too much time.
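
To put a number on this, the wait for each batch can be timed on its own, separately from any training work. The following is only a minimal sketch: it assumes nothing beyond the loader being iterable, and `time_batches` and `max_batches` are names made up for illustration, not part of PyTorch.

```python
import time
from typing import Iterable, List


def time_batches(loader: Iterable, max_batches: int = 100) -> List[float]:
    """Return the seconds spent waiting for each of the first max_batches batches."""
    waits: List[float] = []
    it = iter(loader)
    for _ in range(max_batches):
        start = time.perf_counter()
        try:
            next(it)  # blocks until the next batch is ready
        except StopIteration:
            break  # loader exhausted before max_batches was reached
        waits.append(time.perf_counter() - start)
    return waits


if __name__ == "__main__":
    # Stand-in for train_loader: any iterable of batches works here.
    fake_loader = [[0] * 64 for _ in range(10)]
    waits = time_batches(fake_loader, max_batches=5)
    print(len(waits), "batches, mean wait (s):", sum(waits) / len(waits))
```

Passing `train_loader` instead of the stand-in list, once with shuffle=False and once with shuffle=True, should show how much of the slowdown comes from the random access pattern alone.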

Is there something I can do to make IO faster?