I have this code where I tested Normalize and LinearTransformation (torchvision.transforms.Normalize and torchvision.transforms.LinearTransformation, to be more precise).

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.utils.data import DataLoader, Dataset, TensorDataset
from torch.optim import *
import torchvision

trans = torch.tensor([[1/128., 1.], [1., 1.]])

dl = DataLoader(
    torchvision.datasets.MNIST('/data/mnist', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.5,), (0.5,)),
            torchvision.transforms.LinearTransformation(trans)
        ])),
    shuffle=False)

# raw images and labels stored by the dataset
tensor = dl.dataset.data
tensor = tensor.to(dtype=torch.float32)
tr = tensor.reshape(tensor.size(0), -1)   # one flattened image per row
# tr = tr/128
# tr = tr/255

targets = dl.dataset.targets
targets = targets.to(dtype=torch.long)

x_train = tr[0:50000-1]
print(x_train[0])
```

The output of `print(x_train[0])` is always the same, even if I remove both transformations or just one of them. Do you have any idea why this is so?

With LinearTransformation I tried to build a transform equivalent to scaling down the tensor values, e.g. `tr = tr/128` as in the commented-out line of the code.
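For reference, a sketch of why `tr/128` corresponds to a linear transformation only with a D×D matrix. It assumes a 1×28×28 MNIST image, so D = 1·28·28 = 784, and my understanding that LinearTransformation flattens the image into a 1×D row and multiplies it by the matrix. The flatten-multiply-reshape step is emulated here with plain `torch.mm` (the helper `apply_linear` is hypothetical), not by calling torchvision. Scaling every pixel by 1/128 then needs `torch.eye(784) / 128`, while the 2×2 `trans` cannot be multiplied with a 784-element row at all.

```python
import torch

def apply_linear(img, matrix):
    # Hypothetical helper: flatten C*H*W into one row, multiply, restore the shape.
    flat = img.reshape(1, -1)
    return torch.mm(flat, matrix).reshape(img.shape)

img = torch.rand(1, 28, 28)       # stand-in for one MNIST image
D = img.numel()                   # 1 * 28 * 28 = 784

scale = torch.eye(D) / 128.       # D x D matrix that scales every pixel by 1/128
scaled = apply_linear(img, scale)
print(torch.allclose(scaled, img / 128.))   # True

trans = torch.tensor([[1/128., 1.], [1., 1.]])
try:
    apply_linear(img, trans)      # (1, 784) @ (2, 2): inner dimensions differ
except RuntimeError as err:
    print("shape mismatch:", err)
```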

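You are accessing the dataset's raw `data` tensor directly. The transforms are only applied when a sample is fetched through the dataset's indexing, which is what the DataLoader does, so you need to go through the DataLoader object for the transforms to take effect.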

For example, you could do:

```python
next(iter(dl))
```

This should return the first batch (a list holding the image tensor and the label tensor), with the transforms applied.
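To see why reading `dl.dataset.data` bypasses the transforms, here is a small self-contained sketch. `ToyImages` is a hypothetical stand-in for `torchvision.datasets.MNIST`: it stores raw uint8 images and, like torchvision's datasets, applies its transform only in `__getitem__`. The function `to_unit_range` is also hypothetical and does the same arithmetic as `ToTensor()` followed by `Normalize((0.5,), (0.5,))` on a 28×28 uint8 image.

```python
import torch
from torch.utils.data import DataLoader, Dataset

class ToyImages(Dataset):
    """Hypothetical stand-in for torchvision.datasets.MNIST."""
    def __init__(self, data, targets, transform=None):
        self.data = data            # raw uint8 images, never modified
        self.targets = targets
        self.transform = transform

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        img = self.data[idx]
        if self.transform is not None:
            img = self.transform(img)   # the transform runs only here
        return img, self.targets[idx]

def to_unit_range(img):
    # uint8 (28, 28) -> float (1, 28, 28) in [-1, 1]
    return (img.float().unsqueeze(0) / 255. - 0.5) / 0.5

data = torch.randint(0, 256, (10, 28, 28), dtype=torch.uint8)
targets = torch.arange(10)
dl = DataLoader(ToyImages(data, targets, transform=to_unit_range), shuffle=False)

raw = dl.dataset.data[0]            # untouched stored values
images, labels = next(iter(dl))     # transformed batch of size 1
print(raw.dtype)                    # torch.uint8
print(images.shape)                 # torch.Size([1, 1, 28, 28])
```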

More generally, to iterate through your dataset, do:

```python
for i, sample in enumerate(dl):
    print(sample)  # Or any other processing you'd like to perform
```
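If the goal is the flattened `x_train` matrix from the question but with the transforms applied, one option is to iterate over the DataLoader and concatenate the batches. A minimal sketch, assuming a hypothetical in-memory dataset `TransformedImages` that applies its transform in `__getitem__` (as torchvision's MNIST does) and an arbitrary `batch_size=64`; each image is flattened to 784 columns like `tr` in the question.

```python
import torch
from torch.utils.data import DataLoader, Dataset

class TransformedImages(Dataset):
    # Hypothetical dataset: raw uint8 images, transform applied on access.
    def __init__(self, data, transform):
        self.data, self.transform = data, transform

    def __len__(self):
        return len(self.data)

    def __getitem__(self, idx):
        return self.transform(self.data[idx]), idx

def normalize(img):
    # Same arithmetic as ToTensor() followed by Normalize((0.5,), (0.5,)).
    return (img.float().unsqueeze(0) / 255. - 0.5) / 0.5

data = torch.randint(0, 256, (200, 28, 28), dtype=torch.uint8)
dl = DataLoader(TransformedImages(data, normalize), batch_size=64, shuffle=False)

batches = []
for images, _ in dl:
    # images: (batch, 1, 28, 28) -> (batch, 784)
    batches.append(images.reshape(images.size(0), -1))
x_train = torch.cat(batches)
print(x_train.shape)   # torch.Size([200, 784])
```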

Aside

Your usage of LinearTransformation() appears to be inconsistent with the latest API. LinearTransformation() expects a transformation_matrix and a mean_vector. It seems like you’re providing only the transformation_matrix.
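As a rough sketch of what the two-argument form computes (my reading of recent torchvision versions, emulated with plain tensor ops rather than calling the library): the input is flattened, `mean_vector` is subtracted, and the result is multiplied by `transformation_matrix`, which must be D×D with D = C×H×W (784 for MNIST). The helper name `linear_transformation` is hypothetical. With a zero `mean_vector` and `torch.eye(784) / 128` it reduces to the `tr / 128` scaling from the question.

```python
import torch

def linear_transformation(img, transformation_matrix, mean_vector):
    # Hypothetical helper: flatten -> subtract mean -> matrix multiply -> reshape.
    D = img.numel()
    if transformation_matrix.shape != (D, D) or mean_vector.shape != (D,):
        raise ValueError(f"expected a {D}x{D} matrix and a length-{D} mean vector")
    flat = img.reshape(1, D) - mean_vector
    return torch.mm(flat, transformation_matrix).reshape(img.shape)

img = torch.rand(1, 28, 28)
D = img.numel()

# Zero mean + scaled identity: plain scaling by 1/128.
scaled = linear_transformation(img, torch.eye(D) / 128., torch.zeros(D))
print(torch.allclose(scaled, img / 128.))    # True

# A non-zero mean is subtracted before the matrix is applied.
centered = linear_transformation(img, torch.eye(D), torch.full((D,), 0.5))
print(torch.allclose(centered, img - 0.5))   # True
```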


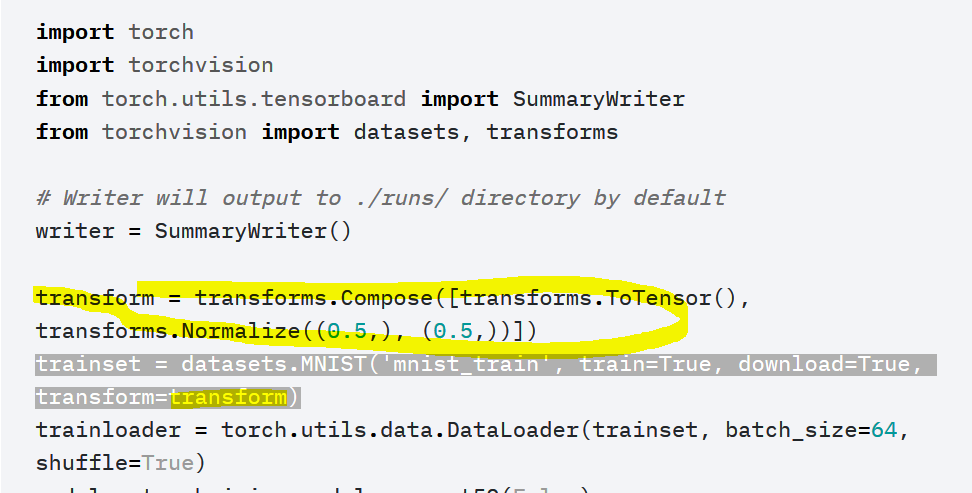

I tried to find where I could match the example from PyTorch website and I found an instance having Compose:

I am already using the DataLoader, and its first parameter is a Dataset: I passed torchvision.datasets.MNIST(..., which is a Dataset.

Yes, I tried that, but if I recall correctly, when I tried to set the mean I got this error:

```
TypeError: __init__() takes 2 positional arguments but 3 were given
```

It seems that I cannot provide the second parameter at all.

PyTorch version 1.0.0

Does using the transform here:

```python
dl = DataLoader(
    torchvision.datasets.MNIST('/data/mnist', train=True, download=True,
        transform=torchvision.transforms.Compose([
            torchvision.transforms.ToTensor(),
            torchvision.transforms.Normalize((0.5,), (0.5,)),
            torchvision.transforms.LinearTransformation(trans)
        ])),
    shuffle=False)
```

really make any sense?

Try the example and you will see that nothing gets transformed. Am I missing something obvious?

Your dataloader does transform the data. What's incorrect is that you're reading the train set's raw data directly rather than the output of the dataloader.

More concretely, don't read `tr[0]`, which is built from `dl.dataset.data`; read the dataloader output instead. Getting the first sample is as simple as calling `next(iter(dl))`.
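Alternatively, when the whole dataset fits in memory, the same preprocessing can be applied directly to the raw tensor without iterating. A sketch, assuming `raw` stands in for `dl.dataset.data` (uint8, shape N×H×W) and that the goal is to reproduce `ToTensor()`'s division by 255 followed by `Normalize((0.5,), (0.5,))`, i.e. x ↦ (x/255 - 0.5)/0.5, which maps 0 to -1 and 255 to 1. The function name `preprocess` is hypothetical.

```python
import torch

def preprocess(raw):
    # uint8 (N, H, W) -> float32 (N, H*W):
    # dividing by 255 gives [0, 1]; Normalize((0.5,), (0.5,)) maps that to [-1, 1].
    x = raw.to(torch.float32) / 255.
    x = (x - 0.5) / 0.5
    return x.reshape(x.size(0), -1)

raw = torch.tensor([[[0, 255], [51, 102]]], dtype=torch.uint8)  # tiny stand-in, shape (1, 2, 2)
out = preprocess(raw)
print(out)
```

Here 0 and 255 map to -1 and 1, while 51 and 102 (0.2 and 0.4 after dividing by 255) map to about -0.6 and -0.2.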
