Hi, this is my dataset and code.

The training code fails with a tensor error, and the transform fails with a PIL Image error.

This is my code:

import pandas as pd
import torch
import torch.nn as nn
import torchvision.transforms as trs
from torch.utils.data import Dataset, DataLoader
from ReadData import mean, std

#Dataset
class welldata(Dataset):
    def __init__(self, press_file='press.csv', label_file='label.csv'):
        self.press = pd.read_csv(press_file)
        self.label = pd.read_csv(label_file)
        self.tr = trs.Compose([
            trs.Normalize((mean), (std)),
            trs.ToTensor()
        ])

    def __len__(self):
        return len(self.press)

    def __getitem__(self, idx):
        press = self.tr(self.press.iloc[idx])
        label = self.tr(self.label.iloc[idx])
        return press, label

well_dataloader = DataLoader(dataset=welldata(), batch_size=5, shuffle=True)

#Create model
class model(nn.Module):
    def __init__(self):
        super(model, self).__init__()
        self.lazy1 = nn.LazyLinear(1)
        self.rnn1 = nn.RNN(2, 2, 20)
        self.relu1 = nn.ReLU()
        self.bifc1 = nn.Bilinear(2, 5, 5)
        self.relu2 = nn.Hardtanh()
        self.lstm1 = nn.LSTM(5, 5)
        self.relu3 = nn.ReLU()

    def forward(self, z1, z2):
        y = self.lazy1(z1)
        y = torch.reshape(y, (z1.size(0), 2))
        y, _ = self.rnn1(y)
        y = self.relu1(y)
        y = self.bifc1(y, z2)
        y = self.relu2(y)
        y, _ = self.lstm1(y)
        y = self.relu3(y)
        return y

#Optimizer
model = model()
optimizer = torch.optim.AdamW(model.parameters(), lr=0.1)

#Loss
loss = nn.SoftMarginLoss()

#Training
for x1, x2, yt in well_dataloader:
    optimizer.zero_grad()
    yp = model(x1, x2)
    loss_value = loss(yp, yt)
    loss_value.backward()
    optimizer.step()
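For comparison, here is a minimal sketch of a dataset that would match this training loop. It rests on a few observations and assumptions: the loop unpacks three values per batch (`x1, x2, yt`) while `__getitem__` returns two; `trs.ToTensor()` expects a PIL image or NumPy array and `trs.Normalize` expects a tensor, so neither accepts a pandas row directly. The class name `WellDataSketch`, the split into `x1_rows`/`x2_rows`/`y_rows`, and all the numbers are hypothetical, and the mean and std are computed from the sample itself rather than imported from `ReadData`.

```python
import torch
from torch.utils.data import Dataset, DataLoader


class WellDataSketch(Dataset):
    """Hypothetical dataset that returns (x1, x2, y) as float32 tensors."""

    def __init__(self, x1_rows, x2_rows, y_rows):
        # Build tensors directly from nested lists; no image transforms involved.
        self.x1 = torch.tensor(x1_rows, dtype=torch.float32)
        self.x2 = torch.tensor(x2_rows, dtype=torch.float32)
        self.y = torch.tensor(y_rows, dtype=torch.float32)
        # Assumption: per-feature statistics taken from the data itself.
        self.mean = self.x1.mean(dim=0)
        self.std = self.x1.std(dim=0).clamp_min(1e-8)

    def __len__(self):
        return len(self.x1)

    def __getitem__(self, idx):
        # Normalize by hand instead of using trs.Normalize.
        x1 = (self.x1[idx] - self.mean) / self.std
        return x1, self.x2[idx], self.y[idx]


# Made-up values, only to show the shapes that come out of the loader.
x1_rows = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6], [0.7, 0.8, 0.9], [1.0, 1.1, 1.2]]
x2_rows = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
y_rows = [[1.0], [-1.0], [1.0], [-1.0]]

loader = DataLoader(WellDataSketch(x1_rows, x2_rows, y_rows), batch_size=2, shuffle=False)
x1, x2, yt = next(iter(loader))
print(x1.shape, x2.shape, yt.shape)
```

With a dataset shaped like this, each batch unpacks into three tensors, which is what the loop `for x1, x2, yt in well_dataloader` expects. Whether those shapes then fit the layers in `model` is a separate question.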

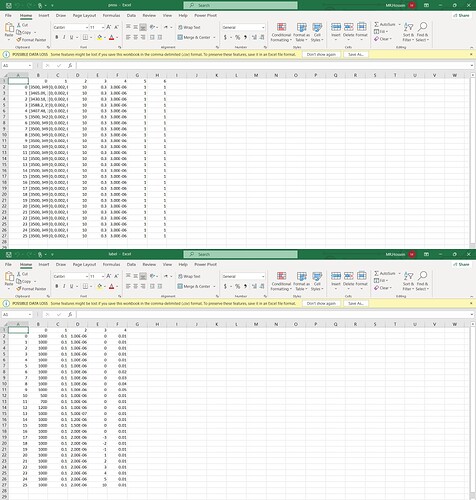

And this is my data.

I created this data after debugging.

Columns 0 and 1 each hold a list containing many floats.

Columns 0 and 1 are the input to the LazyLinear layer,

and the other columns are the input to the RNN,

and they also go into the Bilinear layer.

This data is the label, i.e. the answer the network must produce.
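One thing that may matter here: when a CSV cell holds a whole list, `pd.read_csv` returns that cell as a string, not as a list of floats. The sketch below shows one way such cells could be parsed using only the standard library. The column names `col0`, `col1`, `col2`, the sample values, and the helper `parse_cell` are hypothetical, and it assumes the lists are written in Python list syntax such as `[0.1, 0.2, 0.3]`.

```python
import ast
import csv
import io

# Hypothetical two-row sample; the real press.csv layout is an assumption.
sample = io.StringIO(
    'col0,col1,col2\n'
    '"[0.1, 0.2, 0.3]","[1.0, 2.0]",5.0\n'
    '"[0.4, 0.5, 0.6]","[3.0, 4.0]",6.0\n'
)


def parse_cell(text):
    """Turn one CSV cell into a list of floats or a single float."""
    value = ast.literal_eval(text)  # safely evaluates literals only
    if isinstance(value, (list, tuple)):
        return [float(v) for v in value]
    return float(value)


rows = [
    {name: parse_cell(cell) for name, cell in row.items()}
    for row in csv.DictReader(sample)
]

print(rows[0]["col0"])
print(type(rows[0]["col2"]))
```

After parsing, every cell is either a float or a list of floats, which can be passed to `torch.tensor(...)` as long as the lists in one column all have the same length.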

What's the problem?