I have a custom dataset as shown below:

    import torch
    from torch.utils.data import Dataset


    class MyDataset(Dataset):
        def __init__(self, X, y):
            self.X = X
            self.y = y

        def __len__(self):
            return len(self.y)

        def __getitem__(self, idx):
            return self.X[idx], self.y[idx]


    training_latent_dataset = MyDataset(
        torch.cat(training_latent), torch.cat(training_labels)
    )

    train_latent_loader = torch.utils.data.DataLoader(
        training_latent_dataset, batch_size=batch_size, shuffle=True, **kwargs
    )

If the tensors are already on the GPU and I try to iterate over the DataLoader, I get this error:

    RuntimeError: Caught RuntimeError in DataLoader worker process 0.
    Original Traceback (most recent call last):
      File "/home/muammar/miniconda3/envs/py39/lib/python3.9/site-packages/torch/utils/data/_utils/worker.py", line 287, in _worker_loop
        data = fetcher.fetch(index)
      File "/home/muammar/miniconda3/envs/py39/lib/python3.9/site-packages/torch/utils/data/_utils/fetch.py", line 49, in fetch
        data = [self.dataset[idx] for idx in possibly_batched_index]
      File "/home/muammar/miniconda3/envs/py39/lib/python3.9/site-packages/torch/utils/data/_utils/fetch.py", line 49, in <listcomp>
        data = [self.dataset[idx] for idx in possibly_batched_index]
      File "/tmp/ipykernel_356729/3661499099.py", line 11, in __getitem__
        return self.X[idx], self.y[idx]
    RuntimeError: CUDA error: initialization error
    CUDA kernel errors might be asynchronously reported at some other API call,so the stacktrace below might be incorrect.
    For debugging consider passing CUDA_LAUNCH_BLOCKING=1.

If I move the tensors from GPU to CPU when instantiating the MyDataset class, then everything works well:

training_latent_dataset = MyDataset(

torch.cat(training_latent).cpu(), torch.cat(training_labels).cpu()

)

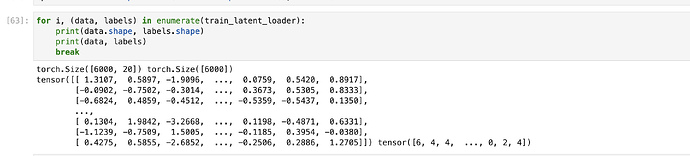

train_latent_loader = torch.utils.data.DataLoader(

training_latent_dataset, batch_size=batch_size, shuffle=True, **kwargs

)
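
For reference, here is a minimal, self-contained sketch of that CPU-based setup. It is only an illustration under stated assumptions: the random tensors stand in for my real `training_latent` and `training_labels`, and the `device`, `num_workers`, and `batch_devices` names are made up for this example. The dataset holds CPU tensors, and each batch is moved to the GPU (when one is available) inside the loop rather than inside `__getitem__`.

```python
import torch
from torch.utils.data import DataLoader, Dataset


class MyDataset(Dataset):
    """Same dataset as above; it is given CPU tensors here."""

    def __init__(self, X, y):
        self.X = X
        self.y = y

    def __len__(self):
        return len(self.y)

    def __getitem__(self, idx):
        return self.X[idx], self.y[idx]


# Stand-in data (assumption): 8 samples with 4 features each, kept on the CPU.
X = torch.randn(8, 4)
y = torch.arange(8)

# Fall back to the CPU so the sketch also runs on a machine without a GPU.
use_cuda = torch.cuda.is_available()
device = torch.device("cuda" if use_cuda else "cpu")

# num_workers=0 keeps the demo in a single process; with CPU tensors in the
# dataset it can be raised when the code runs as a script.
num_workers = 0

loader = DataLoader(
    MyDataset(X, y),
    batch_size=4,
    shuffle=True,
    num_workers=num_workers,
    pin_memory=use_cuda,  # pinned host memory only matters when copying to a GPU
)

batch_devices = []
for xb, yb in loader:
    # Move each batch to the target device in the main process.
    xb = xb.to(device, non_blocking=True)
    yb = yb.to(device, non_blocking=True)
    batch_devices.append(xb.device.type)
```

With this layout, `__getitem__` never touches CUDA, so whatever the worker processes do happens on CPU tensors only. As far as I can tell from the traceback, the error above is raised inside a worker process, which is why I suspect this separation is what makes the CPU version work.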

Is it expected behavior that the tensors have to be on the CPU rather than on the GPU? I could not find anything about this in the documentation.