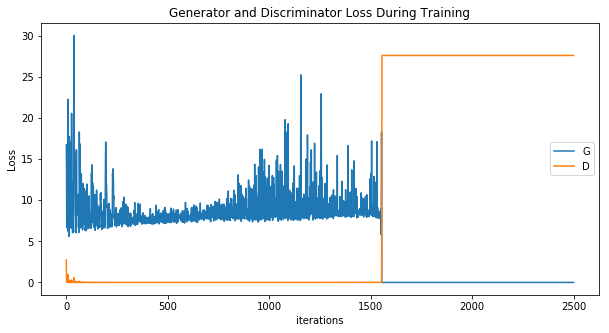

Sometimes when I train a DC-GAN on an image dataset, following the PyTorch DCGAN Tutorial (PyTorch Tutorials 2.2.0+cu121 documentation), the loss of either the Generator or the Discriminator gets stuck at a large value while the other's loss goes to zero. How should I interpret what happens right after iteration 1500 in the attached loss plot? Is this an example of mode collapse? Are there any recommendations for making training more stable? I have tried reducing the learning rate of the Adam optimizer, with varying degrees of success. Thanks!
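
For concreteness, lowering the Adam learning rate in a setup like the tutorial's might look like the sketch below. The small stand-in networks, the `make_optimizers` helper, and the value `1e-4` are assumptions for illustration only, not the configuration actually used here; the tutorial itself uses `lr=0.0002` and `beta1=0.5`.

```python
import torch
import torch.nn as nn

# Hypothetical stand-in networks; a real DCGAN would use the tutorial's
# convolutional Generator and Discriminator instead.
netG = nn.Sequential(nn.Linear(100, 64), nn.ReLU(), nn.Linear(64, 784), nn.Tanh())
netD = nn.Sequential(nn.Linear(784, 64), nn.LeakyReLU(0.2), nn.Linear(64, 1), nn.Sigmoid())


def make_optimizers(lr_g=1e-4, lr_d=1e-4, beta1=0.5):
    """Build one Adam optimizer per network so each learning rate can be tuned separately."""
    opt_g = torch.optim.Adam(netG.parameters(), lr=lr_g, betas=(beta1, 0.999))
    opt_d = torch.optim.Adam(netD.parameters(), lr=lr_d, betas=(beta1, 0.999))
    return opt_g, opt_d


# Assumed values: half the tutorial's default learning rate for both networks.
optimizerG, optimizerD = make_optimizers()
```

Keeping the two learning rates as separate arguments makes it easy to slow down only the network whose loss is collapsing toward zero, rather than changing both at once.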