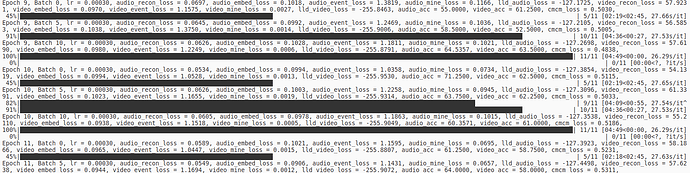

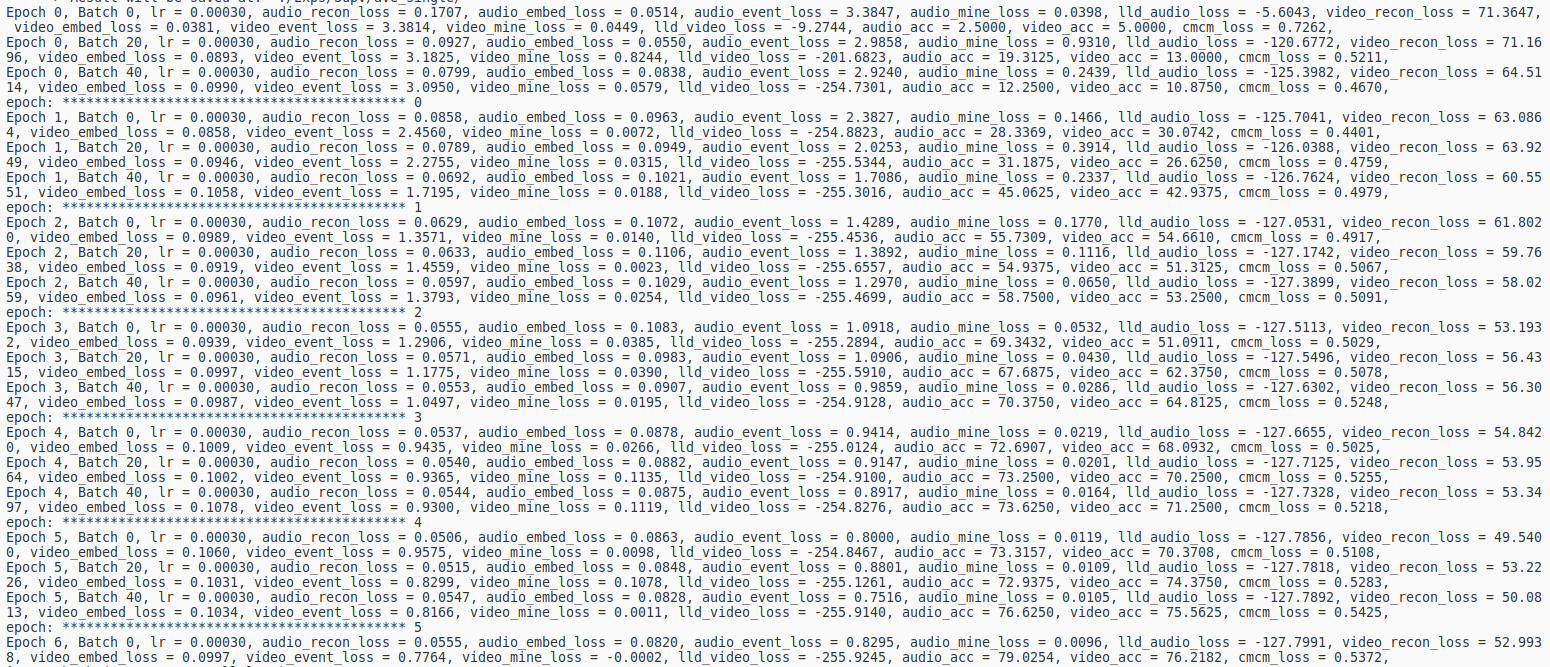

Specifically, the DDP of 3 GPUs needs 15 epochs to reach the accuracy of 5 epochs for a single GPU. It feels like the information calculated by multiple cards is not shared.

sampler.set_epoch(epoch) has settings and should not be related to my problem.

But my model involves a buffer called embedding, which is equivalent to codebook, which is updated with ema. I suspected it might be different for multicard embeddings, but I tried to use reduce_ All (), which has not improved the results.

It is worth mentioning that my model consists of four main parts, Encoder, Decoder, a_ Mi, b_ Mi. Where a_ MI and b_ Mi helps to increase mutual information between modes. I tried to put these four parts into one model for DDP, and the result is no different from setting DDP for each of the four parts.

Has anyone encountered a similar problem? I have seen a lot of similar issues in the forum and have not found a solution yet.

My English is poor, please forgive me. If you need more information, you could tell me.

Here is part of my code:

import ...

# ================================= seed config ============================

SEED = 43

random.seed(SEED)

np.random.seed(seed=SEED)

torch.manual_seed(seed=SEED)

torch.cuda.manual_seed(seed=SEED)

torch.backends.cudnn.deterministic = True

torch.backends.cudnn.benchmark = False

# =============================================================================

def init_distributed_mode():

if'RANK'in os.environ and'WORLD_SIZE'in os.environ:

args.rank = int(os.environ["RANK"])

args.world_size = int(os.environ['WORLD_SIZE'])

args.gpu = int(os.environ['LOCAL_RANK'])

elif'SLURM_PROCID'in os.environ:

args.rank = int(os.environ['SLURM_PROCID'])

args.gpu = args.rank % torch.cuda.device_count()

else:

print('Not using distributed mode')

args.distributed = False

return

args.distributed = True

torch.cuda.set_device(args.gpu)

args.dist_backend = 'nccl'

dist.init_process_group(backend='nccl', init_method=args.dist_url, world_size=args.world_size, rank=args.rank)

dist.barrier()

def main():

# utils variable

global args, logger, writer, dataset_configs

# statistics variable

global best_accuracy, best_accuracy_epoch

best_accuracy, best_accuracy_epoch = 0, 0

# configs

dataset_configs = get_and_save_args(parser)

parser.set_defaults(**dataset_configs)

args = parser.parse_args()

# select GPUs

# os.environ['CUDA_DEVICE_ORDER'] = "PCI_BUS_ID"

# os.environ['CUDA_VISIBLE_DEVICES'] = '0,1,2,3'

init_distributed_mode()

rank = args.rank

device = torch.device(args.device)

checkpoint_path=""

'''dataset selection and dataloader'''

if args.dataset_name == 'ave':

data_root = '../AVE-ECCV18-master/data'

train_data_set = AVEDataset(data_root, split='train')

val_data_set = AVEDataset(data_root, split='val')

train_sampler=torch.utils.data.distributed.DistributedSampler(train_data_set)

val_sampler=torch.utils.data.distributed.DistributedSampler(val_data_set)

# train_batch_sampler=torch.utils.data.BatchSampler(train_sampler,args.batch_size,drop_last=True)

train_dataloader = DataLoader(

train_data_set,

# DDP

# batch_sampler=train_batch_sampler,

shuffle=False,

sampler=train_sampler,

batch_size=args.batch_size,

num_workers=8,

pin_memory=True

)

val_dataloader = DataLoader(

val_data_set,

# DDP

batch_size=args.batch_size,

sampler=val_sampler,

shuffle=False,

num_workers=8,

pin_memory=True

)

else:

raise NotImplementedError

'''model setting'''

Encoder = ...

Video_mi_net = ...

Audio_mi_net = ...

Decoder = ...

'''optimizer setting'''

Encoder.to(device)

Video_mi_net.to(device)

Audio_mi_net.to(device)

Decoder.to(device)

Encoder = nn.parallel.DistributedDataParallel(Encoder, device_ids=[args.gpu])

Video_mi_net = nn.parallel.DistributedDataParallel(Video_mi_net, device_ids=[args.gpu])

Audio_mi_net = nn.parallel.DistributedDataParallel(Audio_mi_net, device_ids=[args.gpu])

Decoder = nn.parallel.DistributedDataParallel(Decoder, device_ids=[args.gpu])

optimizer = torch.optim.Adam(chain(Encoder.module.parameters(), Decoder.module.parameters()), lr=args.lr)

optimizer_video_mi_net = torch.optim.Adam(Video_mi_net.module.parameters(), lr=args.mi_lr)

optimizer_audio_mi_net = torch.optim.Adam(Audio_mi_net.module.parameters(), lr=args.mi_lr)

scheduler = MultiStepLR(optimizer, milestones=[10, 20, 30], gamma=0.5)

'''loss'''

criterion = nn.BCEWithLogitsLoss().cuda()

criterion_event = nn.CrossEntropyLoss().cuda()

'''Tensorboard and Code backup'''

writer = SummaryWriter(args.snapshot_pref)

recorder = Recorder(args.snapshot_pref, ignore_folder="Exps/")

recorder.writeopt(args)

'''Training and Evaluation'''

total_step = 0

for epoch in range(args.n_epoch):

train_dataloader.sampler.set_epoch(epoch)

loss, total_step = train_epoch(device, Encoder.module, Video_mi_net.module, Audio_mi_net.module, Decoder.module, train_dataloader, criterion, criterion_event,

optimizer, optimizer_video_mi_net, optimizer_audio_mi_net, epoch, total_step,True)

scheduler.step()

dist.destroy_process_group()

def _export_log(epoch, total_step, batch_idx, lr, loss_meter):

msg = 'Epoch {}, Batch {}, lr = {:.5f}, '.format(epoch, batch_idx, lr)

for k, v in loss_meter.items():

msg += '{} = {:.4f}, '.format(k, v)

# msg += '{:.3f} seconds/batch'.format(time_meter)

print(msg)

sys.stdout.flush()

loss_meter.update({"batch": total_step})

def to_eval(all_models):

for m in all_models:

m.eval()

def to_train(all_models):

for m in all_models:

m.train()

def train_epoch(device, Encoder, Video_mi_net, Audio_mi_net, Decoder, train_dataloader, criterion, criterion_event, optimizer, optimizer_video_mi_net, optimizer_audio_mi_net, epoch, total_step,is_train=True):

batch_time = AverageMeter()

data_time = AverageMeter()

losses = AverageMeter()

train_acc = AverageMeter()

end_time = time.time()

models = [Encoder, Video_mi_net, Audio_mi_net, Decoder]

to_train(models)

Encoder.double()

Video_mi_net.double()

Audio_mi_net.double()

Decoder.double()

Encoder.to(device)

Video_mi_net.to(device)

Audio_mi_net.to(device)

Decoder.to(device)

optimizer.zero_grad()

mi_iters = 5

if dist.get_rank() == 0:

train_dataloader = tqdm(train_dataloader)

last_n_iter = 0

for n_iter, batch_data in enumerate(train_dataloader):

last_n_iter = n_iter

data_time.update(time.time() - end_time)

'''Feed input to model'''

visual_feature, audio_feature, labels = batch_data

visual_feature.to(device)

audio_feature.to(device)

labels = labels.double().to(device)

labels_foreground = labels[:, :, :-1]

labels_BCE, labels_evn = labels_foreground.max(-1)

labels_event, _ = labels_evn.max(-1)

for i in range(mi_iters):

optimizer_video_mi_net, lld_video_loss, optimizer_audio_mi_net, lld_audio_loss = \

mi_first_forward(audio_feature, visual_feature, Encoder, Video_mi_net, Audio_mi_net, optimizer_video_mi_net, optimizer_audio_mi_net, is_train)

audio_embedding_loss, video_embedding_loss, mi_audio_loss, mi_video_loss, audio_recon_loss, video_recon_loss, \

audio_class, video_class, cmcm_loss = mi_second_forward(audio_feature, visual_feature, Encoder, Video_mi_net, Audio_mi_net, Decoder, is_train)

audio_event_loss = criterion_event(audio_class, labels_event.to(device))

video_event_loss = criterion_event(video_class, labels_event.to(device))

audio_acc = compute_accuracy_supervised(audio_class, labels)

video_acc = compute_accuracy_supervised(video_class, labels)

loss_items = {

"audio_recon_loss":audio_recon_loss.item(),

"audio_embed_loss":audio_embedding_loss.item(),

"audio_event_loss":audio_event_loss.item(),

"audio_mine_loss":mi_audio_loss.item(),

"lld_audio_loss": lld_audio_loss.item(),

"video_recon_loss":video_recon_loss.item(),

"video_embed_loss":video_embedding_loss.item(),

"video_event_loss":video_event_loss.item(),

"video_mine_loss":mi_video_loss.item(),

"lld_video_loss": lld_video_loss.item(),

"audio_acc": audio_acc.item(),

"video_acc": video_acc.item(),

"cmcm_loss": cmcm_loss.item()

}

#loss_items = {}

metricsContainer.update("loss", loss_items)

loss = audio_recon_loss + video_recon_loss + audio_embedding_loss \

+ video_embedding_loss + mi_audio_loss + mi_video_loss + audio_event_loss + video_event_loss + cmcm_loss

if n_iter % 20 == 0:

_export_log(epoch=epoch, loss_meter=metricsContainer.calculate_average("loss"))

loss.backward()

'''Clip Gradient'''

if args.clip_gradient is not None:

for model in models:

total_norm = clip_grad_norm_(model.parameters(), args.clip_gradient)

'''Update parameters'''

optimizer.step()

optimizer.zero_grad()

losses.update(loss.item(), visual_feature.size(0) * 10)

batch_time.update(time.time() - end_time)

end_time = time.time()

if device != torch.device("cpu"):

torch.cuda.synchronize(device)

return losses.avg, last_n_iter + total_step

def mi_first_forward(audio_feature, visual_feature, Encoder, Video_mi_net, Audio_mi_net, optimizer_video_mi_net, optimizer_audio_mi_net,is_train):

optimizer_video_mi_net.zero_grad()

optimizer_audio_mi_net.zero_grad()

_, video_club_feature, audio_encoder_result, \

video_vq, audio_vq, _, _, _ = Encoder(audio_feature, visual_feature, is_train)

video_club_feature = video_club_feature.detach()

audio_encoder_result = audio_encoder_result.detach()

video_vq = video_vq.detach()

audio_vq = audio_vq.detach()

lld_video_loss = -Video_mi_net.loglikeli(video_vq, video_club_feature)

if is_train:

lld_video_loss.backward()

optimizer_video_mi_net.step()

lld_audio_loss = -Audio_mi_net.loglikeli(audio_vq, audio_encoder_result)

if is_train:

lld_audio_loss.backward()

optimizer_audio_mi_net.step()

return optimizer_video_mi_net, lld_video_loss, optimizer_audio_mi_net, lld_audio_loss

def mi_second_forward(audio_feature, visual_feature, Encoder, Video_mi_net, Audio_mi_net, Decoder, is_train):

video_encoder_result, video_club_feature, audio_encoder_result, \

video_vq, audio_vq, audio_embedding_loss, video_embedding_loss, cmcm_loss = Encoder(audio_feature, visual_feature, is_train)

mi_video_loss = Video_mi_net.mi_est(video_vq, video_club_feature)

mi_audio_loss = Audio_mi_net.mi_est(audio_vq, audio_encoder_result)

video_recon_loss, audio_recon_loss, video_class, audio_class \

= Decoder(visual_feature, audio_feature, video_encoder_result, audio_encoder_result, video_vq, audio_vq)

return audio_embedding_loss, video_embedding_loss, mi_audio_loss, mi_video_loss, \

audio_recon_loss, video_recon_loss, audio_class, video_class, cmcm_loss

if __name__ == '__main__':

main()

about lr, I have try:

- single GPU’s lr

- single GPU’s lr * multiGPU’num, like lr * 3

- lr * sqer(3)

It seems like no.1 is better

single machine, multi processes