Update: I am not sure whether this is an error due to MLflow, the Azure cluster, or PyTorch. Please see my follow-up issue here: [BUG] extra ghost runs due to failed connection retries · Issue #8238 · mlflow/mlflow · GitHub. In summary, this problem doesn't happen if I use single-node multi-GPU for DDP.

Any thoughts on how to fix this WARNING? I am also not 100% sure that this DDP code for training and evaluating a pre-trained ResNet50 on CIFAR10 is correct. For example, I am not 100% sure the use of all_reduce here is correct:

# Average the test accuracy across all processes
correct = torch.tensor(correct, dtype=torch.int8)
correct = correct.to(device)
torch.distributed.all_reduce(correct, op=torch.distributed.ReduceOp.SUM)
total = torch.tensor(total, dtype=torch.torch.int8)
total = total.to(device)
torch.distributed.all_reduce(total, op=torch.distributed.ReduceOp.SUM)
test_accuracy = 100 * correct / total
test_accuracy /= world_size
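
One possible cause of a nonsensical accuracy with this snippet is the `int8` dtype: it only holds values from -128 to 127, so a SUM across many ranks can overflow. The plain-Python sketch below mimics that with the numbers from this post (4 nodes × 4 processes, batch size 128, evaluation stopped after 6 batches). It assumes the overflow wraps around in two's complement, which is not verified here for NCCL; `wrap_int8` and `global_accuracy` are hypothetical helper names. It also illustrates that once both `correct` and `total` are summed over ranks, their ratio is already the global accuracy, so a further division by `world_size` would shrink it.

```python
def wrap_int8(value: int) -> int:
    """Wrap an integer into the signed 8-bit range [-128, 127] (assumed overflow behaviour)."""
    return (value + 128) % 256 - 128


def global_accuracy(correct_per_rank, total_per_rank) -> float:
    """Accuracy in percent from per-rank counts: sum both, then divide once."""
    return 100.0 * sum(correct_per_rank) / sum(total_per_rank)


# Numbers taken from the script and pipeline.yaml in this post.
world_size = 4 * 4                           # instance_count * process_count_per_instance
per_rank_batch = max(128 // world_size, 1)   # batch_size // world_size
per_rank_total = 6 * per_rank_batch          # the evaluation loop breaks after 6 batches

true_total = world_size * per_rank_total     # what the SUM should produce
int8_total = wrap_int8(true_total)           # what an 8-bit signed integer would hold

print(true_total)   # 768
print(int8_total)   # 0, so `correct / total` would divide by zero

# Hypothetical per-rank result: every rank gets 36 of its 48 samples right.
acc = global_accuracy([36] * world_size, [per_rank_total] * world_size)
print(acc)                # 75.0
print(acc / world_size)   # 4.6875, the effect of the extra division
```

If this is indeed the cause, a wider dtype such as `torch.int64` for both tensors would avoid the wrap-around.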

Also, what about the loss and running loss? Do we need to use all_reduce for them as well?
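
On the loss question: DDP averages gradients across processes during `backward()`, but `loss` and `running_loss` stay local to each process, so an all_reduce would only be needed if the logged value should describe all ranks. Note also that `running_loss` in the script is a sum of per-batch mean losses, not a mean. The sketch below is plain Python and only does the arithmetic; it assumes 16 processes with a per-process batch size of 8, as configured in this post, and `global_mean` is a hypothetical helper that stands in for summing a `[loss_sum, sample_count]` tensor with all_reduce.

```python
import math

dataset_size = 50_000     # CIFAR-10 training images
world_size = 16           # 4 nodes x 4 processes, as in pipeline.yaml
per_rank_batch = 8        # 128 // world_size

# DistributedSampler gives each rank ceil(N / world_size) samples by default.
samples_per_rank = math.ceil(dataset_size / world_size)
batches_per_rank = math.ceil(samples_per_rank / per_rank_batch)

# "[Epoch 1] loss: 572.919" is a sum over the batches of one rank.
epoch1_running_loss = 572.919
mean_batch_loss = epoch1_running_loss / batches_per_rank

print(samples_per_rank)            # 3125
print(batches_per_rank)            # 391
print(round(mean_batch_loss, 3))   # 1.465


def global_mean(local_sums, local_counts):
    """Sample-weighted mean over ranks: sum numerators and denominators, divide once."""
    return sum(local_sums) / sum(local_counts)


# Hypothetical per-rank (sum of per-sample losses, sample count) pairs.
print(global_mean([10.0, 30.0], [10, 10]))   # 2.0
```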

import time

import torch

import torch.distributed as dist

import torch.nn as nn

import torch.optim as optim

import torchvision

import torchvision.transforms as transforms

import matplotlib.pyplot as plt

import numpy as np

import mlflow

import os

import datetime

import configparser

import logging

import argparse

from PIL import Image

import ssl

ssl._create_default_https_context = ssl._create_unverified_context

start_time = time.time()

# Set the seed for reproducibility

torch.manual_seed(42)

# Set up the data loading parameters

batch_size = 128

num_epochs = 100

num_workers = 4

pin_memory = True

# Get the world size and rank to determine the process group

world_size = int(os.environ['WORLD_SIZE'])

world_rank = int(os.environ['RANK'])

local_rank = int(os.environ['LOCAL_RANK'])

is_distributed = world_size > 1

if is_distributed:

batch_size = batch_size // world_size

batch_size = max(batch_size, 1)

# Set the backend to NCCL for distributed training

dist.init_process_group(backend="nccl",

init_method="env://",

world_size=world_size,

rank=world_rank)

# Set the device to the current local rank

torch.cuda.set_device(local_rank)

device = torch.device('cuda', local_rank)

dist.barrier()

# Define the transforms for the dataset

transform_train = transforms.Compose([
    transforms.RandomCrop(32, padding=4),
    transforms.RandomHorizontalFlip(),
    transforms.ToTensor(),
    transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))
])

transform_test = transforms.Compose([
    transforms.ToTensor(),
])

# Load the CIFAR-10 dataset

data_root = './data_' + str(world_rank)

train_dataset = torchvision.datasets.CIFAR10(root=data_root, train=True, download=True, transform=transform_train)

train_sampler = torch.utils.data.distributed.DistributedSampler(train_dataset, num_replicas=world_size, rank=world_rank)

train_loader = torch.utils.data.DataLoader(train_dataset, batch_size=batch_size, shuffle=(train_sampler is None), num_workers=num_workers, pin_memory=pin_memory, sampler=train_sampler)

test_dataset = torchvision.datasets.CIFAR10(root=data_root, train=False, download=True, transform=transform_test)

test_loader = torch.utils.data.DataLoader(test_dataset, batch_size=batch_size, shuffle=False, num_workers=num_workers, pin_memory=pin_memory)

# Define the ResNet50 model

model = torchvision.models.resnet50(pretrained=True)

num_features = model.fc.in_features

model.fc = nn.Linear(num_features, 10)

# Move the model to the GPU

model = model.to(device)

# Wrap the model with DistributedDataParallel

if is_distributed:
    model = nn.parallel.DistributedDataParallel(model, device_ids=[local_rank], output_device=local_rank)

# Define the loss function and optimizer

criterion = nn.CrossEntropyLoss()

optimizer = optim.Adam(model.parameters(), lr=0.001)

# Train the model for the specified number of epochs

for epoch in range(num_epochs):
    running_loss = 0.0
    train_sampler.set_epoch(epoch) ### why is this line necessary??
    for batch_idx, (inputs, labels) in enumerate(train_loader):
        inputs, labels = inputs.to(device), labels.to(device)
        optimizer.zero_grad()
        outputs = model(inputs)
        loss = criterion(outputs, labels)
        loss.backward()
        optimizer.step()
        running_loss += loss.item()
    print('[Epoch %d] loss: %.3f' % (epoch + 1, running_loss))
    # Log the loss and running loss as MLFlow metrics
    mlflow.log_metric("loss", loss.item())
    mlflow.log_metric("running loss", running_loss)

dist.barrier()

# Save the trained model

if world_rank == 0:

checkpoints_path = "train_checkpoints"

os.makedirs(checkpoints_path, exist_ok=True)

torch.save(model.state_dict(), '{}/{}-{}.pth'.format(checkpoints_path, 'resnet50_cifar10', world_rank))

mlflow.pytorch.log_model(model, "resnet50_cifar10_{}.pth".format(world_rank))

# mlflow.log_artifact('{}/{}-{}.pth'.format(checkpoints_path, 'resnet50_cifar10', world_rank), artifact_path="model_state_dict")

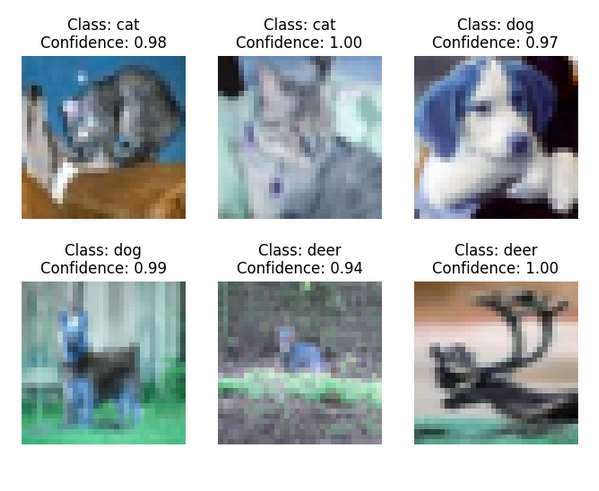

# Evaluate the model on the test set and save inference on 6 random images

correct = 0

total = 0

with torch.no_grad():
    fig, axs = plt.subplots(2, 3, figsize=(8, 6), dpi=100)
    axs = axs.flatten()
    count = 0
    for data in test_loader:
        if count == 6:
            break
        inputs, labels = data
        inputs, labels = inputs.to(device), labels.to(device)
        outputs = model(inputs)
        _, predicted = torch.max(outputs.data, 1)
        total += labels.size(0)
        correct += (predicted == labels).sum().item()
        # Save the inference on the 6 random images
        if count < 6:
            image = np.transpose(inputs[0].cpu().numpy(), (1, 2, 0))
            confidence = torch.softmax(outputs, dim=1)[0][predicted[0]].cpu().numpy()
            class_name = test_dataset.classes[predicted[0]]
            axs[count].imshow(image)
            axs[count].set_title(f'Class: {class_name}\nConfidence: {confidence:.2f}')
            axs[count].axis('off')
            count += 1

# Average the test accuracy across all processes

correct = torch.tensor(correct, dtype=torch.int8)

correct = correct.to(device)

torch.distributed.all_reduce(correct, op=torch.distributed.ReduceOp.SUM)

total = torch.tensor(total, dtype=torch.torch.int8)

total = total.to(device)

torch.distributed.all_reduce(total, op=torch.distributed.ReduceOp.SUM)

test_accuracy = 100 * correct / total

test_accuracy /= world_size

print('Test accuracy: %.2f %%' % test_accuracy)

# Save the plot with the 6 random images and their predicted classes and prediction confidence

test_img_file_name = 'test_images_' + str(world_rank) + '.png'

plt.savefig(test_img_file_name)

# Log the test accuracy and elapsed time to MLflow

mlflow.log_metric("test accuracy", test_accuracy)

end_time = time.time()

elapsed_time = end_time - start_time

print('Elapsed time: ', elapsed_time)

mlflow.log_metric("elapsed time", elapsed_time)

# Save the plot with the 6 random images and their predicted classes and prediction confidence as an artifact in MLflow

image = Image.open(test_img_file_name)

image = image.convert('RGBA')

image_buffer = np.array(image)

image_buffer = image_buffer[:, :, [2, 1, 0, 3]]

image_buffer = np.ascontiguousarray(image_buffer)

artifact_file_name = "inference_on_test_images_" + str(world_rank) + ".png"

mlflow.log_image(image_buffer, artifact_file=artifact_file_name)

# End the MLflow run

if mlflow.active_run():

mlflow.end_run()

dist.destroy_process_group()
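
On the `set_epoch` question in the training loop above: the PyTorch documentation says that in distributed mode `set_epoch()` has to be called at the start of each epoch for shuffling to work across epochs, otherwise the same ordering is always used. The sketch below is a simplified plain-Python stand-in for that behaviour, not the PyTorch implementation (it uses `random` instead of a torch generator and skips padding); `shard_indices` is a hypothetical name.

```python
import random


def shard_indices(dataset_len, world_size, rank, epoch, seed=0):
    """Simplified stand-in for how a distributed sampler picks one rank's indices."""
    indices = list(range(dataset_len))
    # Every rank builds the same permutation because the seed depends only on the epoch.
    random.Random(seed + epoch).shuffle(indices)
    # Each rank then takes every world_size-th index, starting at its own rank.
    return indices[rank::world_size]


# Without set_epoch the epoch stays at 0, so rank 0 sees the same order each time.
stuck = [shard_indices(12, world_size=4, rank=0, epoch=0) for _ in range(3)]
print(stuck[0] == stuck[1] == stuck[2])   # True

# With set_epoch(epoch) the seed changes per epoch, so the data is reshuffled,
# while the four ranks together still cover every index exactly once.
for epoch in range(3):
    shards = [shard_indices(12, world_size=4, rank=r, epoch=epoch) for r in range(4)]
    covered = sorted(i for s in shards for i in s) == list(range(12))
    print(epoch, shards[0], covered)
```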

Here’s part of my pipeline.yaml:

resources:
  # instance_count: 1 # number of nodes
  instance_count: 4
distribution:
  type: pytorch
  # process_count_per_instance: 1 # number of gpus
  process_count_per_instance: 4
# NOTE: set env var if needed
environment_variables:
  NCCL_DEBUG: "INFO" # adjusts the level of info from NCCL tests
  # NCCL_TOPO_FILE: "/opt/microsoft/ndv4-topo.xml" # Use specific topology file for A100
  # NCCL_IB_PCI_RELAXED_ORDERING: "1" # Relaxed Ordering can greatly help the performance of Infiniband networks in virtualized environments.
  NCCL_IB_DISABLE: "1" # force disable infiniband (if set to "1")
  # NCCL_NET_PLUGIN: "none" # to force NET/Plugin off (no rdma/sharp plugin at all)
  # NCCL_NET: "Socket" # to force node-to-node comm to use Socket (slow)
  NCCL_SOCKET_IFNAME: "eth0" # to force Socket comm to use eth0 (use NCCL_NET=Socket)
  # NCCL_SOCKET_IFNAME: "lo"
  # UCX_IB_PCI_RELAXED_ORDERING: "on"
  # UCX_TLS: "tcp"
  # UCX_NET_DEVICES: "eth0" # if you have Error: Failed to resolve UCX endpoint...
  CUDA_DEVICE_ORDER: "PCI_BUS_ID" # ordering of gpus # do we need to uncomment this? why?
  TORCH_DISTRIBUTED_DEBUG: "DETAIL"

And here are the newly produced results:

b022059e50144c35858c014326950bf2000000:38:38 [0] NCCL INFO NCCL_SOCKET_IFNAME set by environment to eth0

/47505500-0004-0000-3130-444531303244/pci0004:00/0004:00:00.0/../max_link_speed, ignoring

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Topology detection : could not read /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/47505500-0004-0000-3130-444531303244/pci0004:00/0004:00:00.0/../max_link_width, ignoring

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Topology detection: network path /sys/devices/LNXSYSTM:00/LNXSYBUS:00/PNP0A03:00/device:07/VMBUS:01/000d3ae3-7594-000d-3ae3-7594000d3ae3 is not a PCI device (vmbus). Attaching to first CPU

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO KV Convert to int : could not find value of '' in dictionary, falling back to 60

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Attribute coll of node net not found

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO === System : maxWidth 5.0 totalWidth 12.0 ===

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO CPU/0 (1/1/1)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + PCI[5000.0] - NIC/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + NET[5.0] - NET/0 (0/0/5.000000)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + PCI[12.0] - GPU/100000 (0)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + PCI[12.0] - GPU/200000 (1)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + PCI[12.0] - GPU/300000 (2)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO + PCI[12.0] - GPU/400000 (3)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO ==========================================

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO GPU/100000 :GPU/100000 (0/5000.000000/LOC) GPU/200000 (2/12.000000/PHB) GPU/300000 (2/12.000000/PHB) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO GPU/200000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (0/5000.000000/LOC) GPU/300000 (2/12.000000/PHB) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO GPU/300000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (2/12.000000/PHB) GPU/300000 (0/5000.000000/LOC) GPU/400000 (2/12.000000/PHB) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO GPU/400000 :GPU/100000 (2/12.000000/PHB) GPU/200000 (2/12.000000/PHB) GPU/300000 (2/12.000000/PHB) GPU/400000 (0/5000.000000/LOC) CPU/0 (1/12.000000/PHB) NET/0 (3/5.000000/PHB)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO NET/0 :GPU/100000 (3/5.000000/PHB) GPU/200000 (3/5.000000/PHB) GPU/300000 (3/5.000000/PHB) GPU/400000 (3/5.000000/PHB) CPU/0 (2/5.000000/PHB) NET/0 (0/5000.000000/LOC)

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Pattern 4, crossNic 0, nChannels 1, speed 5.000000/5.000000, type PHB/PHB, sameChannels 1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO 0 : NET/0 GPU/0 GPU/1 GPU/2 GPU/3 NET/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Pattern 1, crossNic 0, nChannels 1, speed 6.000000/5.000000, type PHB/PHB, sameChannels 1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO 0 : NET/0 GPU/0 GPU/1 GPU/2 GPU/3 NET/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Pattern 3, crossNic 0, nChannels 0, speed 0.000000/0.000000, type NVL/PIX, sameChannels 1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Tree 0 : -1 -> 0 -> 1/8/-1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Tree 1 : 4 -> 0 -> 1/-1/-1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 00/02 : 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 01/02 : 0 1 2 3 4 5 6 7 8 9 10 11 12 13 14 15

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Ring 00 : 15 -> 0 -> 1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Ring 01 : 15 -> 0 -> 1

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Trees [0] 1/8/-1->0->-1 [1] 1/-1/-1->0->4

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Setting affinity for GPU 0 to 0fff

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 00 : 15[400000] -> 0[100000] [receive] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 01 : 15[400000] -> 0[100000] [receive] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 00 : 0[100000] -> 1[200000] via direct shared memory

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 01 : 0[100000] -> 1[200000] via direct shared memory

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Connected all rings

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 01 : 0[100000] -> 4[100000] [send] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 00 : 8[100000] -> 0[100000] [receive] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 00 : 0[100000] -> 8[100000] [send] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Channel 01 : 4[100000] -> 0[100000] [receive] via NET/Socket/0

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO Connected all trees

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO threadThresholds 8/8/64 | 128/8/64 | 8/8/512

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO 2 coll channels, 2 p2p channels, 1 p2p channels per peer

b022059e50144c35858c014326950bf2000000:38:205 [0] NCCL INFO comm 0x14dab4001240 rank 0 nranks 16 cudaDev 0 busId 100000 - Init COMPLETE

b022059e50144c35858c014326950bf2000000:38:38 [0] NCCL INFO Launch mode Parallel

Downloading https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz to ./data_0/cifar-10-python.tar.gz

0%| | 0/170498071 [00:00<?, ?it/s]

0%| | 512000/170498071 [00:00<00:33, 5064354.10it/s]

2%|▏ | 3909632/170498071 [00:00<00:07, 21994007.18it/s]

...

97%|█████████▋| 165796864/170498071 [00:04<00:00, 58049136.87it/s]

170499072it [00:04, 38095858.41it/s] Extracting ./data_0/cifar-10-python.tar.gz to ./data_0

Files already downloaded and verified

[Epoch 1] loss: 572.919

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 2] loss: 456.929

[Epoch 3] loss: 371.837

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ProtocolError('Connection aborted.', ConnectionResetError(104, 'Connection reset by peer'))': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 4] loss: 378.591

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 5] loss: 328.165

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 6] loss: 317.507

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 7] loss: 286.207

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 8] loss: 303.266

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 9] loss: 274.409

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 10] loss: 286.861

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 11] loss: 257.423

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 12] loss: 269.233

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 13] loss: 254.974

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 14] loss: 258.292

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 15] loss: 235.025

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 16] loss: 249.847

[Epoch 17] loss: 238.604

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 18] loss: 215.929

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 19] loss: 210.027

WARNING:urllib3.connectionpool:Retrying (Retry(total=3, connect=4, read=4, redirect=5, status=5)) after connection broken by 'NewConnectionError('<urllib3.connection.HTTPSConnection object at 0x14db37800790>: Failed to establish a new connection: [Errno -3] Temporary failure in name resolution')': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 82] loss: 75.608

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

[Epoch 83] loss: 73.162

b022059e50144c35858c014326950bf2000000:38:209 [0] include/socket.h:423 NCCL WARN Net : Connection closed by remote peer 10.0.0.4<52726>

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO include/socket.h:445 -> 2

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO include/socket.h:457 -> 2

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:229 -> 2

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

b022059e50144c35858c014326950bf2000000:38:209 [0] include/alloc.h:48 NCCL WARN Cuda failure 'out of memory'

b022059e50144c35858c014326950bf2000000:38:209 [0] NCCL INFO bootstrap.cc:231 -> 1

b022059e50144c35858c014326950bf2000000:38:209 [0] bootstrap.cc:279 NCCL WARN [Rem Allocator] Allocation failed (segment 0, fd 126)

[Epoch 99] loss: 63.380

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

2023/05/17 09:13:35 WARNING mlflow.utils.environment: Encountered an unexpected error while inferring pip requirements (model URI: /tmp/tmpc4iktykx/model/data, flavor: pytorch), fall back to return ['torch==1.11.0', 'cloudpickle==2.0.0']. Set logging level to DEBUG to see the full traceback.

[Epoch 100] loss: 55.532

Test accuracy: -inf %

Elapsed time: 49817.131615400314

Here’s the inference:

As you can see, there is a WARNING that could have caused a problem:

[Epoch 99] loss: 63.380

WARNING:urllib3.connectionpool:Retrying (Retry(total=4, connect=5, read=4, redirect=5, status=5)) after connection broken by 'ReadTimeoutError("HTTPSConnectionPool(host='eastus2.api.azureml.ms', port=443): Read timed out. (read timeout=120)")': /mlflow/v1.0/subscriptions/number/resourceGroups/some_name/providers/Microsoft.MachineLearningServices/workspaces/some_name/api/2.0/mlflow/runs/log-metric

2023/05/17 09:13:35 WARNING mlflow.utils.environment: Encountered an unexpected error while inferring pip requirements (model URI: /tmp/tmpc4iktykx/model/data, flavor: pytorch), fall back to return ['torch==1.11.0', 'cloudpickle==2.0.0']. Set logging level to DEBUG to see the full traceback.
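
The `log-metric` retries may be related to every one of the 16 processes calling `mlflow.log_metric` each epoch. A common pattern, sketched below, is to log only from the process with `RANK` 0 and to pass the epoch as `step`. This is an untested idea for this setup, not a confirmed fix; `log_metric_on_main` is a hypothetical helper, and the logging function is passed in so that the snippet runs without MLflow installed.

```python
import os


def is_main_process() -> bool:
    """True on the process whose RANK environment variable is 0 (or unset)."""
    return int(os.environ.get("RANK", "0")) == 0


def log_metric_on_main(log_fn, key, value, step):
    """Call log_fn(key, value, step=step) on rank 0 only; return whether it was called."""
    if not is_main_process():
        return False
    log_fn(key, value, step=step)
    return True


# Stand-in for mlflow.log_metric so the sketch runs anywhere.
recorded = []


def fake_log_metric(key, value, step=None):
    recorded.append((key, value, step))


os.environ["RANK"] = "3"
log_metric_on_main(fake_log_metric, "running_loss", 572.919, step=1)   # skipped

os.environ["RANK"] = "0"
log_metric_on_main(fake_log_metric, "running_loss", 572.919, step=1)   # logged

print(recorded)   # [('running_loss', 572.919, 1)]
```

In the training script the call would look like `log_metric_on_main(mlflow.log_metric, "running_loss", running_loss, step=epoch)`.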