Hello everyone,

Thank you in advance for your time!

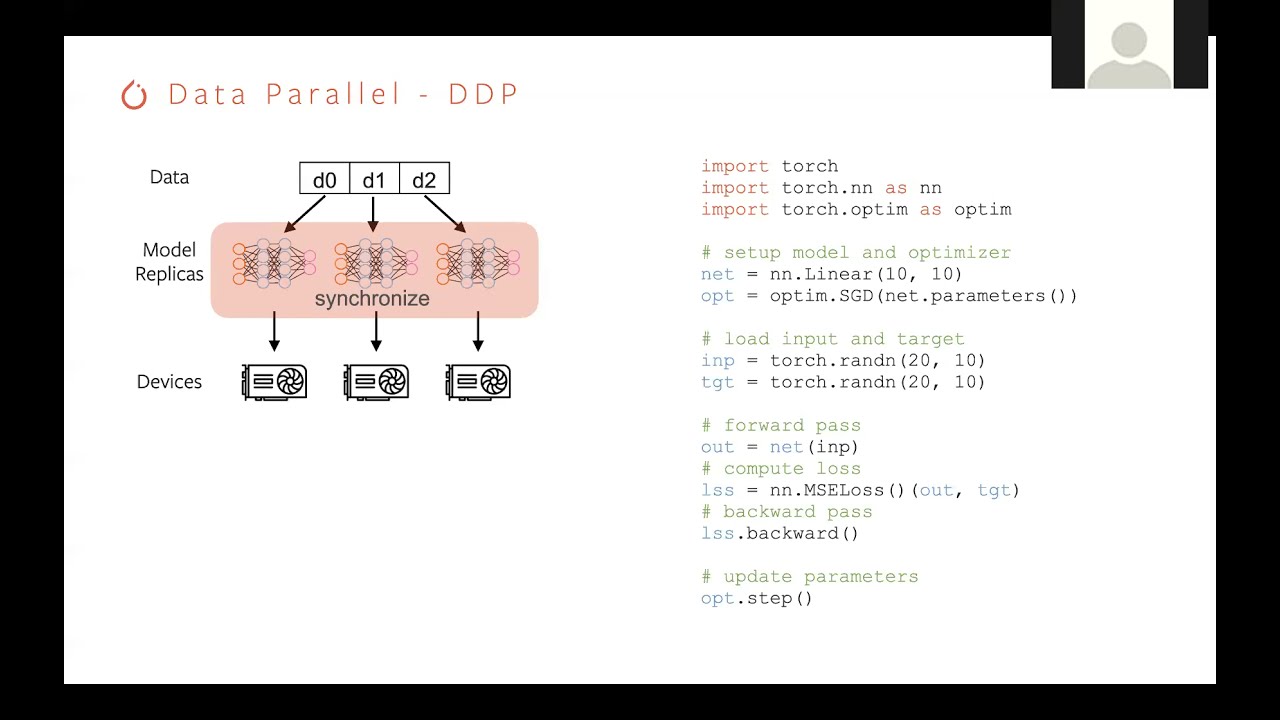

I am trying to wrap my head around all of the different distributed training libraries out there, specifically for multi-node training. I understand that PyTorch DDP can run across multiple nodes.
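For context, here is roughly what I understand a multi-node DDP training step to look like. This is only a minimal sketch under some assumptions: the toy `nn.Linear` model, the random data, and the `train_step` name are all hypothetical, and I am assuming a launcher such as `torchrun` sets `RANK`, `WORLD_SIZE`, `MASTER_ADDR`, `MASTER_PORT` (and `LOCAL_RANK`) for every process on every node.

```python
import os

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.nn.parallel import DistributedDataParallel as DDP


def train_step() -> float:
    """Run one DDP training step on a toy model and return the local loss."""
    # Assumption: the launcher has already exported RANK, WORLD_SIZE,
    # MASTER_ADDR and MASTER_PORT, so the default env:// init works.
    use_cuda = torch.cuda.is_available()
    dist.init_process_group(backend="nccl" if use_cuda else "gloo")

    if use_cuda:
        # One process per GPU; LOCAL_RANK picks the GPU on this node.
        local_rank = int(os.environ.get("LOCAL_RANK", 0))
        torch.cuda.set_device(local_rank)
        device = torch.device("cuda", local_rank)
    else:
        device = torch.device("cpu")

    model = nn.Linear(10, 1).to(device)  # hypothetical toy model
    ddp_model = DDP(model, device_ids=[device.index] if use_cuda else None)
    optimizer = torch.optim.SGD(ddp_model.parameters(), lr=0.01)

    # Random stand-in data; a real job would shard a dataset per process.
    inputs = torch.randn(8, 10, device=device)
    targets = torch.randn(8, 1, device=device)

    loss = nn.functional.mse_loss(ddp_model(inputs), targets)
    optimizer.zero_grad()
    loss.backward()  # DDP averages gradients across all processes here
    optimizer.step()

    dist.destroy_process_group()
    return loss.item()
```

As far as I can tell, the script would end with a call to `train_step()`, and the same script would be started on every node by the launcher (for example `torchrun` with `--nnodes` and `--nproc_per_node`), so the multi-node part lives in the launch setup rather than in the training code itself.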

Sorry for the vagueness here… I think I am just having trouble understanding the difference between:

1. torch.nn.parallel.DistributedDataParallel

2. DeepSpeed

3. Horovod

4. FairScale

5. NVIDIA Apex (apex.parallel.DistributedDataParallel)

6. Ray

7. RaySGD

8. MPI (totally get this for big CPU cluster jobs from like 20 years ago… how does it fit in here?)

The closest I have come to understanding how everything connects is from this blog post:

https://medium.com/distributed-computing-with-ray/faster-and-cheaper-pytorch-with-raysgd-a5a44d4fd220

They say:

> You can use one of the integrated tools for doing distributed training like Torch Distributed Data Parallel or tf.Distributed. While these are “integrated”, they are certainly not a walk in the park to use.
>
> Torch’s AWS tutorial demonstrates the many setup steps you’re going to have to follow to simply get the cluster running, and Tensorflow 2.0 has a bunch of issues.
>
> Maybe you might look at something like Horovod, but Horovod is going to require you to fight against antiquated frameworks like MPI and wait a long time for compilation when you launch.

I also watched this video which explains some of the differences:

I was wondering if someone who knows a lot more about this than I do could clear up how the eight items listed above relate to each other! Bonus points if you have a good rule of thumb for when to use which one! Our applications are pretty straightforward (NLP stuff, image-to-text stuff). We just have a ton of data and are trying to speed things up by going multi-node. Then I ran into all eight of these, got confused, and figured that clearing it up might help others as well!

Thanks again everyone.