Hello! I am trying to build a network that reconstructs images from a 1D vector. However, the images are not square (they are 15x27). What is the best way to do that? ConvTranspose2d seems to return only square images. Thank you!

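That shouldn't be the case. nn.ConvTranspose2d works with non-square inputs as well:

```python
import torch
import torch.nn as nn

conv = nn.ConvTranspose2d(1, 3, 4, 2)  # in_channels=1, out_channels=3, kernel_size=4, stride=2
x = torch.randn(1, 1, 15, 27)
out = conv(x)
print(out.shape)
# torch.Size([1, 3, 32, 56])
```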

You could also define the parameters of your transposed convolution for each spatial dimension separately, if needed.
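As a small sketch of that per-dimension option: `kernel_size` and `stride` (and likewise `padding`, `output_padding`, `dilation`) accept `(height, width)` tuples. The values `(3, 5)` and `(1, 2)` below are arbitrary and only chosen for illustration.

```python
import torch
import torch.nn as nn

# Arbitrary example values: kernel 3x5, stride 1 in height and 2 in width.
conv = nn.ConvTranspose2d(1, 3, kernel_size=(3, 5), stride=(1, 2))

x = torch.randn(1, 1, 15, 27)
out = conv(x)
print(out.shape)  # torch.Size([1, 3, 17, 57])
```

Height grows as (15 - 1) * 1 + 3 = 17 and width as (27 - 1) * 2 + 5 = 57, so the two dimensions can be scaled independently.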

Sorry if I explained that poorly. I start with a 1D vector, not with an image, and I want to end up with a 15x27 image. My input has the shape (32, 5) (32 is just the batch size), and the output should have the shape (32, 2, 15, 27), i.e. a batch of 2-channel images of size 15x27.

There are of course multiple ways to achieve the desired output shape; this would be one:

```python
import torch
import torch.nn as nn

x = torch.randn(32, 5)
x = x.unsqueeze(2).unsqueeze(2)  # (32, 5) -> (32, 5, 1, 1)

model = nn.Sequential(
    nn.ConvTranspose2d(5, 2, (2, 2), 2),
    nn.ConvTranspose2d(2, 2, (2, 4), 2),
    nn.ConvTranspose2d(2, 2, (2, 3), 2),
    nn.ConvTranspose2d(2, 2, (1, 3), 2),
)

out = model(x)
print(out.shape)
# torch.Size([32, 2, 15, 27])
```
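To see how these four layers reach 15x27: with `padding=0`, `dilation=1` and `output_padding=0` (the defaults used above), the output-size formula of `nn.ConvTranspose2d` reduces to out = (in - 1) · stride + kernel. Applying it layer by layer (stride 2 everywhere, kernel sizes taken from the model above):

$$
\begin{aligned}
\text{out} &= (\text{in} - 1)\cdot s + k \\
H&:\ 1 \to (1-1)\cdot 2 + 2 = 2 \to (2-1)\cdot 2 + 2 = 4 \to (4-1)\cdot 2 + 2 = 8 \to (8-1)\cdot 2 + 1 = 15 \\
W&:\ 1 \to (1-1)\cdot 2 + 2 = 2 \to (2-1)\cdot 2 + 4 = 6 \to (6-1)\cdot 2 + 3 = 13 \to (13-1)\cdot 2 + 3 = 27
\end{aligned}
$$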

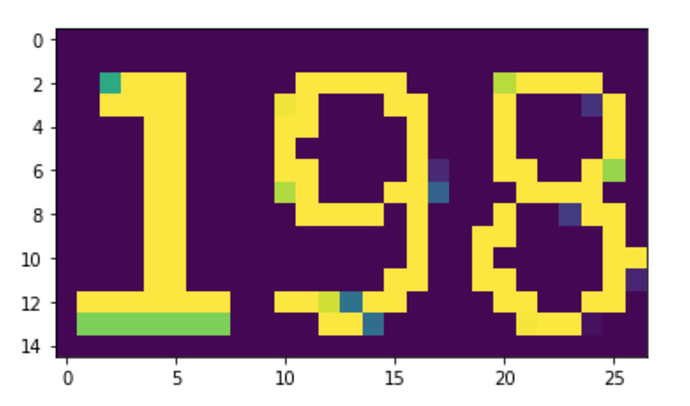

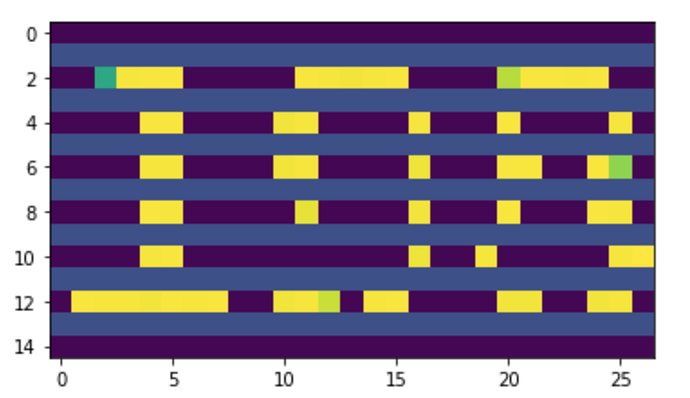

Thanks a lot for this! I tried it, I do get the right shape, and the network trains. However, the output images look a bit off (I attached an example of an input/output pair after training). With a similar architecture (basically an autoencoder), I get almost perfect reconstructions for square images. The error also seems to be systematic: if I train the network with different initializations, I get the same stripes in the output for all images. Do you know what could cause this? (I haven't used non-square images before.) Could it be caused by the way the transposed convolution ("deconvolution") works? It could just as well be a bug in my code, but it seemed worth asking here. Thank you!

Thanks for getting back!

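I guess the pattern is created by the last nn.ConvTranspose2d layer, which uses a kernel height of 1 and a stride of 2 in both spatial dimensions.

Since the kernel height (1) is smaller than the vertical stride (2), this layer skips every second output row: those rows receive no contribution from the input and only contain the bias. Sorry for missing this.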
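A minimal sketch that makes the skipped rows visible, assuming the same configuration as the last layer (kernel `(1, 3)`, stride 2). The weights are set to 1 and the bias is disabled purely for this demonstration, so an output value of exactly zero means that no input element contributed to that position.

```python
import torch
import torch.nn as nn

# Same shape configuration as the last layer: kernel height 1, stride 2.
conv = nn.ConvTranspose2d(2, 2, kernel_size=(1, 3), stride=2, bias=False)
with torch.no_grad():
    conv.weight.fill_(1.0)  # positive weights only, for the demonstration

x = torch.rand(1, 2, 8, 13) + 0.1  # strictly positive input
out = conv(x)
print(out.shape)  # torch.Size([1, 2, 15, 27])

# Odd rows get nothing, because the kernel (height 1) is shorter than the stride (2).
print(out[..., 1::2, :].abs().max().item())  # 0.0
# Even rows are fully covered (kernel width 3 >= stride 2 along the width).
print(out[..., 0::2, :].min().item() > 0)  # True
```

In a trained model these empty rows would hold only the bias value, which would explain why the stripes look the same for every image and every initialization.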

You could of course change the overall architecture and use overlapping or neighboring kernels, i.e. kernel sizes at least as large as the stride in each spatial dimension.

This is a quick and dirty fix that keeps the current architecture and increases the height of the last kernel from 1 to 2 (with padding and output_padding adjusted so that the output stays 15x27):

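```python
import torch.nn as nn

model = nn.Sequential(
    nn.ConvTranspose2d(5, 2, (2, 2), 2),
    nn.ConvTranspose2d(2, 2, (2, 4), 2),
    nn.ConvTranspose2d(2, 2, (2, 3), 2),
    # kernel height 2 == stride 2, so no output rows are skipped;
    # padding/output_padding keep the output height at 15
    nn.ConvTranspose2d(2, 2, (2, 3), stride=2, padding=(1, 0), output_padding=(1, 0)),
)
```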
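One way to check such a fix is sketched below. The `build` helper is hypothetical and only avoids repeating the shared first three layers. As a diagnostic assumption, all weights are set to 1 and all biases to 0, and the input is strictly positive, so any exact zero in the output marks a position that no input ever reached.

```python
import torch
import torch.nn as nn

def build(last_layer):
    # Hypothetical helper: the first three layers are shared, only the last one differs.
    return nn.Sequential(
        nn.ConvTranspose2d(5, 2, (2, 2), 2),
        nn.ConvTranspose2d(2, 2, (2, 4), 2),
        nn.ConvTranspose2d(2, 2, (2, 3), 2),
        last_layer,
    )

original = build(nn.ConvTranspose2d(2, 2, (1, 3), 2))
fixed = build(nn.ConvTranspose2d(2, 2, (2, 3), stride=2, padding=(1, 0), output_padding=(1, 0)))

x = torch.rand(32, 5, 1, 1) + 0.1  # strictly positive input

zero_fraction = {}
for name, model in [("original", original), ("fixed", fixed)]:
    with torch.no_grad():
        # Positive weights and zero bias: a zero output means "never written to".
        for layer in model:
            layer.weight.fill_(1.0)
            layer.bias.zero_()
        out = model(x)
    zero_fraction[name] = (out == 0).float().mean().item()
    print(name, tuple(out.shape), f"zero fraction: {zero_fraction[name]:.3f}")
# original (32, 2, 15, 27) zero fraction: 0.467
# fixed (32, 2, 15, 27) zero fraction: 0.000
```

With the original last layer, 7 of the 15 rows stay empty (7/15 ≈ 0.467); the fixed layer covers every position.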

Let me know if that works better.

Thanks a lot! It works perfectly now!

Hi,

I would like to know how you are training your network. Are you using a GAN-type approach?

I am actually using a VAE approach.

Hi @ptrblck, how do you work out the layer structure to reach that output? For example, how could I modify your example to get an output of torch.Size([32, 1, 101, 27]) or torch.Size([32, 1, 101, 35])?

What is the math behind this?

Thank you very much!

The formula to calculate the output shape is given in the docs of nn.ConvTranspose2d.
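For reference, the formula from the nn.ConvTranspose2d docs, written per spatial dimension with $s$ = `stride`, $p$ = `padding`, $d$ = `dilation`, $k$ = `kernel_size` and $op$ = `output_padding` (index 0 = height, index 1 = width):

$$
\begin{aligned}
H_{out} &= (H_{in} - 1)\,s_0 - 2\,p_0 + d_0\,(k_0 - 1) + op_0 + 1 \\
W_{out} &= (W_{in} - 1)\,s_1 - 2\,p_1 + d_1\,(k_1 - 1) + op_1 + 1
\end{aligned}
$$

As a worked check, the fixed last layer above (input 8x13, $k = (2, 3)$, $s = 2$, $p = (1, 0)$, $op = (1, 0)$, $d = 1$) gives

$$
\begin{aligned}
H_{out} &= (8 - 1)\cdot 2 - 2\cdot 1 + 1\cdot(2 - 1) + 1 + 1 = 15 \\
W_{out} &= (13 - 1)\cdot 2 - 2\cdot 0 + 1\cdot(3 - 1) + 0 + 1 = 27
\end{aligned}
$$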

You could sketch an approximate architecture by hand (e.g. start with layers that roughly double the spatial size) and then adjust individual layers if the final output size doesn't match.
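A sketch of that workflow for the 101x27 case: `convt_out` is a hypothetical helper implementing the docs formula for one dimension, and `plan` is just one possible layer plan (channel counts and kernel sizes are arbitrary choices, not a recommendation for training quality). Every kernel is at least as large as its stride, so no rows or columns are skipped. Working backwards from the target helps: for example, 101 = (50 - 1) * 2 + 3.

```python
import torch
import torch.nn as nn

def convt_out(size, kernel, stride=1, padding=0, output_padding=0, dilation=1):
    """Output length of nn.ConvTranspose2d along one spatial dimension."""
    return (size - 1) * stride - 2 * padding + dilation * (kernel - 1) + output_padding + 1

# Hypothetical plan: (in_ch, out_ch, (k_h, k_w), (s_h, s_w)) per layer.
plan = [
    (5, 4, (3, 2), (1, 1)),  # 1x1   -> 3x2
    (4, 4, (2, 2), (2, 2)),  # 3x2   -> 6x4
    (4, 4, (2, 3), (2, 2)),  # 6x4   -> 12x9
    (4, 4, (3, 3), (2, 2)),  # 12x9  -> 25x19
    (4, 4, (2, 5), (2, 1)),  # 25x19 -> 50x23
    (4, 1, (3, 5), (2, 1)),  # 50x23 -> 101x27
]

# Predict the final size with the formula before building anything.
h, w = 1, 1
for _, _, (kh, kw), (sh, sw) in plan:
    h, w = convt_out(h, kh, sh), convt_out(w, kw, sw)
print("predicted:", h, w)  # predicted: 101 27

# Build the model and confirm the prediction.
model = nn.Sequential(*[nn.ConvTranspose2d(i, o, k, s) for i, o, k, s in plan])
x = torch.randn(32, 5).unsqueeze(2).unsqueeze(2)  # (32, 5, 1, 1)
out = model(x)
print(out.shape)  # torch.Size([32, 1, 101, 27])
```

For 101x35, one option under the same scheme is to widen the kernels of the last two layers from 5 to 9, which changes the widths to 19 -> 27 -> 35.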

Note that you can add print statements to the forward method of your model to inspect the output shapes of intermediate tensors, which makes designing the model a bit easier.
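A minimal sketch of that idea: `Decoder` is a hypothetical module built from the fixed 15x27 layers from earlier in this thread, with a print statement after each layer in `forward`. The prints can be removed once the shapes are right.

```python
import torch
import torch.nn as nn

class Decoder(nn.Module):
    """Hypothetical decoder: (N, 5) vector -> (N, 2, 15, 27) image."""

    def __init__(self):
        super().__init__()
        self.layers = nn.ModuleList([
            nn.ConvTranspose2d(5, 2, (2, 2), 2),
            nn.ConvTranspose2d(2, 2, (2, 4), 2),
            nn.ConvTranspose2d(2, 2, (2, 3), 2),
            nn.ConvTranspose2d(2, 2, (2, 3), stride=2, padding=(1, 0), output_padding=(1, 0)),
        ])

    def forward(self, z):
        x = z[:, :, None, None]  # (N, 5) -> (N, 5, 1, 1), same as unsqueeze(2) twice
        print("input", tuple(x.shape))
        for i, layer in enumerate(self.layers):
            x = layer(x)
            print(f"after layer {i}:", tuple(x.shape))  # debug output
        return x

decoder = Decoder()
out = decoder(torch.randn(32, 5))
# input (32, 5, 1, 1)
# after layer 0: (32, 2, 2, 2)
# after layer 1: (32, 2, 4, 6)
# after layer 2: (32, 2, 8, 13)
# after layer 3: (32, 2, 15, 27)
```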

Thank you very much @ptrblck, this helps me a lot.