How do I write pseudo algorithm for any deep learning model?

I was going through few deep learning papers on graph and there is pseudo algorithm for network architecture, Example :

Algorithm 3 SAGE Model [11]

Require: Aˆ is a normalization of A

1: function SageModel(Aˆ, X, L)

2: Z ← X

3: for i = 1 to L do

4: Z ← σ(

Z AZˆ

W (i)

)

5: Z ← L2NormalizeRows(Z)

6: return Z

That is overall architecture described in paper.

I want to write one pseudo algorithm for one custom network, Network structure is very simple, It’s sentence classification using dynamic rnn.

Input is sentence [ batch_size x max_sentence_length x embedding_dim ]

labels are [ batch_size ]

network is send sentences to rnn and get the feature vectors after send this feature vector to one other nano network for attention part and get attention vector, after getting attention vector add one dense layer to reshape the feature vectors and get the probability values shape [ batch_size ]

So nano network is:

import numpy as np

#simple soft attention

def nano_network( logits, lstm_units ):

# just for example

logits_ = tf.reshape(logits,[-1, lstm_units])

attention_size = tf.get_variable(name='attention_size',

shape=[lstm_units,1],

dtype=tf.float32,

initializer=tf.random_uniform_initializer(-0.01,0.01))

attention_matmul = tf.matmul(logits_,attention_size)

output_reshape = tf.reshape(attention_matmul,[tf.shape(logits)[0],tf.shape(logits)[1],-1])

return tf.squeeze(output_reshape)

Simple rnn network :

import tensorflow as tf

#simple network

class Base_model(object):

def __init__(self):

tf.reset_default_graph()

# define placeholders

self.sentences = tf.placeholder(tf.float32, [12, 50, 10], name='sentences') # batch_size x max_sentence_length x dim

self.targets = tf.placeholder(tf.int32, [12], name='labels' )

with tf.variable_scope('dynamic_rnn') as scope:

cell = tf.nn.rnn_cell.LSTMCell(num_units=5, state_is_tuple=True)

outputs, _states = tf.nn.dynamic_rnn(cell, self.sentences, dtype=tf.float32)

#attention function

self.output_s = nano_network(outputs,5)

# simple linear projection

self.output = tf.layers.dense(self.output_s,2)

cross_entropy = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=self.targets,logits=self.output)

#loss calculation

loss = tf.reduce_mean(cross_entropy)

#train / network weights update

self.train = tf.train.AdamOptimizer().minimize(loss)

self.out = { 'loss':loss, 'train':self.train }

If someone want to run this code, here is random values for testing the code:

# #model train

# def rand_exec(model):

# with tf.Session() as sess:

# sess.run(tf.global_variables_initializer())

# for i in range(100):

# loss_ = sess.run(model.out,

# feed_dict = {

# model.sentences : np.random.randint(0, 10, [12,50,10]),

# model.targets : np.random.randint(0, 2, [12] )})

# print(loss_['loss'])

# # wdim, hdim, vocab_size, num_labels,threshold,relation_embeddings,relation_dim,t,adj_file

# if __name__ == '__main__':

# model = Base_model()

# out = rand_exec(model)

Now, I want to write pseudo algorithm for this simple network.

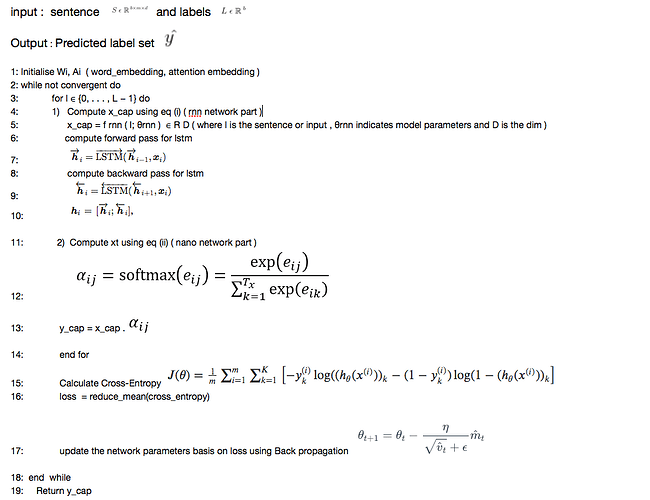

I tried to convert this network architecture into pseudo algorithm, Here is my pseudo code:

[Here is textual format of this algorithm][4]

But I am confuse if it is correct or not like the parameters update rule and other things in algorithm.

I would greatly appreciate it if anyone kindly give me some advice on how to write pseudo algorithm for this network architecture.

I know this is not pytorch code but I am not getting help from anywhere and since it’s more deep learning question than tensorflow question, that’s why I posted here.

Thank you !