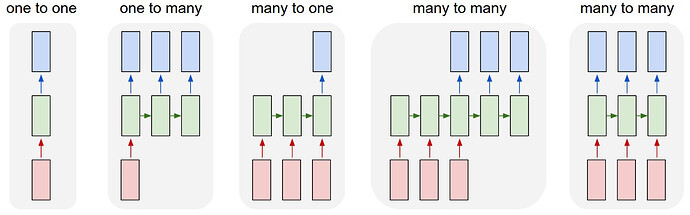

My current LSTM has a many-to-one structure (see the picture). On top of the LSTM layer, I added one dropout layer and one linear layer to produce the final output, so in PyTorch it looks like this:

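```python
# in __init__ (assumes self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True))
self.dropout = nn.Dropout(0.2)
self.ln = nn.Linear(hidden_size, 1)

# in forward: nn.LSTM returns (output, (h_n, c_n)); output is (batch, seq_len, hidden_size)
h, (h_n, c_n) = self.lstm(x)
last_h = self.dropout(h[:, -1, :])  # hidden state of the last time step
out = self.ln(last_h)               # (batch, 1)
```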
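For reference, a self-contained version of the many-to-one model described above might look like the sketch below. The class name `ManyToOneLSTM`, the sizes, and `batch_first=True` are assumptions for illustration. Note that `nn.LSTM` returns `(output, (h_n, c_n))`, so the per-step hidden states live in `output`, not in `h_n`.

```python
import torch
import torch.nn as nn


class ManyToOneLSTM(nn.Module):
    """Hypothetical many-to-one model: LSTM -> dropout -> linear on the last step."""

    def __init__(self, input_size: int, hidden_size: int):
        super().__init__()
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.dropout = nn.Dropout(0.2)
        self.ln = nn.Linear(hidden_size, 1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        # h holds the hidden state of every time step: (batch, seq_len, hidden_size)
        h, (h_n, c_n) = self.lstm(x)
        last_h = self.dropout(h[:, -1, :])  # (batch, hidden_size)
        return self.ln(last_h)              # (batch, 1)


model = ManyToOneLSTM(input_size=8, hidden_size=16)
x = torch.randn(4, 30, 8)  # 4 sequences, 30 time steps, 8 features each
y = model(x)
print(y.shape)  # torch.Size([4, 1])
```

For a single-layer, unidirectional LSTM on unpadded input, `h[:, -1, :]` is the same tensor as `h_n[-1]`, so either can feed the linear layer.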

Now I want to modify my LSTM into a many-to-many model; let’s say many-to-10.

Q1. Is it correct to add one dropout layer and one dense layer over the outputs of the last 10 cells? Something like this:

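```python
self.dropout = nn.Dropout(0.2)
self.ln = nn.Linear(hidden_size * 10, 10)

h, _ = self.lstm(x)                           # (batch, seq_len, hidden_size)
last_h = self.dropout(h[:, -10:, :])          # last 10 time steps: (batch, 10, hidden_size)
out = self.ln(last_h.reshape(x.size(0), -1))  # flatten to (batch, 10 * hidden_size) -> (batch, 10)
```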
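A runnable sketch of the Q1 idea, assuming `batch_first=True` and input sequences at least 10 steps long (the class name `ManyToTenFlatLSTM` and the `n_out` parameter are hypothetical). Selecting the last 10 steps needs the slice `-10:`; a plain `-10` picks only the single step that is 10th from the end. The 10 hidden states are then flattened so they match `nn.Linear(hidden_size * 10, 10)`.

```python
import torch
import torch.nn as nn


class ManyToTenFlatLSTM(nn.Module):
    """Hypothetical Q1 variant: one dropout + one linear over the flattened last n_out steps."""

    def __init__(self, input_size: int, hidden_size: int, n_out: int = 10):
        super().__init__()
        self.n_out = n_out
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        self.dropout = nn.Dropout(0.2)
        self.ln = nn.Linear(hidden_size * n_out, n_out)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h, _ = self.lstm(x)                            # (batch, seq_len, hidden_size)
        last_h = self.dropout(h[:, -self.n_out:, :])   # (batch, n_out, hidden_size)
        flat = last_h.flatten(start_dim=1)             # (batch, n_out * hidden_size)
        return self.ln(flat)                           # (batch, n_out)


model = ManyToTenFlatLSTM(input_size=8, hidden_size=16)
y = model(torch.randn(4, 30, 8))
print(y.shape)  # torch.Size([4, 10])
```

One design consequence worth weighing: with a single flattened linear layer, every one of the 10 outputs depends on all 10 hidden states, rather than output *i* depending only on step *i*.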

Q2. Or should I add a separate dropout layer and dense layer for each cell's output (i.e., 10 dropout layers and 10 dense layers)? If so, how can I implement them in code?
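A minimal sketch of the Q2 idea, under the same assumptions as before (`batch_first=True`, at least 10 time steps; all class and parameter names are hypothetical). Separate dense layers can be kept in an `nn.ModuleList` so their parameters are registered with the model; a plain Python list would hide them from `model.parameters()`. `nn.Dropout` has no parameters, so a single instance applied to all 10 steps already draws an independent mask per element, which behaves like 10 separate `Dropout(0.2)` layers. The `shared_head=True` option shows the common alternative of one `nn.Linear` applied to every step, which works because `nn.Linear` acts on the last dimension.

```python
import torch
import torch.nn as nn


class ManyToTenLSTM(nn.Module):
    """Hypothetical Q2 variant: one linear head per output step (or one shared head)."""

    def __init__(self, input_size: int, hidden_size: int, n_out: int = 10,
                 shared_head: bool = False):
        super().__init__()
        self.n_out = n_out
        self.shared_head = shared_head
        self.lstm = nn.LSTM(input_size, hidden_size, batch_first=True)
        # Parameter-free: one module gives an independent mask for every element.
        self.dropout = nn.Dropout(0.2)
        if shared_head:
            self.head = nn.Linear(hidden_size, 1)
        else:
            # ModuleList registers each head's weights with the parent module.
            self.heads = nn.ModuleList(
                [nn.Linear(hidden_size, 1) for _ in range(n_out)]
            )

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        h, _ = self.lstm(x)                            # (batch, seq_len, hidden_size)
        last_h = self.dropout(h[:, -self.n_out:, :])   # (batch, n_out, hidden_size)
        if self.shared_head:
            # Same weights for every step: (batch, n_out, 1) -> (batch, n_out)
            return self.head(last_h).squeeze(-1)
        # Head i sees only the hidden state of step i and returns (batch, 1)
        outs = [head(last_h[:, i, :]) for i, head in enumerate(self.heads)]
        return torch.cat(outs, dim=1)                  # (batch, n_out)


x = torch.randn(4, 30, 8)
per_step = ManyToTenLSTM(input_size=8, hidden_size=16)
shared = ManyToTenLSTM(input_size=8, hidden_size=16, shared_head=True)
print(per_step(x).shape, shared(x).shape)  # torch.Size([4, 10]) torch.Size([4, 10])
```

The per-step version has 10 × (hidden_size + 1) head parameters versus hidden_size + 1 for the shared head; which one suits the task is a modeling choice rather than something the sketch settles.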