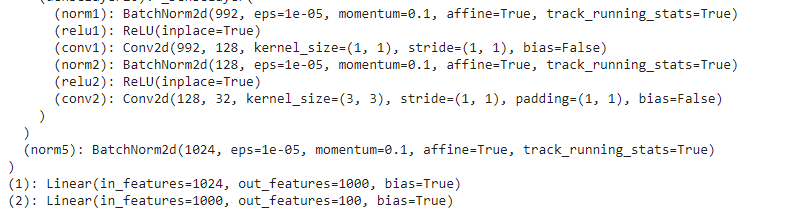

These are the last layers of DenseNet-121 from the PyTorch Hub models. I added an extra linear layer after the existing linear layer as follows:

```python
import torch
import torch.nn as nn

model = torch.hub.load('pytorch/vision:v0.6.0', 'densenet121', pretrained=True)

modules = list(model.children())

new = nn.Sequential(*modules,
                    nn.Linear(model.classifier.out_features, 100))
```

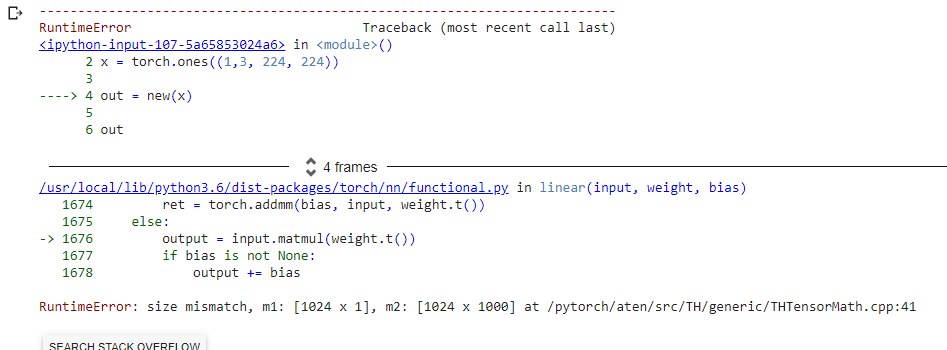

But when I run

```python
x = torch.ones((1, 3, 224, 224))
out = new(x)
out
```

I get the following error:

Why so? Aren’t the dimensions right here?

The same thing happens when I add a new layer to AlexNet, but it works if I change the dimensions of the existing layers instead.