Hello,

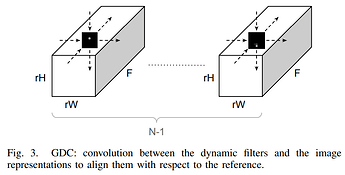

I’m implementing a CNN that aligns multiple video frames using a dynamic filter network (DFN).

In my implementation, the DFN estimates convolution filters of size [batch x 3 (depth, i.e. the number of input frames) x filter_height x filter_width], and these filters are used to align the three input frames by convolution.

Given a feature tensor of size [8 x 128 x 3 x 32 x 32], generated from the three input frames with nn.Conv3d, and estimated filters of size [8 x 3 x 9 x 9], I tried to use nn.functional.conv3d to apply a depthwise convolution to the feature maps of each input frame as follows:

```python
import torch
from torch import nn

# estimated filters: [8, 3, 9, 9]
filters = torch.unsqueeze(filters, dim=1)  # [8, 1, 3, 9, 9]
filters = filters.repeat(1, 128, 1, 1, 1)  # [8, 128, 3, 9, 9]
f_sh = filters.shape
# Flatten batch-major (row index = b * 128 + c) so that each filter lines up
# with the matching channel of x_in after the reshape below.
filters = torch.reshape(filters, (f_sh[0] * f_sh[1], 1, f_sh[2], f_sh[3], f_sh[4]))  # [8*128, 1, 3, 9, 9]

# input tensor to be aligned
sh = x_in.shape  # [8, 128, 3, 32, 32]
x_in = torch.reshape(x_in, (1, sh[0] * sh[1], sh[2], sh[3], sh[4]))  # [1, 8*128, 3, 32, 32]

# One group per (sample, channel) pair; output is [1, 8*128, 1, 28, 28]
temp_out = nn.functional.conv3d(x_in, filters, stride=(1, 1, 1), padding=(0, 2, 2), groups=8 * 128)
```

It runs without errors, but I would like to know whether it is implemented correctly.
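One way to check a grouped-convolution formulation of this kind is to compare it against a plain per-sample loop on small random tensors. The following is only a minimal sketch: the function names `grouped_dynamic_conv` and `looped_reference` and the reduced tensor sizes are hypothetical, chosen for illustration, and it assumes the filters are flattened in the same batch-major order (index `b * C + c`) as the input channels.

```python
import torch
import torch.nn.functional as F


def grouped_dynamic_conv(x, filters, padding=(0, 2, 2)):
    """Apply filters[b] to every channel of x[b] with one grouped conv3d call."""
    B, C, D, H, W = x.shape
    _, Df, Kh, Kw = filters.shape
    # Batch-major weights: row b * C + c holds filters[b].
    w = filters.unsqueeze(1).repeat(1, C, 1, 1, 1).reshape(B * C, 1, Df, Kh, Kw)
    # Fold the batch into the channel axis with the same b * C + c ordering.
    x_flat = x.reshape(1, B * C, D, H, W)
    out = F.conv3d(x_flat, w, padding=padding, groups=B * C)
    return out.reshape(B, C, *out.shape[2:])


def looped_reference(x, filters, padding=(0, 2, 2)):
    """Reference implementation: one depthwise conv3d call per sample."""
    B, C = x.shape[:2]
    outs = []
    for b in range(B):
        w_b = filters[b][None, None].repeat(C, 1, 1, 1, 1)  # [C, 1, Df, Kh, Kw]
        outs.append(F.conv3d(x[b:b + 1], w_b, padding=padding, groups=C))
    return torch.cat(outs, dim=0)


torch.manual_seed(0)
x = torch.randn(2, 4, 3, 12, 12)   # small stand-in for [8, 128, 3, 32, 32]
filters = torch.randn(2, 3, 5, 5)  # small stand-in for [8, 3, 9, 9]

out_grouped = grouped_dynamic_conv(x, filters)
out_looped = looped_reference(x, filters)
print(out_grouped.shape)
print(torch.allclose(out_grouped, out_looped, atol=1e-4))
```

If the two outputs disagree, the usual suspect is a mismatch between the order in which the filters and the input channels are flattened.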

Could you suggest how to perform the depthwise convolution effectively using nn.functional.conv3d?

Thank you.