Hi, I started learning PyTorch after taking the Machine Learning course on Coursera.

Reading the docs, I find that I'm unfamiliar with the terms and with the logic of how an nn is created.

For example this code:

    def forward(self, x):
        x = self.pool(F.relu(self.conv1(x)))
        x = self.pool(F.relu(self.conv2(x)))
        x = x.view(-1, 16 * 5 * 5)
        x = F.relu(self.fc1(x))
        x = F.relu(self.fc2(x))
        x = self.fc3(x)
        return x

What is relu? Why do we need to call it on both conv layers and again on fc1 and fc2? Why do we need x.view after the second pooling step? Why do we need to call fc1, fc2 and fc3? I don't get the intuition behind this forward function.

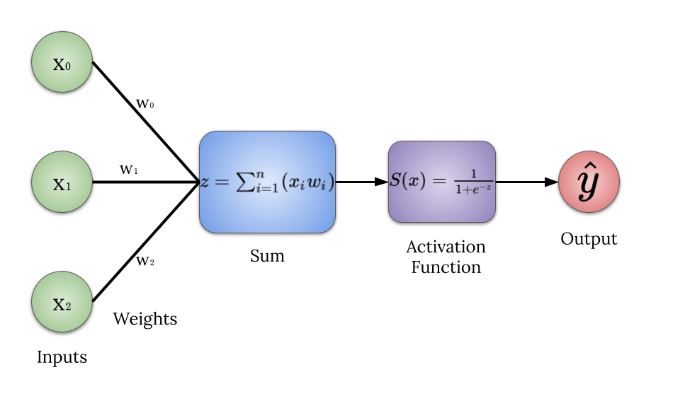
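To make the question concrete, here is a minimal sketch that traces the tensor shape through each step of this forward function. The batch of 4 random 32x32 RGB images is my assumption (the 16 * 5 * 5 in x.view only works out for that input size), and the layers are created as standalone variables instead of inside a class just to keep it short.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

# Same layers as in the network I am asking about, built outside a class.
conv1 = nn.Conv2d(3, 6, 5)
pool = nn.MaxPool2d(2, 2)
conv2 = nn.Conv2d(6, 16, 5)
fc1 = nn.Linear(16 * 5 * 5, 120)
fc2 = nn.Linear(120, 84)
fc3 = nn.Linear(84, 10)

# Assumption: a batch of 4 RGB images, 32x32 pixels each.
x = torch.randn(4, 3, 32, 32)

shapes = []
x = pool(F.relu(conv1(x)))
shapes.append(tuple(x.shape))   # after conv1 + relu + pool
x = pool(F.relu(conv2(x)))
shapes.append(tuple(x.shape))   # after conv2 + relu + pool
x = x.view(-1, 16 * 5 * 5)
shapes.append(tuple(x.shape))   # flattened to one row per image
x = F.relu(fc1(x))
shapes.append(tuple(x.shape))
x = F.relu(fc2(x))
shapes.append(tuple(x.shape))
x = fc3(x)
shapes.append(tuple(x.shape))   # one score per class

for s in shapes:
    print(s)
```

As far as I can tell, relu only replaces negative values with 0 and never changes a shape, while x.view turns each 16x5x5 block into a flat row of 400 numbers so that the Linear layers can take it.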

I found an example of a “simple” nn design with a forward function like the one below, which is how I was taught an nn is built:

    # activation function ==> S(x) = 1 / (1 + e^(-x))
    def sigmoid(self, x, deriv=False):
        if deriv:
            # here x is expected to already be a sigmoid output
            return x * (1 - x)
        return 1 / (1 + np.exp(-x))

    # data will flow through the neural network.
    def feed_forward(self):
        self.hidden = self.sigmoid(np.dot(self.inputs, self.weights))
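My guess is that an nn.Linear layer followed by an activation is the PyTorch counterpart of this np.dot plus sigmoid step. A minimal sketch to check that guess, assuming made-up sizes (4 samples, 3 inputs, 2 hidden units), random data, and a Linear layer with its bias switched off so that it matches the NumPy version:

```python
import numpy as np
import torch
import torch.nn as nn

rng = np.random.default_rng(0)
inputs = rng.standard_normal((4, 3)).astype(np.float32)   # hypothetical data
weights = rng.standard_normal((3, 2)).astype(np.float32)  # hypothetical weights

# NumPy version, as in feed_forward above.
hidden_np = 1 / (1 + np.exp(-np.dot(inputs, weights)))

# PyTorch version: nn.Linear stores its weight as (out_features, in_features),
# so the NumPy weight matrix has to be transposed before copying it in.
layer = nn.Linear(3, 2, bias=False)
with torch.no_grad():
    layer.weight.copy_(torch.from_numpy(weights.T))
hidden_torch = torch.sigmoid(layer(torch.from_numpy(inputs)))

match = np.allclose(hidden_np, hidden_torch.detach().numpy(), atol=1e-5)
print(match)
```

If that is right, then fc1, fc2 and fc3 are three such layers stacked, with relu used in place of sigmoid.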

I also don't understand the init function:

    def __init__(self):
        super(Net, self).__init__()
        self.conv1 = nn.Conv2d(3, 6, 5)
        self.pool = nn.MaxPool2d(2, 2)
        self.conv2 = nn.Conv2d(6, 16, 5)
        self.fc1 = nn.Linear(16 * 5 * 5, 120)
        self.fc2 = nn.Linear(120, 84)
        self.fc3 = nn.Linear(84, 10)

I tried going through the docs, but I'm still clueless about the logic flow of the init function. Why do we need to call Conv2d, then MaxPool2d, and then Linear?

What does "channels" mean? Why do we need channels? I only know the simple design of an input layer, a hidden layer and an output layer.
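Here is a minimal sketch of what I think the channel arguments of nn.Conv2d(3, 6, 5) refer to. It assumes a single random 32x32 RGB image as input, which is my guess at the input format and not something I took from the docs.

```python
import torch
import torch.nn as nn

# 3 input channels, 6 output channels, 5x5 kernel (same as conv1 above).
conv1 = nn.Conv2d(3, 6, 5)

# Assumption: one RGB image, so the layout is (batch, channels, height, width).
image = torch.randn(1, 3, 32, 32)
out = conv1(image)

weight_shape = tuple(conv1.weight.shape)  # (out_channels, in_channels, kH, kW)
out_shape = tuple(out.shape)              # (batch, out_channels, H_out, W_out)

print(weight_shape)
print(out_shape)
```

So it looks like the 3 matches the red, green and blue planes of the image, and the 6 is the number of filters, each producing its own output plane.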

The simple nn code, as I learned it, can be found here:

https://towardsdatascience.com/inroduction-to-neural-networks-in-python-7e0b422e6c24

Does PyTorch have a detailed explanation of each line of the nn design, aimed at a total newbie in PyTorch?

Thanks.