I am trying to train a network on a system with multiple GPUs, but I keep getting the error below and I can’t track down the cause. The NCCL logs (further down) don’t seem to list the failing broadcast op for either rank.

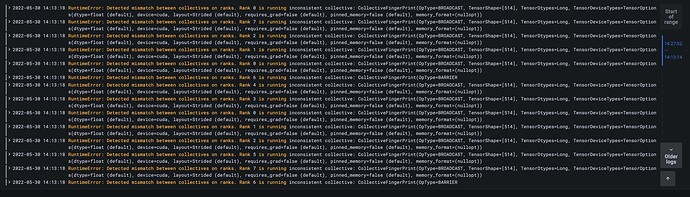

RuntimeError: Detected mismatch between collectives on ranks. Rank 0 is running inconsistent collective: CollectiveFingerPrint(OpType=BROADCAST, TensorShape=[34112], TensorDtypes=Float, TensorDeviceTypes=TensorOptions(dtype=float (default), device=cuda, layout=Strided (default), requires_grad=false (default), pinned_memory=false (default), memory_format=(nullopt))

In both ranks the inputs have the same dimensions ([18, 3, 512, 512]) and the exact same model is being used in both ranks.

The model is constructed like this:

    torch.distributed.init_process_group(backend=torch.distributed.Backend.NCCL, rank=rank, world_size=world_size)

    model = CenterNet(
        num_classes=config.dataset.num_classes,
        use_fpn=config.model.use_fpn,
        use_separable_conv=config.model.use_separable_conv,
        device=torch_device,
    ).to(torch_device)
    model = torch.nn.SyncBatchNorm.convert_sync_batchnorm(model)
    model = torch.nn.parallel.DistributedDataParallel(
        model, device_ids=[rank], output_device=rank, find_unused_parameters=False
    )

Sometimes the error appears on an all_reduce op that seems to be related to SyncBatchNorm (I will try to get a stack trace for this the next time it shows up).

Can anyone suggest how I can get more information about what is failing?
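One check that might narrow this down is to confirm that every rank really registers the same parameters and buffers, since the failing broadcast is the buffer sync in `_sync_buffers`. The following is a minimal sketch, not part of my training script: `module_fingerprint`, `diff_fingerprints` and `check_ranks_agree` are hypothetical helper names, and it assumes `torch.cuda.set_device(rank)` has already been called, because `all_gather_object` stages its data on the current CUDA device when the backend is NCCL.

```python
def module_fingerprint(module):
    """Return sorted (kind, name, shape, dtype) entries for parameters and buffers."""
    entries = []
    for name, p in module.named_parameters():
        entries.append(("param", name, tuple(p.shape), str(p.dtype)))
    for name, b in module.named_buffers():
        entries.append(("buffer", name, tuple(b.shape), str(b.dtype)))
    return sorted(entries)


def diff_fingerprints(per_rank):
    """Given one fingerprint per rank, return the entries not shared by all ranks."""
    sets = [set(fp) for fp in per_rank]
    common = set.intersection(*sets)
    return {rank: sorted(s - common) for rank, s in enumerate(sets) if s - common}


def check_ranks_agree(module):
    """Gather every rank's fingerprint and report the differences.

    This is itself a collective, so every rank must call it at the same point,
    for example right before wrapping the model in DistributedDataParallel.
    """
    import torch.distributed as dist  # imported lazily; needs an initialized process group

    gathered = [None] * dist.get_world_size()
    dist.all_gather_object(gathered, module_fingerprint(module))
    return diff_fingerprints(gathered)
```

An empty dictionary from `check_ranks_agree(model)` would suggest that the model definition is identical on all ranks, in which case the mismatch is more likely about the order in which the ranks issue collectives than about tensor shapes.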

I am using a docker image based on pytorch/pytorch:1.11.0-cuda11.3-cudnn8-devel and I have set the following environment variables:

    os.environ["MASTER_ADDR"] = "localhost"
    os.environ["MASTER_PORT"] = find_free_port()
    os.environ["NCCL_DEBUG"] = "INFO"
    os.environ["TORCH_CPP_LOG_LEVEL"] = "INFO"
    os.environ["TORCH_DISTRIBUTED_DEBUG"] = "DETAIL"
    os.environ["NCCL_DEBUG_SUBSYS"] = "COLL"
    os.environ["NCCL_DEBUG_FILE"] = "/output/nccl_logs.txt"
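With a single `NCCL_DEBUG_FILE` path, both processes append to the same file and their lines can end up interleaved. NCCL expands `%h` (hostname) and `%p` (process ID) in this variable, so a per-process file name may give cleaner logs. The sketch below shows one way to set that up; `nccl_debug_env` is a hypothetical helper and the file name pattern is only an example.

```python
import os


def nccl_debug_env(log_dir="/output"):
    """Build NCCL debug settings that write one log file per process."""
    return {
        "NCCL_DEBUG": "INFO",
        "NCCL_DEBUG_SUBSYS": "COLL",
        # NCCL itself replaces %h with the hostname and %p with the PID.
        "NCCL_DEBUG_FILE": f"{log_dir}/nccl_logs.%h.%p.txt",
    }


if __name__ == "__main__":
    # Apply in each worker before init_process_group is called.
    os.environ.update(nccl_debug_env())
    print(os.environ["NCCL_DEBUG_FILE"])
```

Each rank then gets its own file, which makes it easier to compare the sequence of collectives side by side.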

NCCL logs show

f85219af9a4b:13:13 [1] misc/ibvwrap.cc:63 NCCL WARN Failed to open libibverbs.so[.1]

f85219af9a4b:13:13 [1] NCCL INFO Broadcast: opCount 0 sendbuff 0x7f23a9ffe200 recvbuff 0x7f23a9ffe200 count 5712 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO Broadcast: opCount 0 sendbufff85219af9a4b:12:12

[0] NCCL INFO Broadcast: opCount 0 sendbuff 0x7fb120ebc000 recvbuff 0x7fb120ebc000 count 9197968 datatype 0 op f85219af9a4b:13:13 [1] NCCL INFO Broadcast: opCount 0 sendbuff 0x7f23a9de9600 recvbuff 0x7f23a9de9600 count 416 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO Broadcast: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO Broadcast: opCount 0 sendbuff 0x7fb121b77400 recvbuff 0x7fb121b77400 count 136448 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO Broadcast: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO Broadcast: opCount 0 sendbuff 0x7fb119dfea00 recvbuff 0x7fb119dfea00 count 416 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbuff 0x7f23a9ffec00 recvbuff 0x7f23a9ffee00 count 260 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbuff 0x7f23a9fff000 recvbuff 0x7f23a9fff400 count 260 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbuff 0x7f23a9fffe00 recvbuff 0x7f23b1df0800 count 132 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbuff 0x7f23b1df1000 recvbuff 0x7f23b1df1400 count 772 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df2800 recvbuff 0x7fb121df2c00 count 772 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df3a00 recvbuff 0x7fb121df3c00 count 196 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df4600 recvbuff 0x7fb121df4c00 count 1156 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df5e00 recvbuff 0x7fb121df6400 count 1156 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df4200 recvbuff 0x7fb121df4400 count 196 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df7c00 recvbuff 0x7fb121df8200 count 1156 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df8c00 recvbuff 0x7fb121df9200 count 1156 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df7800 recvbuff 0x7fb121dfa400 count 260 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dfb200 recvbuff 0x7fb121dfba00 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dfd000 recvbuff 0x7fb121dfd800 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dfb000 recvbuff 0x7fb121dfee00 count 260 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f96000 recvbuff 0x7fb121f96800 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f97e00 recvbuff 0x7fb121f98600 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dff800 recvbuff 0x7fb121f99c00 count 260 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f9a000 recvbuff 0x7fb121f9a800 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f9ba00 recvbuff 0x7fb121f9c200 count 1540 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df2a00 recvbuff 0x7fb121df2e00 count 516 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bf8200 recvbuff 0x7fb121bf9000 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bfb600 recvbuff 0x7fb121bfc400 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bf7c00 recvbuff 0x7fb121bfea00 count 516 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f9d000 recvbuff 0x7fb121f9de00 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa0400 recvbuff 0x7fb121fa1200 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bff400 recvbuff 0x7fb121bff800 count 516 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa3a00 recvbuff 0x7fb121fa4800 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df7c00 recvbuff 0x7fb121fa6e00 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbuff 0x7f23b1df1800 recvbuff 0x7f23b1df7000 count 516 datatype 0 op 0 root 0 comm 0x556062238000 [nranks=2] stream 0x55607b5f17d0

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121faa200 recvbuff 0x7fb121fab000 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121df8c00 recvbuff 0x7fb121fad600 count 3076 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dfbe00 recvbuff 0x7fb121fa9600 count 772 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb0c95c0000 recvbuff 0x7fb0c95c1400 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f96000 recvbuff 0x7fb0c95c4e00 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121dfee00 recvbuff 0x7fb121dfd000 count 772 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f99c00 recvbuff 0x7fb0c95c8800 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f9ba00 recvbuff 0x7fb0c95cb800 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bf7c00 recvbuff 0x7fb121f97e00 count 772 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa6e00 recvbuff 0x7fb121bfb600 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bf8c00 recvbuff 0x7fb121fa0400 count 4612 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121bfea00 recvbuff 0x7fb121df7c00 count 1284 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa3a00 recvbuff 0x7fb0c15d0000 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121f9ce00 recvbuff 0x7fb0c15d3e00 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb0c95c6e00 recvbuff 0x7fb0c95c4e00 count 1284 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb0c95c8800 recvbuff 0x7fb0c15d7c00 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa0400 recvbuff 0x7fb0c15dba00 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb0c95ca800 recvbuff 0x7fb121df7c00 count 1284 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa0400 recvbuff 0x7fb121f9ba00 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121fa3a00 recvbuff 0x7fb121f9ba00 count 7684 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb0c95c4e00 recvbuff 0x7fb121fa4a00 count 2564 datatype 0 op

f85219af9a4b:13:13 [1] NCCL INFO AllGather: opCount 0 sendbufff85219af9a4b:12:12 [0] NCCL INFO AllGather: opCount 0 sendbuff 0x7fb121ffd600 recvbuff 0x7fb0c14a0000 count 10244 datatype 0 op 0 root 0 comm 0x55f6e0728000 [nranks=2] stream 0x55f6df1e9900
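Since both processes write to the same file, several lines above are interleaved and cut off mid-record. A rough sketch for tallying the complete records per process follows. `ops_per_device` is a hypothetical helper, the regular expression is inferred only from the lines shown here, and it assumes the bracketed number is the CUDA device index, which matches the rank in this setup because `device_ids=[rank]`.

```python
import re
from collections import defaultdict

# Matches only complete records; truncated ones (e.g. "sendbufff852...") are skipped.
_OP = re.compile(
    r"\[(?P<device>\d+)\] NCCL INFO (?P<op>\w+): opCount \w+ "
    r"sendbuff 0x[0-9a-f]+ recvbuff 0x[0-9a-f]+ count (?P<count>\d+)"
)


def ops_per_device(log_text):
    """Return {device: [(op_name, element_count), ...]} in log order."""
    ops = defaultdict(list)
    for m in _OP.finditer(log_text):
        ops[int(m["device"])].append((m["op"], int(m["count"])))
    return dict(ops)
```

Calling `ops_per_device(open("/output/nccl_logs.txt").read())` and comparing the two lists would show where the sequences diverge, with the caveat that records lost to interleaving are missing from the tally.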

Other output (stdout/stderr)

[I debug.cpp:47] [c10d] The debug level is set to DETAIL.

[I debug.cpp:47] [c10d] The debug level is set to DETAIL.

[I socket.cpp:417] [c10d - debug] The server socket will attempt to listen on an IPv6 address.

[I socket.cpp:462] [c10d - debug] The server socket is attempting to listen on [::]:37861.

[I socket.cpp:522] [c10d] The server socket has started to listen on [::]:37861.

[I socket.cpp:582] [c10d - debug] The client socket will attempt to connect to an IPv6 address of (localhost, 37861).

[I socket.cpp:649] [c10d - trace] The client socket is attempting to connect to [localhost]:37861.

[I socket.cpp:725] [c10d] The client socket has connected to [localhost]:37861 on [localhost]:47436.

[I socket.cpp:276] [c10d - debug] The server socket on [::]:37861 has accepted a connection from [localhost]:47436.

[I socket.cpp:582] [c10d - debug] The client socket will attempt to connect to an IPv6 address of (localhost, 37861).

[I socket.cpp:649] [c10d - trace] The client socket is attempting to connect to [localhost]:37861.

[I socket.cpp:725] [c10d] The client socket has connected to [localhost]:37861 on [localhost]:47438.

[I socket.cpp:276] [c10d - debug] The server socket on [::]:37861 has accepted a connection from [localhost]:47438.

[I socket.cpp:582] [c10d - debug] The client socket will attempt to connect to an IPv6 address of (localhost, 37861).

[I socket.cpp:649] [c10d - trace] The client socket is attempting to connect to [localhost]:37861.

[I socket.cpp:725] [c10d] The client socket has connected to [localhost]:37861 on [localhost]:47440.

[I socket.cpp:276] [c10d - debug] The server socket on [::]:37861 has accepted a connection from [localhost]:47440.

[I socket.cpp:582] [c10d - debug] The client socket will attempt to connect to an IPv6 address of (localhost, 37861).

[I socket.cpp:649] [c10d - trace] The client socket is attempting to connect to [localhost]:37861.

[I socket.cpp:725] [c10d] The client socket has connected to [localhost]:37861 on [localhost]:47442.

[I socket.cpp:276] [c10d - debug] The server socket on [::]:37861 has accepted a connection from [localhost]:47442.

[I ProcessGroupNCCL.cpp:588] [Rank 1] ProcessGroupNCCL initialized with following options:

NCCL_ASYNC_ERROR_HANDLING: 0

NCCL_DESYNC_DEBUG: 0

NCCL_BLOCKING_WAIT: 0

TIMEOUT(ms): 1800000

USE_HIGH_PRIORITY_STREAM: 0

NCCL_DEBUG: INFO

[I ProcessGroupNCCL.cpp:733] [Rank 1] NCCL watchdog thread started!

[I ProcessGroupNCCL.cpp:588] [Rank 0] ProcessGroupNCCL initialized with following options:

NCCL_ASYNC_ERROR_HANDLING: 0

NCCL_DESYNC_DEBUG: 0

NCCL_BLOCKING_WAIT: 0

TIMEOUT(ms): 1800000

USE_HIGH_PRIORITY_STREAM: 0

NCCL_DEBUG: INFO

[I ProcessGroupNCCL.cpp:733] [Rank 0] NCCL watchdog thread started!

NCCL version 2.10.3+cuda11.3

[I reducer.cpp:110] Reducer initialized with bucket_bytes_cap: 26214400 first_bucket_bytes_cap: 1048576

[I reducer.cpp:110] Reducer initialized with bucket_bytes_cap: 26214400 first_bucket_bytes_cap: 1048576

[I logger.cpp:218] [Rank 0]: DDP Initialized with:

broadcast_buffers: 1

bucket_cap_bytes: 26214400

find_unused_parameters: 0

gradient_as_bucket_view: 0

has_sync_bn: 1

is_multi_device_module: 0

iteration: 0

num_parameter_tensors: 174

output_device: 0

rank: 0

total_parameter_size_bytes: 9061520

world_size: 2

backend_name: nccl

bucket_sizes: 8005776, 1055744

cuda_visible_devices: N/A

device_ids: 0

dtypes: float

initial_bucket_size_limits: 26214400, 1048576

master_addr: localhost

master_port: 37861

module_name: CenterNet

nccl_async_error_handling: N/A

nccl_blocking_wait: N/A

nccl_debug: INFO

nccl_ib_timeout: N/A

nccl_nthreads: N/A

nccl_socket_ifname: N/A

torch_distributed_debug: DETAIL

[I logger.cpp:218] [Rank 1]: DDP Initialized with:

broadcast_buffers: 1

bucket_cap_bytes: 26214400

find_unused_parameters: 0

gradient_as_bucket_view: 0

has_sync_bn: 1

is_multi_device_module: 0

iteration: 0

num_parameter_tensors: 174

output_device: 1

rank: 1

total_parameter_size_bytes: 9061520

world_size: 2

backend_name: nccl

bucket_sizes: 8005776, 1055744

cuda_visible_devices: N/A

device_ids: 1

dtypes: float

initial_bucket_size_limits: 26214400, 1048576

master_addr: localhost

master_port: 37861

module_name: CenterNet

nccl_async_error_handling: N/A

nccl_blocking_wait: N/A

nccl_debug: INFO

nccl_ib_timeout: N/A

nccl_nthreads: N/A

nccl_socket_ifname: N/A

torch_distributed_debug: DETAIL

Rank 1: Step 0: inputs: torch.Size([18, 3, 512, 512])

Epoch 0/300000

Batch 0/3257: Rank 0: Step 0: inputs: torch.Size([18, 3, 512, 512])

[I logger.cpp:382] [Rank 0 / 2] [iteration 1] Training CenterNet unused_parameter_size=0

Avg forward compute time: 1156088960

Avg backward compute time: 0

Avg backward comm. time: 0

Avg backward comm/comp overlap time: 0

[I ProcessGroupNCCL.cpp:735] [Rank 0] NCCL watchdog thread terminated normally

[I ProcessGroupNCCL.cpp:735] [Rank 1] NCCL watchdog thread terminated normally

Traceback (most recent call last):

File "./centernet.py", line 357, in <module>

torch.multiprocessing.spawn(

File "/opt/conda/lib/python3.8/site-packages/torch/multiprocessing/spawn.py", line 240, in spawn

return start_processes(fn, args, nprocs, join, daemon, start_method='spawn')

File "/opt/conda/lib/python3.8/site-packages/torch/multiprocessing/spawn.py", line 198, in start_processes

while not context.join():

File "/opt/conda/lib/python3.8/site-packages/torch/multiprocessing/spawn.py", line 160, in join

raise ProcessRaisedException(msg, error_index, failed_process.pid)

torch.multiprocessing.spawn.ProcessRaisedException:

-- Process 0 terminated with the following error:

Traceback (most recent call last):

File "/opt/conda/lib/python3.8/site-packages/torch/multiprocessing/spawn.py", line 69, in _wrap

fn(i, *args)

File "/workspace/centernet.py", line 196, in train_ddp_model

y_pred = model(inputs).to(torch_device)

File "/opt/conda/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl

return forward_call(*input, **kwargs)

File "/opt/conda/lib/python3.8/site-packages/torch/nn/parallel/distributed.py", line 955, in forward

self._sync_buffers()

File "/opt/conda/lib/python3.8/site-packages/torch/nn/parallel/distributed.py", line 1602, in _sync_buffers

self._sync_module_buffers(authoritative_rank)

File "/opt/conda/lib/python3.8/site-packages/torch/nn/parallel/distributed.py", line 1606, in _sync_module_buffers

self._default_broadcast_coalesced(authoritative_rank=authoritative_rank)

File "/opt/conda/lib/python3.8/site-packages/torch/nn/parallel/distributed.py", line 1627, in _default_broadcast_coalesced

self._distributed_broadcast_coalesced(

File "/opt/conda/lib/python3.8/site-packages/torch/nn/parallel/distributed.py", line 1543, in _distributed_broadcast_coalesced

dist._broadcast_coalesced(

RuntimeError: Detected mismatch between collectives on ranks. Rank 0 is running inconsistent collective: CollectiveFingerPrint(OpType=BROADCAST, TensorShape=[34112], TensorDtypes=Float, TensorDeviceTypes=TensorOptions(dtype=float (default), device=cuda, layout=Strided (default), requires_grad=false (default), pinned_memory=false (default), memory_format=(nullopt))