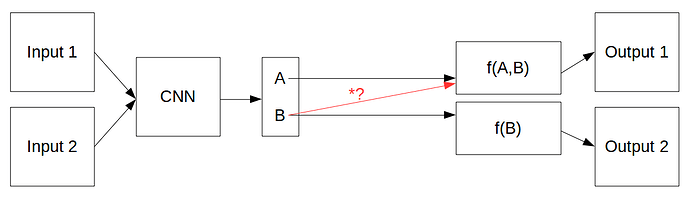

I have a quick question about this. Say you had an autoencoder like the one above that uses two learned parameters, A and B.

Say that along the top decoder branch you don't want to backprop through B; you only want to use it as a fixed input to f(A, B). Would you use B.detach() at the point marked * in the diagram? Or would that prevent the bottom branch from learning B, because B has been completely detached from the computational graph?

This feels like a case where using B.clone().detach() would still let B be learned through the lower branch, while feeding B into the top branch as a fixed input with requires_grad=False.
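One way to check this is a tiny experiment. The sketch below is a toy stand-in for the diagram, not the actual model: it assumes f(A, B) = A * B for the top branch and a hypothetical bottom branch g(B) = B ** 2, with A and B as scalar parameters. It compares the gradients when the top branch sees B directly, B.detach(), and B.clone().detach().

```python
import torch

def grads(fixed_b):
    """Return (A.grad, B.grad) for a toy two-branch graph.

    fixed_b turns B into the value fed to the top branch
    (identity, detach, or clone().detach()).
    """
    A = torch.nn.Parameter(torch.tensor(2.0))
    B = torch.nn.Parameter(torch.tensor(3.0))
    top = A * fixed_b(B)   # hypothetical f(A, B) = A * B (top branch)
    bottom = B ** 2        # hypothetical bottom branch g(B) = B ** 2
    (top + bottom).backward()
    return A.grad.item(), B.grad.item()

no_detach = grads(lambda b: b)
with_detach = grads(lambda b: b.detach())
with_clone_detach = grads(lambda b: b.clone().detach())

print(no_detach)          # (3.0, 8.0): B.grad = A (top) + 2*B (bottom)
print(with_detach)        # (3.0, 6.0): only the bottom branch contributes to B.grad
print(with_clone_detach)  # (3.0, 6.0): same gradients as detach() here
```

In this toy setup, B.detach() does not modify B itself; it returns a new tensor that shares B's storage but is cut out of the graph, so the bottom branch still contributes to B.grad. The extra clone() only matters if you modify the detached copy in place, since without it such an edit would also change B's data.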