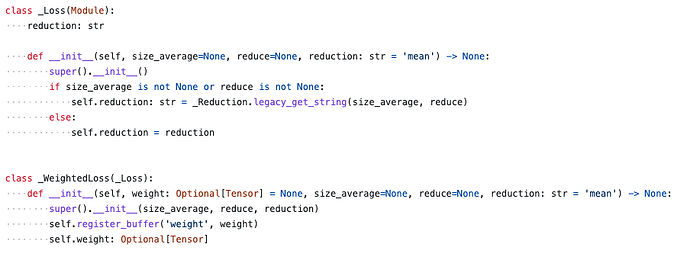

In PyTorch's implementation, the following two classes are defined in `torch.nn.modules.loss`:

I don't fully understand the code here, but some losses, like `L1Loss`, inherit from `_Loss`, while others inherit from `_WeightedLoss`.

How does a loss function that inherits from `_WeightedLoss` differ from one that inherits from `_Loss`?
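From the user's side, the visible difference is the `weight` argument. `_WeightedLoss` registers it as a module buffer, so a weight tensor is stored on the loss module, moves with `.to(device)`, and is saved in `state_dict()`. The following sketch compares `NLLLoss` with `L1Loss`. The weight values are arbitrary:

```python
import torch
import torch.nn as nn

class_weight = torch.tensor([1.0, 2.0, 0.5])  # arbitrary per-class weights

weighted = nn.NLLLoss(weight=class_weight)  # a _WeightedLoss subclass
plain = nn.L1Loss()                         # a plain _Loss subclass

# The weight is a registered buffer, so it shows up in the state dict
print(list(weighted.state_dict().keys()))  # ['weight']
print(list(plain.state_dict().keys()))     # []

# Both share the `reduction` setting that comes from _Loss
print(weighted.reduction, plain.reduction)  # mean mean
```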

Should I care about this, or is it completely irrelevant to me as a user of the library?

Okay, I think I figured it out.

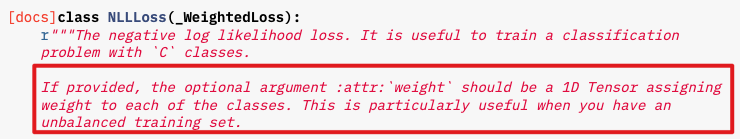

I just found this.

So `_WeightedLoss` is the base class for losses such as the negative log-likelihood loss (`NLLLoss`) and the binary cross-entropy loss (`BCELoss`). These losses measure how well predicted (log-)probabilities match the targets. Because they work on per-class probabilities, the additional `weight` argument can rescale how much each class contributes to the loss, for example to up-weight a rare class. For `BCELoss`, the documentation describes `weight` as a rescaling weight for each batch element rather than for each class.
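For `NLLLoss` with the default `reduction='mean'`, the class weights produce a weighted average. Each sample's loss is scaled by the weight of its target class, and the sum is divided by the total of those weights rather than by the batch size. The check below reproduces this by hand, using random logits and made-up weights. It also confirms that `CrossEntropyLoss` on the raw logits gives the same value, since it combines `log_softmax` with `NLLLoss`:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F

torch.manual_seed(0)
logits = torch.randn(4, 3)           # 4 samples, 3 classes (random scores)
target = torch.tensor([0, 2, 1, 2])  # ground-truth class per sample
log_probs = F.log_softmax(logits, dim=1)

class_weight = torch.tensor([1.0, 2.0, 0.5])  # arbitrary per-class weights
loss = nn.NLLLoss(weight=class_weight)(log_probs, target)

# Manual version: -log p of each sample's target class, scaled by that
# class's weight, then divided by the total weight actually applied
per_sample = -log_probs[torch.arange(len(target)), target]
w = class_weight[target]
manual = (w * per_sample).sum() / w.sum()

# CrossEntropyLoss takes raw logits and applies log_softmax internally
ce = nn.CrossEntropyLoss(weight=class_weight)(logits, target)
print(loss.item(), manual.item(), ce.item())
```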

Whereas `_Loss` is the base for plain losses between input and target values, such as the L1 loss (`L1Loss`) or the L2 loss (`MSELoss`). These are computed element-wise from each prediction and its ground-truth value, so there is no per-class weight to apply.
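By contrast, `L1Loss` and `MSELoss` reduce to simple element-wise formulas. The following sketch uses made-up tensors and reproduces both losses by hand from the differences `pred - target`:

```python
import torch
import torch.nn as nn

pred = torch.tensor([0.5, 2.0, -1.0])
target = torch.tensor([1.0, 2.0, 1.0])

l1 = nn.L1Loss()(pred, target)   # mean absolute error
l2 = nn.MSELoss()(pred, target)  # mean squared error ("L2 loss")

# The same values, computed directly from the element-wise differences
diff = pred - target
print(l1.item(), diff.abs().mean().item())
print(l2.item(), diff.pow(2).mean().item())
```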