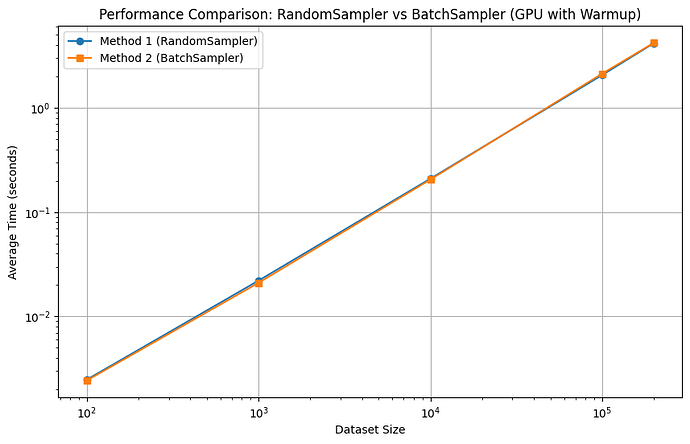

This is what I got on Colab:

Code to reproduce:

```python
import time

import matplotlib.pyplot as plt
import torch
from torch.utils.data import BatchSampler, DataLoader, Dataset, RandomSampler

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"Using device: {device}")


def sync():
    # torch.cuda.synchronize() raises on machines without CUDA, so only call it on GPU.
    if device.type == "cuda":
        torch.cuda.synchronize()


class DummyDataset(Dataset):
    def __init__(self, size):
        self.size = size

    def __len__(self):
        return self.size

    def __getitem__(self, idx):
        # Each item is created directly on the target device.
        return torch.tensor([idx], device=device)


def warmup(dataset_size, num_samples, batch_size):
    dataset = DummyDataset(dataset_size)
    random_sampler = RandomSampler(dataset, num_samples=num_samples, replacement=False)
    dataloader = DataLoader(dataset, batch_size=batch_size, sampler=random_sampler)
    for _ in range(5):  # Perform 5 warmup iterations
        for batch in dataloader:
            _ = batch.sum()
    sync()


def test_performance(dataset_size, num_samples, batch_size, num_iterations=10):
    dataset = DummyDataset(dataset_size)
    times_method1 = []
    times_method2 = []

    for _ in range(num_iterations):
        # Method 1: RandomSampler + batch_size (DataLoader does the batching)
        sync()
        start_time = time.perf_counter()  # monotonic, high-resolution clock for timing
        random_sampler = RandomSampler(dataset, num_samples=num_samples, replacement=False)
        dataloader_1 = DataLoader(dataset, batch_size=batch_size, sampler=random_sampler)
        for batch in dataloader_1:
            _ = batch.sum()
        sync()
        times_method1.append(time.perf_counter() - start_time)

        # Method 2: explicit BatchSampler wrapping a RandomSampler
        sync()
        start_time = time.perf_counter()
        random_sampler_2 = RandomSampler(dataset, num_samples=num_samples, replacement=False)
        batch_sampler = BatchSampler(random_sampler_2, batch_size=batch_size, drop_last=False)
        dataloader_2 = DataLoader(dataset, batch_sampler=batch_sampler)
        for batch in dataloader_2:
            _ = batch.sum()
        sync()
        times_method2.append(time.perf_counter() - start_time)

    return sum(times_method1) / num_iterations, sum(times_method2) / num_iterations


# Test with increasing dataset sizes
dataset_sizes = [100, 1000, 10000, 100000, 200000]
batch_size = 32
num_samples_ratio = 0.8

print("Performing warmup...")
warmup(max(dataset_sizes), int(min(dataset_sizes) * num_samples_ratio), batch_size)
print("Warmup complete. Starting tests...")

results_method1 = []
results_method2 = []
faster_counts = {"Method 1": 0, "Method 2": 0}

for size in dataset_sizes:
    num_samples = int(size * num_samples_ratio)
    time1, time2 = test_performance(size, num_samples, batch_size)
    results_method1.append(time1)
    results_method2.append(time2)

    faster = "Method 1" if time1 < time2 else "Method 2"  # ties count for Method 2
    faster_counts[faster] += 1

    print(f"Dataset size: {size}, Method 1: {time1:.6f}s, Method 2: {time2:.6f}s")
    print(f"Faster method: {faster}")
    print()

print("Overall Summary:")
if faster_counts["Method 1"] > faster_counts["Method 2"]:
    print(f"Method 1 (RandomSampler) was faster in {faster_counts['Method 1']} out of {len(dataset_sizes)} tests.")
elif faster_counts["Method 2"] > faster_counts["Method 1"]:
    print(f"Method 2 (BatchSampler) was faster in {faster_counts['Method 2']} out of {len(dataset_sizes)} tests.")
else:
    print("Both methods were equally fast overall.")

plt.figure(figsize=(10, 6))
plt.plot(dataset_sizes, results_method1, label="Method 1 (RandomSampler)", marker="o")
plt.plot(dataset_sizes, results_method2, label="Method 2 (BatchSampler)", marker="s")
plt.xscale("log")
plt.yscale("log")
plt.xlabel("Dataset Size")
plt.ylabel("Average Time (seconds)")
plt.title("Performance Comparison: RandomSampler vs BatchSampler (GPU with Warmup)")
plt.legend()
plt.grid(True)
plt.show()
```
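A quick sanity check on what the two methods actually compare: when `DataLoader` is given `sampler` together with `batch_size`, it wraps that sampler in a `BatchSampler` itself, so Method 1 and Method 2 should fetch the same indices through the same per-item `__getitem__` path. The sketch below assumes a PyTorch version that accepts `num_samples` with `replacement=False` (the script above already relies on this). `IndexDataset` and `make_loaders` are hypothetical names, and the dataset stays on the CPU so the check runs without a GPU. Seeding both samplers with identically seeded `torch.Generator` objects should make the two loaders yield identical batches.

```python
import torch
from torch.utils.data import BatchSampler, DataLoader, Dataset, RandomSampler


class IndexDataset(Dataset):
    """Hypothetical CPU-only stand-in for DummyDataset: item i is tensor([i])."""

    def __init__(self, size):
        self.size = size

    def __len__(self):
        return self.size

    def __getitem__(self, idx):
        return torch.tensor([idx])


def make_loaders(size, num_samples, batch_size, seed=0):
    """Hypothetical helper building Method 1 and Method 2 loaders with the same seed."""
    dataset = IndexDataset(size)

    # Method 1: sampler + batch_size; DataLoader creates a BatchSampler internally.
    sampler_1 = RandomSampler(dataset, num_samples=num_samples,
                              generator=torch.Generator().manual_seed(seed))
    loader_1 = DataLoader(dataset, batch_size=batch_size, sampler=sampler_1)

    # Method 2: the BatchSampler is built explicitly and passed as batch_sampler.
    sampler_2 = RandomSampler(dataset, num_samples=num_samples,
                              generator=torch.Generator().manual_seed(seed))
    batch_sampler = BatchSampler(sampler_2, batch_size=batch_size, drop_last=False)
    loader_2 = DataLoader(dataset, batch_sampler=batch_sampler)
    return loader_1, loader_2


loader_1, loader_2 = make_loaders(size=1000, num_samples=800, batch_size=32)

# Method 1's loader exposes the BatchSampler it created for itself.
print(type(loader_1.batch_sampler).__name__)

# Same number of batches, and batch-for-batch identical contents.
same = all(torch.equal(a, b) for a, b in zip(loader_1, loader_2))
print(len(loader_1), len(loader_2), same)
```

If both checks hold, the two methods go through the same batching logic, so any gap between the curves is probably dominated by run-to-run noise rather than by the choice of sampler.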