Hi, I’m using this sine wave approximation example as a basis for learning approximation. I’m new to PyTorch… Why do different BATCH_SIZE values change the results so significantly?

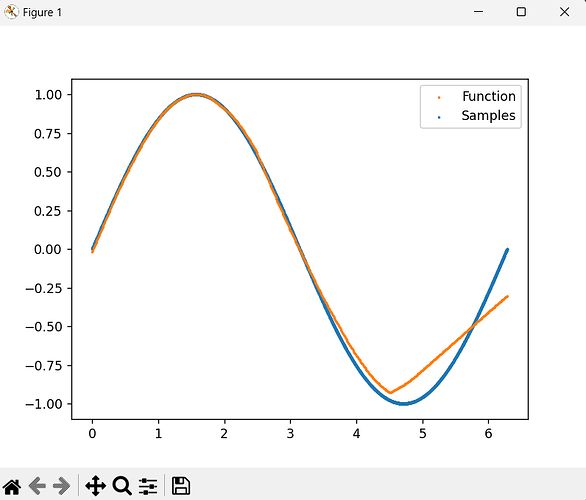

Batch size 512:

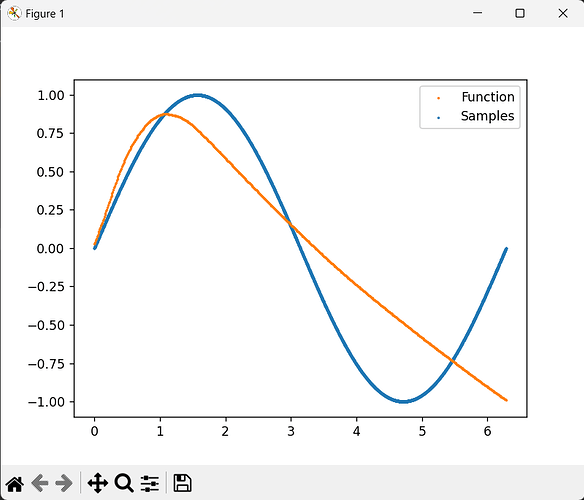

Batch size 10000 (all data points):

I’m posting the code below, but first I’ll explain the changes I made to the original:

All random seeds are fixed, and I disabled shuffling in `train_test_split`:

```python
torch.manual_seed(41)
if torch.cuda.is_available():
    torch.cuda.manual_seed_all(41)
np.random.seed(41)
...
X_train, X_val, y_train, y_val = map(torch.tensor, train_test_split(X, y, test_size=0.2, random_state=41, shuffle=False))
```
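Worth noting: `shuffle=False` only affects `train_test_split`. In the full code below, both `DataLoader`s still use `shuffle=True`, so the batches are re-ordered every epoch; with the fixed global `torch.manual_seed(41)` that order should repeat from run to run. If you want to pin the shuffle order down explicitly, one option is to pass a seeded `torch.Generator` to the `DataLoader`. A minimal sketch on a toy dataset (the helper `batch_order` is hypothetical, for illustration only):

```python
import torch
from torch.utils.data import DataLoader, TensorDataset


def batch_order(seed, n=10, batch_size=4):
    """Return the batches one epoch of a shuffling DataLoader yields (hypothetical helper)."""
    # Dedicated generator: the shuffle order depends only on `seed`
    g = torch.Generator()
    g.manual_seed(seed)
    dataset = TensorDataset(torch.arange(n))
    loader = DataLoader(dataset, batch_size=batch_size, shuffle=True, generator=g)
    # Each batch is a list holding one tensor, because TensorDataset wraps a single tensor
    return [batch[0].tolist() for batch in loader]


first = batch_order(41)
second = batch_order(41)
print(first)
print(first == second)  # same seed, same order
```

The generator only controls the sampling order; it does not change which points end up in the training split.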

I changed the learning rate to 1e-4, the size of X to 10**4, and MAX_EPOCH to 20.
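One quantity that changes with `BATCH_SIZE` even when everything else is fixed is the number of weight updates: the training loop calls `optimizer.step()` once per batch, and the training split holds 8,000 of the 10**4 points (`test_size=0.2`). A back-of-the-envelope sketch of that count (the helper `optimizer_steps` is hypothetical, just for the arithmetic):

```python
import math


def optimizer_steps(n_train, batch_size, epochs):
    """Number of optimizer.step() calls when every batch triggers one update."""
    # DataLoader keeps the last, smaller batch by default (drop_last=False)
    batches_per_epoch = math.ceil(n_train / batch_size)
    return batches_per_epoch * epochs


n_train = 8000  # 80% of the 10**4 points after the 80/20 split
for batch_size in (512, 10**4):
    print(batch_size, optimizer_steps(n_train, batch_size, epochs=20))
# 512 -> 16 batches per epoch -> 320 steps
# 10000 -> 1 batch per epoch -> 20 steps
```

Under this count, the batch-512 run gets 16 times as many updates as the full-batch run. Since Adam’s per-step change to each weight is roughly bounded by the learning rate (about 1e-4 here), 20 full-batch steps may simply not be enough to move the network far from its initialization. This is a plausible explanation rather than a verified diagnosis; giving the full-batch case the same number of steps (for example, 320 epochs) or raising `LR` for the large batch would be one way to test it.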

I also added a plot that charts the approximation over the whole range of X, to show the difference.

Full code is below…

Thanks!

```python
import torch
import numpy as np
import matplotlib.pyplot as plt
from torch import nn, optim
from torch.utils.data import TensorDataset, DataLoader
from sklearn.model_selection import train_test_split

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")

LR = 1e-4
MAX_EPOCH = 20
BATCH_SIZE = 10**4


class SineApproximator(nn.Module):
    def __init__(self):
        super(SineApproximator, self).__init__()
        # 1 -> 1024 -> 1024 -> 1 MLP with ReLU activations
        self.regressor = nn.Sequential(nn.Linear(1, 1024),
                                       nn.ReLU(inplace=True),
                                       nn.Linear(1024, 1024),
                                       nn.ReLU(inplace=True),
                                       nn.Linear(1024, 1))

    def forward(self, x):
        output = self.regressor(x)
        return output


# Fixed seeds so that runs are reproducible
torch.manual_seed(41)
if torch.cuda.is_available():
    torch.cuda.manual_seed_all(41)
np.random.seed(41)

# 10**4 samples of sin(x), with x drawn uniformly from [0, 2*pi)
X = np.random.rand(10**4) * 2 * np.pi
y = np.sin(X)

# 80/20 split without shuffling: first 8000 points for training, last 2000 for validation
X_train, X_val, y_train, y_val = map(torch.tensor, train_test_split(X, y, test_size=0.2, shuffle=False, random_state=41))

# shuffle=True here still re-orders the batches every epoch
train_dataloader = DataLoader(TensorDataset(X_train.unsqueeze(1), y_train.unsqueeze(1)), batch_size=BATCH_SIZE,
                              pin_memory=True, shuffle=True)
val_dataloader = DataLoader(TensorDataset(X_val.unsqueeze(1), y_val.unsqueeze(1)), batch_size=BATCH_SIZE,
                            pin_memory=True, shuffle=True)

model = SineApproximator().to(device)
optimizer = optim.Adam(model.parameters(), lr=LR)
criterion = nn.MSELoss(reduction="mean")

train_loss_list = list()
val_loss_list = list()

for epoch in range(MAX_EPOCH):
    print("epoch %d / %d" % (epoch + 1, MAX_EPOCH))
    model.train()

    # training loop: one optimizer step per batch
    temp_loss_list = list()
    for X_batch, y_batch in train_dataloader:
        X_batch = X_batch.type(torch.float32).to(device)
        y_batch = y_batch.type(torch.float32).to(device)
        optimizer.zero_grad()
        score = model(X_batch)
        loss = criterion(input=score, target=y_batch)
        loss.backward()
        optimizer.step()
        temp_loss_list.append(loss.detach().cpu().numpy())

    # re-evaluate the training loss after the epoch (no gradients needed)
    model.eval()
    temp_loss_list = list()
    with torch.no_grad():
        for X_batch, y_batch in train_dataloader:
            X_batch = X_batch.type(torch.float32).to(device)
            y_batch = y_batch.type(torch.float32).to(device)
            score = model(X_batch)
            loss = criterion(input=score, target=y_batch)
            temp_loss_list.append(loss.detach().cpu().numpy())

    avg_loss = np.average(temp_loss_list)
    train_loss_list.append(avg_loss)
    print("\ttrain loss: %.5f" % train_loss_list[-1])

# build a np array with all X values between their min and max values at 0.01 intervals, each value in its own array:
model.eval()
X_all = torch.tensor(np.arange(X.min(), X.max(), 0.01)).type(torch.float32).unsqueeze(1).to(device)
with torch.no_grad():
    y_prediction = model(X_all)
y_prediction = y_prediction.detach().cpu().numpy().flatten()

original = plt.scatter(X, y, s=1)
predicted = plt.scatter(X_all.detach().cpu().numpy(), y_prediction, s=1)
plt.legend((predicted, original), ("Function", "Samples"))
plt.waitforbuttonpress()
```
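To check the intuition that the number of updates, rather than the batch size itself, drives most of the difference, here is a small self-contained numpy sketch. It deliberately swaps the sine network for a plain linear model y = w*x + b trained with vanilla gradient descent on noise-free data (an assumption made so it runs instantly); otherwise it mirrors the setup: fixed epochs, fixed learning rate, reshuffling every epoch, and batch size 512 versus a batch larger than the data. The function `train_linear` is hypothetical.

```python
import numpy as np


def train_linear(X, y, batch_size, epochs, lr, seed=41):
    """Fit y ~ w*x + b with mini-batch gradient descent on MSE; return final full-data MSE."""
    rng = np.random.default_rng(seed)
    w, b = 0.0, 0.0
    n = len(X)
    for _ in range(epochs):
        order = rng.permutation(n)  # reshuffle every epoch, like shuffle=True
        for start in range(0, n, batch_size):
            idx = order[start:start + batch_size]
            err = (w * X[idx] + b) - y[idx]
            # Gradients of the batch-mean squared error with respect to w and b
            grad_w = 2.0 * np.mean(err * X[idx])
            grad_b = 2.0 * np.mean(err)
            w -= lr * grad_w
            b -= lr * grad_b
    return float(np.mean((w * X + b - y) ** 2))


rng = np.random.default_rng(0)
X = rng.random(8000)
y = 2.0 * X + 1.0  # noise-free linear target

mini = train_linear(X, y, batch_size=512, epochs=20, lr=0.1)    # 320 updates
full = train_linear(X, y, batch_size=10**4, epochs=20, lr=0.1)  # 20 updates
print(f"batch 512:   MSE {mini:.6f}")
print(f"batch 10000: MSE {full:.6f}")
```

In this toy setting the full-batch run ends with a noticeably higher error after 20 epochs, and giving it 320 epochs (the same number of updates as the batch-512 run) closes most of the gap. Whether the same holds for the 1024-unit network with Adam is something to confirm by running the original script with a matched step count.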