Hi all,

I wonder whether there is any practical way to assess or measure the level of privacy of a trained ML model using PyTorch.

I know that there are different techniques that help guarantee a certain level of privacy for ML models during training. For example, we can apply the DP-SGD algorithm, which clips the gradients and adds noise drawn from a Laplacian or Gaussian distribution (e.g. PyTorch’s Opacus library). Ensemble techniques such as PATE have also been suggested in the literature for ensuring the privacy of models.
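To make the clip-and-add-noise idea concrete, here is a minimal sketch of one DP-SGD gradient computation. It is written in plain Python so it runs without dependencies; it is not Opacus code, and the names `clip_gradient`, `dp_sgd_gradient`, `max_norm` and `noise_multiplier` are illustrative assumptions. It assumes per-sample gradients are already available as lists of floats and uses Gaussian noise.

```python
import math
import random


def clip_gradient(grad, max_norm):
    """Scale one per-sample gradient so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(g * g for g in grad))
    # Gradients already inside the norm ball are left unchanged (scale = 1).
    scale = min(1.0, max_norm / (norm + 1e-12))
    return [g * scale for g in grad]


def dp_sgd_gradient(per_sample_grads, max_norm, noise_multiplier, rng):
    """Return the noisy averaged gradient for one DP-SGD step (sketch)."""
    dim = len(per_sample_grads[0])
    # 1) Clip every per-sample gradient individually.
    clipped = [clip_gradient(g, max_norm) for g in per_sample_grads]
    # 2) Sum the clipped gradients coordinate by coordinate.
    summed = [sum(g[i] for g in clipped) for i in range(dim)]
    # 3) Add Gaussian noise with std = noise_multiplier * max_norm.
    std = noise_multiplier * max_norm
    noisy = [s + rng.gauss(0.0, std) for s in summed]
    # 4) Average over the batch.
    return [v / len(per_sample_grads) for v in noisy]


# Hypothetical toy batch of three 2-dimensional per-sample gradients.
rng = random.Random(0)
grads = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]
print(dp_sgd_gradient(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

The clipping bound limits how much any single training example can influence the update, and the noise scale is tied to that bound; the resulting epsilon and delta are then derived from the noise multiplier, the sampling rate and the number of steps by a separate privacy accountant, which this sketch does not include.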

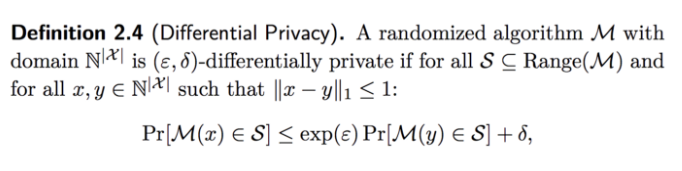

But my question is: assuming I have a simple DNN-based classifier (e.g. a digit classifier) that I have trained normally, how can I quantitatively measure the model’s privacy in terms of epsilon and delta values, based on the formal definition of differential privacy shown in the image above?
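For reference, the standard statement of $(\varepsilon, \delta)$-differential privacy is usually written as below. The symbols are the conventional ones and are assumed here rather than taken from the image: $\mathcal{M}$ is the randomized mechanism (here, the training procedure), $D$ and $D'$ are any two datasets differing in a single record, and $S$ is any set of possible outputs.

$$
\Pr[\mathcal{M}(D) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(D') \in S] + \delta
$$

Smaller values of $\varepsilon$ and $\delta$ mean the output distribution changes less when one record is swapped, so these are the two quantities being asked about.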

Best Wishes,

Jarvico