Hello,

I’m implementing a GAN based on the DCGAN tutorial here at PyTorch. My input does not have the tutorial's dimensions (64x64); I would like the network to work with inputs of roughly 61x250. My problem occurs when I increase the number of columns in the input image: the discriminator then outputs more numbers. I would like the discriminator to output one number per sample in the mini-batch, but the number of output values increases as the input image gets wider.

The code for the discriminator network:

```
import torch.nn as nn


class Discriminator(nn.Module):
    def __init__(self, ngpu, nc, ndf):
        super(Discriminator, self).__init__()
        self.ngpu = ngpu
        self.main = nn.Sequential(
            # input is (nc) x 64 x 64
            nn.Conv2d(nc, ndf, 4, 2, 1, bias=False),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf) x 32 x 32
            nn.Conv2d(ndf, ndf * 2, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 2),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*2) x 16 x 16
            nn.Conv2d(ndf * 2, ndf * 4, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 4),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*4) x 8 x 8
            nn.Conv2d(ndf * 4, ndf * 8, 4, 2, 1, bias=False),
            nn.BatchNorm2d(ndf * 8),
            nn.LeakyReLU(0.2, inplace=True),
            # state size. (ndf*8) x 4 x 4
            nn.Conv2d(ndf * 8, 1, 4, 1, 0, bias=False),
            nn.Sigmoid()
        )

    def forward(self, input):
        return self.main(input)
```
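To make the size arithmetic concrete, here is a rough sketch of how I understand each spatial dimension to shrink through the five `Conv2d` layers above. It assumes the output-size formula from the `nn.Conv2d` documentation with dilation 1; the names `conv_out`, `LAYERS`, and `discriminator_out` are hypothetical helpers of mine, not part of the tutorial.

```python
# Hypothetical helper (not from the DCGAN tutorial): output length of one
# spatial dimension after a Conv2d layer, assuming dilation=1.
def conv_out(size, kernel, stride, padding):
    return (size + 2 * padding - kernel) // stride + 1


# (kernel, stride, padding) of the five Conv2d layers in the discriminator.
LAYERS = [(4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 2, 1), (4, 1, 0)]


def discriminator_out(size):
    """Apply the formula layer by layer to one input dimension."""
    for kernel, stride, padding in LAYERS:
        size = conv_out(size, kernel, stride, padding)
    return size


for n in (64, 128, 250):
    print(n, "->", discriminator_out(n))
# 64 -> 1
# 128 -> 5
# 250 -> 12
```

If this arithmetic is right, a 64x250 input would leave a 1x12 map per sample after the last convolution, i.e. 12 numbers instead of one, which is consistent with the growth I am seeing. For 61 rows the formula gives 0, which I take to mean the final 4x4 kernel no longer fits the 3-row feature map.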

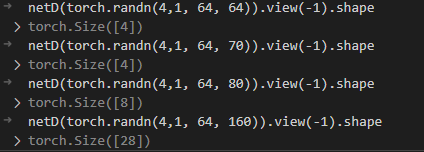

Screenshot from the VSCode debugger, illustrating that the dimension of the output increases when I add more columns to the input image.

I hope someone is able to see what I’m doing wrong. Thank you!