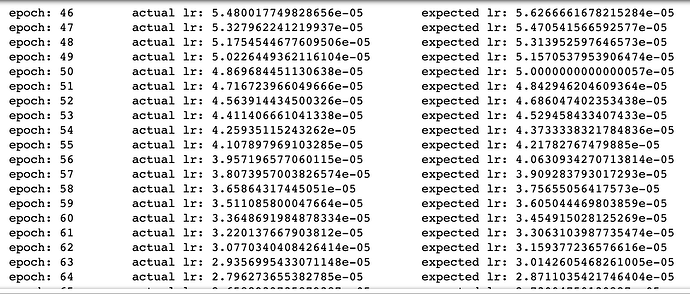

The discontinuity affects every LR value computed from epoch 46 through epoch 100.

Indeed, here is what this quick snippet printed:

    # Print every epoch whose actual LR differs from the expected one
    for i, val in enumerate(lr_first + lr):
        if val != lr_expected[i]:
            print(f'epoch: {i+1} \t actual lr: {val} \t expected lr: {lr_expected[i]}')
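One caveat with the exact `!=` comparison: if the two lists come from different computations, it can also flag differences that are only floating-point rounding. Below is a minimal sketch of the same check with a tolerance. The helper name `find_lr_mismatches`, the `rel_tol` default, and the toy values are assumptions made for illustration; they are not the lists or the schedule from this post.

```python
import math


def find_lr_mismatches(actual, expected, rel_tol=1e-9):
    """Return (epoch, actual_lr, expected_lr) for each epoch where the values differ.

    Hypothetical helper: epochs are 1-based, matching the print in the snippet above.
    """
    if len(actual) != len(expected):
        raise ValueError("actual and expected must have the same length")
    mismatches = []
    for epoch, (a, e) in enumerate(zip(actual, expected), start=1):
        # math.isclose treats values that differ only by rounding noise as equal
        if not math.isclose(a, e, rel_tol=rel_tol):
            mismatches.append((epoch, a, e))
    return mismatches


# Placeholder values for illustration only, not the schedule discussed here.
toy_expected = [0.1, 0.09, 0.08, 0.07]
toy_actual = [0.1, 0.09, 0.05, 0.04]

for epoch, a, e in find_lr_mismatches(toy_actual, toy_expected):
    print(f"epoch: {epoch} \t actual lr: {a} \t expected lr: {e}")
```

With the real lists, the call would presumably be `find_lr_mismatches(lr_first + lr, lr_expected)`. If this still reports the same epochs as the exact comparison, the mismatch is a real discontinuity and not a rounding artifact.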