Hello

I hope you all are doing well.

Could you please help me understand the following strange behavior of PyTorch DDP?

I have two models that I train using one optimizer. When I run the training on one machine that has two GPUs, there are no problems whatsoever. It runs with both nccl and gloo.

On the other hand, it hangs when I use one machine with four GPUs and nccl. The hang occurs when I wrap each of the models with DDP, as in the following code:

model = nn.parallel.DistributedDataParallel(
    model,
    device_ids=[gpu],
    broadcast_buffers=False,
    find_unused_parameters=True,
)
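For anyone who wants to reproduce this, here is a minimal sketch of the setup described above: two models, each wrapped in DDP, sharing one optimizer. It is not the actual training code. The two `nn.Linear` layers, the helper names `build_ddp_pair` and `train_step`, the SGD optimizer, the dummy loss, and the rendezvous address and port are all placeholder assumptions. It falls back to CPU when the backend is gloo so it can be run without GPUs.

```python
import itertools
import os

import torch
import torch.distributed as dist
import torch.nn as nn


def build_ddp_pair(rank: int, world_size: int, backend: str = "gloo"):
    """Wrap two models in DDP and create one optimizer over both."""
    # Rendezvous settings; the address and port are placeholder assumptions.
    os.environ.setdefault("MASTER_ADDR", "127.0.0.1")
    os.environ.setdefault("MASTER_PORT", "29531")
    dist.init_process_group(backend, rank=rank, world_size=world_size)

    # Use one GPU per rank for nccl; otherwise stay on CPU.
    use_cuda = backend == "nccl" and torch.cuda.is_available()
    device = torch.device(f"cuda:{rank}" if use_cuda else "cpu")
    if use_cuda:
        torch.cuda.set_device(device)

    # Hypothetical stand-ins for the two real models.
    model_a = nn.Linear(8, 4).to(device)
    model_b = nn.Linear(4, 2).to(device)

    # Same DDP options as in the post; device_ids only applies to CUDA modules.
    ddp_kwargs = dict(broadcast_buffers=False, find_unused_parameters=True)
    if use_cuda:
        ddp_kwargs["device_ids"] = [rank]
    model_a = nn.parallel.DistributedDataParallel(model_a, **ddp_kwargs)
    model_b = nn.parallel.DistributedDataParallel(model_b, **ddp_kwargs)

    # A single optimizer over the parameters of both wrapped models.
    optimizer = torch.optim.SGD(
        itertools.chain(model_a.parameters(), model_b.parameters()), lr=0.01
    )
    return model_a, model_b, optimizer, device


def train_step(model_a, model_b, optimizer, device):
    """Run one forward/backward/step through both models on random data."""
    x = torch.randn(16, 8, device=device)
    optimizer.zero_grad()
    loss = model_b(model_a(x)).pow(2).mean()  # dummy loss for illustration
    loss.backward()
    optimizer.step()
    return loss.item()
```

In a real run, `build_ddp_pair` would be called once in each process (one process per GPU, launched for example with `torch.multiprocessing.spawn` or `torchrun`) with `backend="nccl"`, and `dist.destroy_process_group()` would be called at the end.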

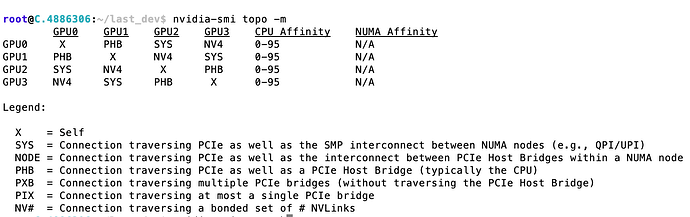

When I switch to gloo, it runs, but it is extremely slow. The GPU configuration is as follows.

I also tried using a subset of two of the four GPUs, but it hangs as well, at a different line of the code.

Thank you very much