I am trying to train a video classification model. I wrote a custom video dataset that reads pre-extracted video frames from an SSD. I want to train on a cluster of GPU machines with 4 GPUs per node.

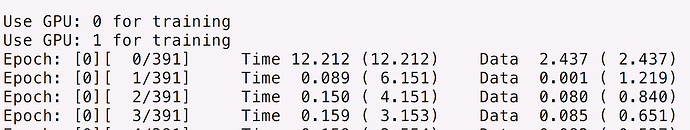

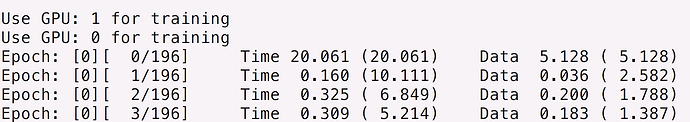
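For readers unfamiliar with this kind of setup, here is a minimal sketch of a map-style dataset over pre-extracted frames. It is hypothetical and not the dataset from this post: the directory layout (`root/<class>/<video>/<frame>.jpg`), the class name `FrameFolderDataset`, and the evenly spaced clip sampling are all assumptions. Frame decoding is omitted, so it returns raw encoded bytes. A `DataLoader` only needs `__len__` and `__getitem__`, so this plain class can be passed to one directly.

```python
from pathlib import Path


class FrameFolderDataset:
    """Hypothetical map-style dataset over pre-extracted frames.

    Assumed layout: root/<class_name>/<video_id>/<frame>.jpg
    """

    def __init__(self, root, clip_len=8):
        self.clip_len = clip_len
        root = Path(root)
        classes = sorted(p.name for p in root.iterdir() if p.is_dir())
        self.class_to_idx = {c: i for i, c in enumerate(classes)}
        # Index the directory tree once, in the constructor, so that
        # __getitem__ only has to read files.
        self.samples = []
        for c in classes:
            for video_dir in sorted((root / c).iterdir()):
                if video_dir.is_dir():
                    frames = sorted(video_dir.iterdir())
                    self.samples.append((frames, self.class_to_idx[c]))

    def __len__(self):
        return len(self.samples)

    def __getitem__(self, index):
        frames, label = self.samples[index]
        n = len(frames)
        # clip_len frame indices spread evenly over the video (assumes n > 0)
        idx = [i * n // self.clip_len for i in range(self.clip_len)]
        # Raw encoded bytes; decoding (e.g. with PIL) and tensor conversion are omitted
        clip = [frames[i].read_bytes() for i in idx]
        return clip, label
```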

While training on one machine with 4 GPUs, I see the following under two settings:

Case 1. DistributedDataParallel: with 4 threads for the machine (1 thread per GPU), loading the first batch of every epoch takes a very long time (~110 seconds).

Case 2. DataParallel: with 4 threads for the machine, loading the first batch of every epoch is much faster than in Case 1 (~1.5 seconds).

I still want to use DistributedDataParallel because I want to train on multiple machines, but the extra ~110 seconds per epoch is too much. How can I improve the distributed setting?
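One thing commonly tried in this situation is keeping DataLoader workers alive across epochs. Below is a minimal sketch of a per-process DDP loader, assuming PyTorch 1.7 or later (for `persistent_workers`). `ToyDataset`, `build_train_loader`, and `run_epochs` are hypothetical names, and the toy dataset stands in for the real video dataset. It is a suggestion to test, not a confirmed fix.

```python
import torch
from torch.utils.data import DataLoader, Dataset
from torch.utils.data.distributed import DistributedSampler


class ToyDataset(Dataset):
    """Hypothetical stand-in for the real video dataset."""

    def __init__(self, n=64):
        self.n = n

    def __len__(self):
        return self.n

    def __getitem__(self, i):
        return torch.full((3,), float(i)), i % 10


def build_train_loader(dataset, rank, world_size, batch_size, workers_per_rank):
    # Each DDP process reads only its own 1/world_size shard of the dataset;
    # passing num_replicas and rank explicitly avoids needing init_process_group here
    sampler = DistributedSampler(dataset, num_replicas=world_size, rank=rank, shuffle=True)
    return DataLoader(
        dataset,
        batch_size=batch_size,
        sampler=sampler,  # the sampler shuffles, so DataLoader's shuffle stays False
        num_workers=workers_per_rank,
        pin_memory=torch.cuda.is_available(),
        # Keep worker processes alive between epochs instead of starting new ones
        # each time a new epoch's iterator is created (requires num_workers > 0)
        persistent_workers=workers_per_rank > 0,
    )


def run_epochs(loader, num_epochs):
    for epoch in range(num_epochs):
        # Without set_epoch, DistributedSampler yields the same order every epoch
        loader.sampler.set_epoch(epoch)
        for images, target in loader:
            ...  # forward / backward / optimizer step would go here
```

Worker processes start when the iterator for an epoch is created; with `persistent_workers=True` that happens only in the first epoch. If the time is instead spent inside the dataset's first `__getitem__` calls, this setting alone will not remove it.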

Logs for reference.

DataParallel, 4 threads:

Epoch: [0][0/7508] Time 13.270 (13.270) Data 1.521 (1.521) Loss 6.2721 (6.2721) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][10/7508] Time 0.265 (1.459) Data 0.000 (0.138) Loss 17.9221 (17.1892) Acc@1 0.000 (0.284) Acc@5 0.000 (2.273)

Epoch: [0][20/7508] Time 0.265 (0.890) Data 0.000 (0.077) Loss 20.7100 (14.7189) Acc@1 0.000 (0.149) Acc@5 0.000 (1.786)

DistributedDataParallel, 4 threads (1 thread per GPU):

Epoch: [0][0/7508] Time 117.339 (117.339) Data 114.749 (114.749) Loss 6.3962 (6.3962) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][0/7508] Time 117.070 (117.070) Data 110.291 (110.291) Loss 6.3759 (6.3759) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][0/7508] Time 117.479 (117.479) Data 114.120 (114.120) Loss 6.3918 (6.3918) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][0/7508] Time 116.495 (116.495) Data 112.885 (112.885) Loss 6.0654 (6.0654) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][10/7508] Time 0.248 (10.814) Data 0.000 (10.262) Loss 13.6280 (14.8321) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][10/7508] Time 0.248 (10.870) Data 0.000 (10.030) Loss 12.6716 (16.3162) Acc@1 12.500 (1.136) Acc@5 12.500 (2.273)

Epoch: [0][10/7508] Time 0.252 (10.904) Data 0.000 (10.375) Loss 6.9328 (14.4093) Acc@1 0.000 (1.136) Acc@5 25.000 (3.409)

Epoch: [0][10/7508] Time 0.251 (10.891) Data 0.000 (10.432) Loss 12.2168 (13.2482) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)

Epoch: [0][20/7508] Time 0.252 (5.813) Data 0.000 (5.260) Loss 6.3584 (13.0522) Acc@1 0.000 (0.595) Acc@5 0.000 (1.190)

Epoch: [0][20/7508] Time 0.254 (5.831) Data 0.000 (5.440) Loss 7.1645 (12.1273) Acc@1 0.000 (0.595) Acc@5 0.000 (1.786)

Epoch: [0][20/7508] Time 0.250 (5.825) Data 0.000 (5.470) Loss 6.9019 (12.8164) Acc@1 0.000 (0.595) Acc@5 0.000 (0.595)

Epoch: [0][20/7508] Time 0.252 (5.784) Data 0.000 (5.381) Loss 6.9181 (11.9140) Acc@1 0.000 (0.000) Acc@5 0.000 (0.000)
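In these logs the value in parentheses is a running average over the batches seen so far, as printed by the ImageNet example's `AverageMeter`. Multiplying it by the number of batches recovers the total data time, which shows that the slowdown is a one-time cost at the start of the epoch: for example, 10.262 × 11 ≈ 112.9 and 5.381 × 21 ≈ 113.0, both close to one of the first-batch times (112.885), while the per-batch data time is 0.000 afterwards. A small parsing sketch of that arithmetic (the function names are hypothetical):

```python
import re

# Matches the iteration index and the "Data <current> (<running average>)" field
DATA_RE = re.compile(
    r"Epoch: \[(\d+)\]\[(\d+)/\d+\].*?Data\s+([\d.]+)\s+\(([\d.]+)\)"
)


def parse_data_times(line):
    """Return (iteration, current_data_time, running_avg_data_time) for one log line."""
    m = DATA_RE.search(line)
    if m is None:
        raise ValueError(f"not a training log line: {line!r}")
    return int(m.group(2)), float(m.group(3)), float(m.group(4))


def implied_total_data_time(line):
    """Running average times batches seen so far = total data time so far.

    When every batch after the first takes ~0 s, this total is essentially
    the cost of the first batch.
    """
    iteration, _, avg = parse_data_times(line)
    return avg * (iteration + 1)
```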

For the training script, I am using a modified version of https://github.com/pytorch/examples/blob/master/imagenet/main.py
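In the distributed path of that script (one process per GPU), `main_worker` divides `--batch-size` and `--workers` by the number of GPUs per node, so `--workers 4` on a 4-GPU node gives each process a single DataLoader worker, whereas DataParallel runs one process with all 4. The sketch below mirrors that arithmetic, assuming the modified script keeps it; the function name is hypothetical and the batch size of 32 is only an example.

```python
def per_process_loader_args(batch_size, workers, ngpus_per_node):
    """Mirror the per-process split in the ImageNet example's DDP path."""
    # Per-node batch size is divided evenly across the GPU processes
    per_gpu_batch = int(batch_size / ngpus_per_node)
    # Per-node worker count is divided across processes, rounding up
    per_gpu_workers = int((workers + ngpus_per_node - 1) / ngpus_per_node)
    return per_gpu_batch, per_gpu_workers


print(per_process_loader_args(32, 4, 4))   # 1 worker per process
print(per_process_loader_args(32, 16, 4))  # 4 workers per process
```

Under this split, passing a larger `--workers` value (e.g. 16 on a 4-GPU node) is what gives each DDP process the same number of workers the single DataParallel process had.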